mirror of

https://github.com/VictoriaMetrics/VictoriaMetrics.git

synced 2025-03-21 15:45:01 +00:00

Merge branch 'public-single-node' into pmm-6401-read-prometheus-data-files


commit

660c3c7251

146 changed files with 3868 additions and 1156 deletions

Makefile
README.md

app

victoria-metrics

vmagent

vmalert

vmauth

vmbackup

vmctl

vmgateway

README.md
vmgateway-access-control.jpg
vmgateway-overview.jpeg
vmgateway-rate-limiting.jpg
vmgateway.png

vminsert

common

csvimport

graphite

influx

native

opentsdb

opentsdbhttp

prometheusimport

prompush

promremotewrite

vmimport

vmrestore

vmselect

deployment/docker

docs

Articles.md
BestPractices.md
CHANGELOG.md
CaseStudies.md
Cluster-VictoriaMetrics.md
FAQ.md
Home.md
MetricsQL.md
Quick-Start.md
Release-Guide.md
SampleSizeCalculations.md
Single-server-VictoriaMetrics.md
vmagent.md
vmalert.md
vmauth.md
vmbackup.md
vmctl.md
vmgateway-access-control.jpg
vmgateway-overview.jpeg
vmgateway-rate-limiting.jpg
vmgateway.md
vmrestore.md

go.mod
go.sum
lib

persistentqueue

promauth

promscrape

client.go
config.go
config_test.go

discovery

consul

dockerswarm

ec2

eureka

kubernetes

openstack

discoveryutils

scraper.go
scrapework.go
proxy

storage

vendor/cloud.google.com/go

36

Makefile


|

|

@ -53,6 +53,14 @@ vmutils: \

|

|||

vmrestore \

|

||||

vmctl

|

||||

|

||||

vmutils-pure: \

|

||||

vmagent-pure \

|

||||

vmalert-pure \

|

||||

vmauth-pure \

|

||||

vmbackup-pure \

|

||||

vmrestore-pure \

|

||||

vmctl-pure

|

||||

|

||||

vmutils-arm64: \

|

||||

vmagent-arm64 \

|

||||

vmalert-arm64 \

|

||||

|

|

@ -103,7 +111,7 @@ release-victoria-metrics-generic: victoria-metrics-$(GOARCH)-prod

|

|||

victoria-metrics-$(GOARCH)-prod \

|

||||

&& sha256sum victoria-metrics-$(GOARCH)-$(PKG_TAG).tar.gz \

|

||||

victoria-metrics-$(GOARCH)-prod \

|

||||

| sed s/-$(GOARCH)// > victoria-metrics-$(GOARCH)-$(PKG_TAG)_checksums.txt

|

||||

| sed s/-$(GOARCH)-prod/-prod/ > victoria-metrics-$(GOARCH)-$(PKG_TAG)_checksums.txt

|

||||

|

||||

release-vmutils: \

|

||||

release-vmutils-amd64 \

|

||||

|

|

@ -145,7 +153,7 @@ release-vmutils-generic: \

|

|||

vmbackup-$(GOARCH)-prod \

|

||||

vmrestore-$(GOARCH)-prod \

|

||||

vmctl-$(GOARCH)-prod \

|

||||

| sed s/-$(GOARCH)// > vmutils-$(GOARCH)-$(PKG_TAG)_checksums.txt

|

||||

| sed s/-$(GOARCH)-prod/-prod/ > vmutils-$(GOARCH)-$(PKG_TAG)_checksums.txt

|

||||

|

||||

release-vmutils-windows-generic: \

|

||||

vmagent-windows-$(GOARCH)-prod \

|

||||

|

|

@ -254,11 +262,21 @@ golangci-lint: install-golangci-lint

|

|||

install-golangci-lint:

|

||||

which golangci-lint || curl -sSfL https://raw.githubusercontent.com/golangci/golangci-lint/master/install.sh | sh -s -- -b $(shell go env GOPATH)/bin v1.29.0

|

||||

|

||||

copy-docs:

|

||||

echo "---\nsort: ${ORDER}\n---\n" > ${DST}

|

||||

cat ${SRC} >> ${DST}

|

||||

|

||||

# Copies docs for all components and adds the order tag.

|

||||

# Cluster docs are supposed to be ordered as 9th.

|

||||

# The rest of the docs is ordered manually.

|

||||

docs-sync:

|

||||

cp app/vmagent/README.md docs/vmagent.md

|

||||

cp app/vmalert/README.md docs/vmalert.md

|

||||

cp app/vmauth/README.md docs/vmauth.md

|

||||

cp app/vmbackup/README.md docs/vmbackup.md

|

||||

cp app/vmrestore/README.md docs/vmrestore.md

|

||||

cp app/vmctl/README.md docs/vmctl.md

|

||||

cp README.md docs/Single-server-VictoriaMetrics.md

|

||||

SRC=README.md DST=docs/Single-server-VictoriaMetrics.md ORDER=1 $(MAKE) copy-docs

|

||||

SRC=app/vmagent/README.md DST=docs/vmagent.md ORDER=2 $(MAKE) copy-docs

|

||||

SRC=app/vmalert/README.md DST=docs/vmalert.md ORDER=3 $(MAKE) copy-docs

|

||||

SRC=app/vmauth/README.md DST=docs/vmauth.md ORDER=4 $(MAKE) copy-docs

|

||||

SRC=app/vmbackup/README.md DST=docs/vmbackup.md ORDER=5 $(MAKE) copy-docs

|

||||

SRC=app/vmrestore/README.md DST=docs/vmrestore.md ORDER=6 $(MAKE) copy-docs

|

||||

SRC=app/vmctl/README.md DST=docs/vmctl.md ORDER=7 $(MAKE) copy-docs

|

||||

SRC=app/vmgateway/README.md DST=docs/vmgateway.md ORDER=8 $(MAKE) copy-docs

|

||||

|

||||

|

||||

|

|

|

|||

22

README.md


|

|

@ -1,3 +1,5 @@

|

|||

# VictoriaMetrics

|

||||

|

||||

[](https://github.com/VictoriaMetrics/VictoriaMetrics/releases/latest)

|

||||

[](https://hub.docker.com/r/victoriametrics/victoria-metrics)

|

||||

[](http://slack.victoriametrics.com/)

|

||||

|

|

@ -6,9 +8,7 @@

|

|||

[](https://github.com/VictoriaMetrics/VictoriaMetrics/actions)

|

||||

[](https://codecov.io/gh/VictoriaMetrics/VictoriaMetrics)

|

||||

|

||||

|

||||

|

||||

## VictoriaMetrics

|

||||

<img src="logo.png" width="300" alt="Victoria Metrics logo">

|

||||

|

||||

VictoriaMetrics is a fast, cost-effective and scalable monitoring solution and time series database.

|

||||

|

||||

|

|

@ -117,6 +117,7 @@ Alphabetically sorted links to case studies:

|

|||

* [Prometheus querying API usage](#prometheus-querying-api-usage)

|

||||

* [Prometheus querying API enhancements](#prometheus-querying-api-enhancements)

|

||||

* [Graphite API usage](#graphite-api-usage)

|

||||

* [Graphite Render API usage](#graphite-render-api-usage)

|

||||

* [Graphite Metrics API usage](#graphite-metrics-api-usage)

|

||||

* [Graphite Tags API usage](#graphite-tags-api-usage)

|

||||

* [How to build from sources](#how-to-build-from-sources)

|

||||

|

|

@ -1324,6 +1325,8 @@ See the example of alerting rules for VM components [here](https://github.com/Vi

|

|||

* It is recommended to use default command-line flag values (i.e. don't set them explicitly) until the need

|

||||

of tweaking these flag values arises.

|

||||

|

||||

* It is recommended inspecting logs during troubleshooting, since they may contain useful information.

|

||||

|

||||

* It is recommended upgrading to the latest available release from [this page](https://github.com/VictoriaMetrics/VictoriaMetrics/releases),

|

||||

since the encountered issue could be already fixed there.

|

||||

|

||||

|

|

@ -1338,8 +1341,6 @@ See the example of alerting rules for VM components [here](https://github.com/Vi

|

|||

if background merge cannot be initiated due to free disk space shortage. The value shows the number of per-month partitions,

|

||||

which would start background merge if they had more free disk space.

|

||||

|

||||

* It is recommended inspecting logs during troubleshooting, since they may contain useful information.

|

||||

|

||||

* VictoriaMetrics buffers incoming data in memory for up to a few seconds before flushing it to persistent storage.

|

||||

This may lead to the following "issues":

|

||||

* Data becomes available for querying in a few seconds after inserting. It is possible to flush in-memory buffers to persistent storage

|

||||

|

|

@ -1349,10 +1350,13 @@ See the example of alerting rules for VM components [here](https://github.com/Vi

|

|||

|

||||

* If VictoriaMetrics works slowly and eats more than a CPU core per 100K ingested data points per second,

|

||||

then it is likely you have too many active time series for the current amount of RAM.

|

||||

VictoriaMetrics [exposes](#monitoring) `vm_slow_*` metrics, which could be used as an indicator of low amounts of RAM.

|

||||

It is recommended increasing the amount of RAM on the node with VictoriaMetrics in order to improve

|

||||

VictoriaMetrics [exposes](#monitoring) `vm_slow_*` metrics such as `vm_slow_row_inserts_total` and `vm_slow_metric_name_loads_total`, which could be used

|

||||

as an indicator of low amounts of RAM. It is recommended increasing the amount of RAM on the node with VictoriaMetrics in order to improve

|

||||

ingestion and query performance in this case.

|

||||

|

||||

* If the order of labels for the same metrics can change over time (e.g. if `metric{k1="v1",k2="v2"}` may become `metric{k2="v2",k1="v1"}`),

|

||||

then it is recommended running VictoriaMetrics with `-sortLabels` command-line flag in order to reduce memory usage and CPU usage.

|

||||

|

||||

* VictoriaMetrics prioritizes data ingestion over data querying. So if it doesn't have enough resources for data ingestion,

|

||||

then data querying may slow down significantly.

|

||||

|

||||

|

|

@ -1758,6 +1762,8 @@ Pass `-help` to VictoriaMetrics in order to see the list of supported command-li

|

|||

The maximum time the request waits for execution when -search.maxConcurrentRequests limit is reached; see also -search.maxQueryDuration (default 10s)

|

||||

-search.maxStalenessInterval duration

|

||||

The maximum interval for staleness calculations. By default it is automatically calculated from the median interval between samples. This flag could be useful for tuning Prometheus data model closer to Influx-style data model. See https://prometheus.io/docs/prometheus/latest/querying/basics/#staleness for details. See also '-search.maxLookback' flag, which has the same meaning due to historical reasons

|

||||

-search.maxStatusRequestDuration duration

|

||||

The maximum duration for /api/v1/status/* requests (default 5m0s)

|

||||

-search.maxStepForPointsAdjustment duration

|

||||

The maximum step when /api/v1/query_range handler adjusts points with timestamps closer than -search.latencyOffset to the current time. The adjustment is needed because such points may contain incomplete data (default 1m0s)

|

||||

-search.maxTagKeys int

|

||||

|

|

@ -1788,6 +1794,8 @@ Pass `-help` to VictoriaMetrics in order to see the list of supported command-li

|

|||

The maximum number of CPU cores to use for small merges. Default value is used if set to 0

|

||||

-snapshotAuthKey string

|

||||

authKey, which must be passed in query string to /snapshot* pages

|

||||

-sortLabels

|

||||

Whether to sort labels for incoming samples before writing them to storage. This may be needed for reducing memory usage at storage when the order of labels in incoming samples is random. For example, if m{k1="v1",k2="v2"} may be sent as m{k2="v2",k1="v1"}. Enabled sorting for labels can slow down ingestion performance a bit

|

||||

-storageDataPath string

|

||||

Path to storage data (default "victoria-metrics-data")

|

||||

-tls

|

||||

|

|

|

|||

|

|

@ -92,6 +92,9 @@ func main() {

|

|||

|

||||

func requestHandler(w http.ResponseWriter, r *http.Request) bool {

|

||||

if r.URL.Path == "/" {

|

||||

if r.Method != "GET" {

|

||||

return false

|

||||

}

|

||||

fmt.Fprintf(w, "<h2>Single-node VictoriaMetrics.</h2></br>")

|

||||

fmt.Fprintf(w, "See docs at <a href='https://victoriametrics.github.io/'>https://victoriametrics.github.io/</a></br>")

|

||||

fmt.Fprintf(w, "Useful endpoints: </br>")

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

## vmagent

|

||||

# vmagent

|

||||

|

||||

`vmagent` is a tiny but mighty agent which helps you collect metrics from various sources

|

||||

and store them in [VictoriaMetrics](https://github.com/VictoriaMetrics/VictoriaMetrics)

|

||||

|

|

@ -178,7 +178,7 @@ The following scrape types in [scrape_config](https://prometheus.io/docs/prometh

|

|||

|

||||

Please file feature requests to [our issue tracker](https://github.com/VictoriaMetrics/VictoriaMetrics/issues) if you need other service discovery mechanisms to be supported by `vmagent`.

|

||||

|

||||

`vmagent` also support the following additional options in `scrape_config` section:

|

||||

`vmagent` also support the following additional options in `scrape_configs` section:

|

||||

|

||||

* `disable_compression: true` - to disable response compression on a per-job basis. By default `vmagent` requests compressed responses from scrape targets

|

||||

to save network bandwidth.

|

||||

|

|

@ -262,7 +262,7 @@ See [these docs](https://victoriametrics.github.io/#deduplication) for details.

|

|||

|

||||

## Scraping targets via a proxy

|

||||

|

||||

`vmagent` supports scraping targets via http and https proxies. Proxy address must be specified in `proxy_url` option. For example, the following scrape config instructs

|

||||

`vmagent` supports scraping targets via http, https and socks5 proxies. Proxy address must be specified in `proxy_url` option. For example, the following scrape config instructs

|

||||

target scraping via https proxy at `https://proxy-addr:1234`:

|

||||

|

||||

```yml

|

||||

|

|

@ -273,6 +273,7 @@ scrape_configs:

|

|||

|

||||

Proxy can be configured with the following optional settings:

|

||||

|

||||

* `proxy_authorization` for generic token authorization. See [Prometheus docs for details on authorization section](https://prometheus.io/docs/prometheus/latest/configuration/configuration/#scrape_config)

|

||||

* `proxy_bearer_token` and `proxy_bearer_token_file` for Bearer token authorization

|

||||

* `proxy_basic_auth` for Basic authorization. See [these docs](https://prometheus.io/docs/prometheus/latest/configuration/configuration/#scrape_config).

|

||||

* `proxy_tls_config` for TLS config. See [these docs](https://prometheus.io/docs/prometheus/latest/configuration/configuration/#tls_config).

|

||||

|

|

@ -702,6 +703,8 @@ See the docs at https://victoriametrics.github.io/vmagent.html .

|

|||

-remoteWrite.urlRelabelConfig array

|

||||

Optional path to relabel config for the corresponding -remoteWrite.url

|

||||

Supports array of values separated by comma or specified via multiple flags.

|

||||

-sortLabels

|

||||

Whether to sort labels for incoming samples before writing them to all the configured remote storage systems. This may be needed for reducing memory usage at remote storage when the order of labels in incoming samples is random. For example, if m{k1="v1",k2="v2"} may be sent as m{k2="v2",k1="v1"}. Enabled sorting for labels can slow down ingestion performance a bit

|

||||

-tls

|

||||

Whether to enable TLS (aka HTTPS) for incoming requests. -tlsCertFile and -tlsKeyFile must be set if -tls is set

|

||||

-tlsCertFile string

|

||||

|

|

|

|||

|

|

@ -145,6 +145,9 @@ func main() {

|

|||

|

||||

func requestHandler(w http.ResponseWriter, r *http.Request) bool {

|

||||

if r.URL.Path == "/" {

|

||||

if r.Method != "GET" {

|

||||

return false

|

||||

}

|

||||

fmt.Fprintf(w, "vmagent - see docs at https://victoriametrics.github.io/vmagent.html")

|

||||

return true

|

||||

}

|

||||

|

|

|

|||

|

|

@ -160,7 +160,7 @@ func getTLSConfig(argIdx int) (*tls.Config, error) {

|

|||

if c.CAFile == "" && c.CertFile == "" && c.KeyFile == "" && c.ServerName == "" && !c.InsecureSkipVerify {

|

||||

return nil, nil

|

||||

}

|

||||

cfg, err := promauth.NewConfig(".", nil, "", "", c)

|

||||

cfg, err := promauth.NewConfig(".", nil, nil, "", "", c)

|

||||

if err != nil {

|

||||

return nil, fmt.Errorf("cannot populate TLS config: %w", err)

|

||||

}

|

||||

|

|

|

|||

|

|

@ -27,6 +27,9 @@ var (

|

|||

// the maximum number of rows to send per each block.

|

||||

const maxRowsPerBlock = 10000

|

||||

|

||||

// the maximum number of labels to send per each block.

|

||||

const maxLabelsPerBlock = 40000

|

||||

|

||||

type pendingSeries struct {

|

||||

mu sync.Mutex

|

||||

wr writeRequest

|

||||

|

|

@ -125,6 +128,7 @@ func (wr *writeRequest) reset() {

|

|||

}

|

||||

|

||||

func (wr *writeRequest) flush() {

|

||||

sortLabelsIfNeeded(wr.tss)

|

||||

wr.wr.Timeseries = wr.tss

|

||||

wr.adjustSampleValues()

|

||||

atomic.StoreUint64(&wr.lastFlushTime, fasttime.UnixTimestamp())

|

||||

|

|

@ -153,7 +157,7 @@ func (wr *writeRequest) push(src []prompbmarshal.TimeSeries) {

|

|||

for i := range src {

|

||||

tssDst = append(tssDst, prompbmarshal.TimeSeries{})

|

||||

wr.copyTimeSeries(&tssDst[len(tssDst)-1], &src[i])

|

||||

if len(wr.samples) >= maxRowsPerBlock {

|

||||

if len(wr.samples) >= maxRowsPerBlock || len(wr.labels) >= maxLabelsPerBlock {

|

||||

wr.tss = tssDst

|

||||

wr.flush()

|

||||

tssDst = wr.tss

|

||||

|

|

|

|||

|

|

@ -151,11 +151,13 @@ func Push(wr *prompbmarshal.WriteRequest) {

|

|||

for len(tss) > 0 {

|

||||

// Process big tss in smaller blocks in order to reduce the maximum memory usage

|

||||

samplesCount := 0

|

||||

labelsCount := 0

|

||||

i := 0

|

||||

for i < len(tss) {

|

||||

samplesCount += len(tss[i].Samples)

|

||||

labelsCount += len(tss[i].Labels)

|

||||

i++

|

||||

if samplesCount > maxRowsPerBlock {

|

||||

if samplesCount >= maxRowsPerBlock || labelsCount >= maxLabelsPerBlock {

|

||||

break

|

||||

}

|

||||

}
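The loop above cuts a large write request into blocks bounded by both the number of samples and the number of labels. A simplified sketch of that splitting follows. The `series` type and the `splitIntoBlocks` helper are hypothetical stand-ins for `prompbmarshal.TimeSeries` and the in-place loop in `Push`; the limits copy the constants shown in this diff.

```go
package main

import "fmt"

// Limits copied from the constants shown in the diff.
const (
	maxRowsPerBlock   = 10000
	maxLabelsPerBlock = 40000
)

// series is a hypothetical, reduced stand-in for prompbmarshal.TimeSeries:
// only the label and sample counts matter for block splitting.
type series struct {
	labels  int
	samples int
}

// splitIntoBlocks cuts tss into consecutive blocks. A block is closed as soon
// as its accumulated samples or labels reach the corresponding limit, so the
// series that crosses the limit is still included in the current block.
func splitIntoBlocks(tss []series) [][]series {
	var blocks [][]series
	for len(tss) > 0 {
		samplesCount, labelsCount := 0, 0
		i := 0
		for i < len(tss) {
			samplesCount += tss[i].samples
			labelsCount += tss[i].labels
			i++
			if samplesCount >= maxRowsPerBlock || labelsCount >= maxLabelsPerBlock {
				break
			}
		}
		blocks = append(blocks, tss[:i])
		tss = tss[i:]
	}
	return blocks
}

func main() {
	// Three series with 30000 labels and a single sample each.
	tss := []series{{30000, 1}, {30000, 1}, {30000, 1}}
	for _, b := range splitIntoBlocks(tss) {
		fmt.Println(len(b)) // prints 2, then 1
	}
}
```

With only the sample limit in place, all three series would land in a single block even though together they carry 90000 labels; the label limit bounds memory for series with few samples but many labels.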

|

||||

|

|

@ -208,7 +210,13 @@ func newRemoteWriteCtx(argIdx int, remoteWriteURL string, maxInmemoryBlocks int,

|

|||

c := newClient(argIdx, remoteWriteURL, sanitizedURL, fq, *queues)

|

||||

sf := significantFigures.GetOptionalArgOrDefault(argIdx, 0)

|

||||

rd := roundDigits.GetOptionalArgOrDefault(argIdx, 100)

|

||||

pss := make([]*pendingSeries, *queues)

|

||||

pssLen := *queues

|

||||

if n := cgroup.AvailableCPUs(); pssLen > n {

|

||||

// There is no sense in running more than availableCPUs concurrent pendingSeries,

|

||||

// since every pendingSeries can saturate up to a single CPU.

|

||||

pssLen = n

|

||||

}

|

||||

pss := make([]*pendingSeries, pssLen)

|

||||

for i := range pss {

|

||||

pss[i] = newPendingSeries(fq.MustWriteBlock, sf, rd)

|

||||

}
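The hunk above caps the number of `pendingSeries` by the number of available CPUs. A minimal sketch of that capping rule follows; `workerCount` is a hypothetical helper, and `runtime.GOMAXPROCS(0)` is used here only as a stand-in for the repository's `cgroup.AvailableCPUs()`.

```go
package main

import (
	"fmt"
	"runtime"
)

// workerCount returns the requested number of workers, capped by the number
// of CPUs available to the process. Running more CPU-bound workers than CPUs
// does not add throughput.
func workerCount(requested, availableCPUs int) int {
	if requested > availableCPUs {
		return availableCPUs
	}
	return requested
}

func main() {
	// Assumption: GOMAXPROCS approximates the CPUs available to the process.
	n := runtime.GOMAXPROCS(0)
	fmt.Println(workerCount(4*n, n) == n) // prints true
}
```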

|

||||

|

|

|

|||

51

app/vmagent/remotewrite/sort_labels.go

Normal file


|

|

@ -0,0 +1,51 @@

package remotewrite

import (
	"flag"
	"sort"
	"sync"

	"github.com/VictoriaMetrics/VictoriaMetrics/lib/prompbmarshal"
)

var sortLabels = flag.Bool("sortLabels", false, `Whether to sort labels for incoming samples before writing them to all the configured remote storage systems. `+
	`This may be needed for reducing memory usage at remote storage when the order of labels in incoming samples is random. `+
	`For example, if m{k1="v1",k2="v2"} may be sent as m{k2="v2",k1="v1"}. `+
	`Enabled sorting for labels can slow down ingestion performance a bit`)

// sortLabelsIfNeeded sorts labels if -sortLabels command-line flag is set.
func sortLabelsIfNeeded(tss []prompbmarshal.TimeSeries) {
	if !*sortLabels {
		return
	}
	// The slc is used for avoiding memory allocation when passing labels to sort.Sort.
	slc := sortLabelsCtxPool.Get().(*sortLabelsCtx)
	for i := range tss {
		slc.labels = tss[i].Labels
		sort.Sort(&slc.labels)
	}
	slc.labels = nil
	sortLabelsCtxPool.Put(slc)
}

type sortLabelsCtx struct {
	labels sortedLabels
}

var sortLabelsCtxPool = &sync.Pool{
	New: func() interface{} {
		return &sortLabelsCtx{}
	},
}

type sortedLabels []prompbmarshal.Label

func (sl *sortedLabels) Len() int { return len(*sl) }
func (sl *sortedLabels) Less(i, j int) bool {
	a := *sl
	return a[i].Name < a[j].Name
}
func (sl *sortedLabels) Swap(i, j int) {
	a := *sl
	a[i], a[j] = a[j], a[i]
}

|

||||
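The `-sortLabels` flag exists because the same label set can arrive in different orders. The sketch below illustrates why sorting by label name helps: after sorting, both orderings produce the same canonical key. The `Label` type and the `seriesKey` helper are hypothetical and are not part of the repository; the real storage may identify series differently.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Label is a hypothetical stand-in for prompbmarshal.Label.
type Label struct {
	Name  string
	Value string
}

// seriesKey returns a canonical string for a label set. The labels are copied
// and sorted by name first, so the input order does not affect the result.
func seriesKey(labels []Label) string {
	sorted := append([]Label(nil), labels...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Name < sorted[j].Name })
	var sb strings.Builder
	for _, l := range sorted {
		fmt.Fprintf(&sb, "%s=%q,", l.Name, l.Value)
	}
	return sb.String()
}

func main() {
	a := []Label{{"k1", "v1"}, {"k2", "v2"}}
	b := []Label{{"k2", "v2"}, {"k1", "v1"}}
	fmt.Println(seriesKey(a) == seriesKey(b)) // prints true
}
```

Unlike this sketch, the code in the diff sorts the labels in place and reuses a pooled context so that `sort.Sort` does not allocate on every call.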

|

|

@ -1,10 +1,10 @@

|

|||

## vmalert

|

||||

# vmalert

|

||||

|

||||

`vmalert` executes a list of given [alerting](https://prometheus.io/docs/prometheus/latest/configuration/alerting_rules/)

|

||||

or [recording](https://prometheus.io/docs/prometheus/latest/configuration/recording_rules/)

|

||||

rules against configured address.

|

||||

|

||||

### Features:

|

||||

## Features

|

||||

* Integration with [VictoriaMetrics](https://github.com/VictoriaMetrics/VictoriaMetrics) TSDB;

|

||||

* VictoriaMetrics [MetricsQL](https://victoriametrics.github.io/MetricsQL.html)

|

||||

support and expressions validation;

|

||||

|

|

@ -15,7 +15,7 @@ rules against configured address.

|

|||

* Graphite datasource can be used for alerting and recording rules. See [these docs](#graphite) for details.

|

||||

* Lightweight without extra dependencies.

|

||||

|

||||

### Limitations:

|

||||

## Limitations

|

||||

* `vmalert` executes queries against a remote datasource, which carries reliability risks because of the network.

|

||||

It is recommended to configure alerts thresholds and rules expressions with understanding that network request

|

||||

may fail;

|

||||

|

|

@ -24,7 +24,7 @@ storage is asynchronous. Hence, user shouldn't rely on recording rules chaining

|

|||

recording rule is reused in next one;

|

||||

* `vmalert` has no UI, just an API for getting groups and rules statuses.

|

||||

|

||||

### QuickStart

|

||||

## QuickStart

|

||||

|

||||

To build `vmalert` from sources:

|

||||

```

|

||||

|

|

@ -67,7 +67,7 @@ groups:

|

|||

[ - <rule_group> ]

|

||||

```

|

||||

|

||||

#### Groups

|

||||

### Groups

|

||||

|

||||

Each group has following attributes:

|

||||

```yaml

|

||||

|

|

@ -89,7 +89,7 @@ rules:

|

|||

[ - <rule> ... ]

|

||||

```

|

||||

|

||||

#### Rules

|

||||

### Rules

|

||||

|

||||

There are two types of Rules:

|

||||

* [alerting](https://prometheus.io/docs/prometheus/latest/configuration/alerting_rules/) -

|

||||

|

|

@ -102,7 +102,7 @@ and save their result as a new set of time series.

|

|||

`vmalert` forbids to define duplicates - rules with the same combination of name, expression and labels

|

||||

within one group.

|

||||

|

||||

##### Alerting rules

|

||||

#### Alerting rules

|

||||

|

||||

The syntax for alerting rule is following:

|

||||

```yaml

|

||||

|

|

@ -131,7 +131,7 @@ annotations:

|

|||

[ <labelname>: <tmpl_string> ]

|

||||

```

|

||||

|

||||

##### Recording rules

|

||||

#### Recording rules

|

||||

|

||||

The syntax for recording rules is following:

|

||||

```yaml

|

||||

|

|

@ -155,7 +155,7 @@ labels:

|

|||

For recording rules to work `-remoteWrite.url` must be specified.

|

||||

|

||||

|

||||

#### Alerts state on restarts

|

||||

### Alerts state on restarts

|

||||

|

||||

`vmalert` has no local storage, so alerts state is stored in the process memory. Hence, after reloading of `vmalert`

|

||||

the process alerts state will be lost. To avoid this situation, `vmalert` should be configured via the following flags:

|

||||

|

|

@ -171,7 +171,7 @@ in configured `-remoteRead.url`, weren't updated in the last `1h` or received st

|

|||

rules configuration.

|

||||

|

||||

|

||||

#### WEB

|

||||

### WEB

|

||||

|

||||

`vmalert` runs a web-server (`-httpListenAddr`) for serving metrics and alerts endpoints:

|

||||

* `http://<vmalert-addr>/api/v1/groups` - list of all loaded groups and rules;

|

||||

|

|

@ -182,7 +182,7 @@ Used as alert source in AlertManager.

|

|||

* `http://<vmalert-addr>/-/reload` - hot configuration reload.

|

||||

|

||||

|

||||

### Graphite

|

||||

## Graphite

|

||||

|

||||

vmalert sends requests to `<-datasource.url>/render?format=json` during evaluation of alerting and recording rules

|

||||

if the corresponding group or rule contains `type: "graphite"` config option. It is expected that the `<-datasource.url>/render`

|

||||

|

|

@ -191,7 +191,7 @@ When using vmalert with both `graphite` and `prometheus` rules configured agains

|

|||

to set `-datasource.appendTypePrefix` flag to `true`, so vmalert can adjust URL prefix automatically based on query type.

|

||||

|

||||

|

||||

### Configuration

|

||||

## Configuration

|

||||

|

||||

The shortlist of configuration flags is the following:

|

||||

```

|

||||

|

|

@ -375,43 +375,43 @@ command-line flags with their descriptions.

|

|||

To reload configuration without `vmalert` restart send SIGHUP signal

|

||||

or send GET request to `/-/reload` endpoint.

|

||||

|

||||

### Contributing

|

||||

## Contributing

|

||||

|

||||

`vmalert` is mostly designed and built by VictoriaMetrics community.

|

||||

Feel free to share your experience and ideas for improving this

|

||||

software. Please keep simplicity as the main priority.

|

||||

|

||||

### How to build from sources

|

||||

## How to build from sources

|

||||

|

||||

It is recommended using

|

||||

[binary releases](https://github.com/VictoriaMetrics/VictoriaMetrics/releases)

|

||||

- `vmalert` is located in `vmutils-*` archives there.

|

||||

|

||||

|

||||

#### Development build

|

||||

### Development build

|

||||

|

||||

1. [Install Go](https://golang.org/doc/install). The minimum supported version is Go 1.15.

|

||||

2. Run `make vmalert` from the root folder of [the repository](https://github.com/VictoriaMetrics/VictoriaMetrics).

|

||||

It builds `vmalert` binary and puts it into the `bin` folder.

|

||||

|

||||

#### Production build

|

||||

### Production build

|

||||

|

||||

1. [Install docker](https://docs.docker.com/install/).

|

||||

2. Run `make vmalert-prod` from the root folder of [the repository](https://github.com/VictoriaMetrics/VictoriaMetrics).

|

||||

It builds `vmalert-prod` binary and puts it into the `bin` folder.

|

||||

|

||||

|

||||

#### ARM build

|

||||

### ARM build

|

||||

|

||||

ARM build may run on Raspberry Pi or on [energy-efficient ARM servers](https://blog.cloudflare.com/arm-takes-wing/).

|

||||

|

||||

#### Development ARM build

|

||||

### Development ARM build

|

||||

|

||||

1. [Install Go](https://golang.org/doc/install). The minimum supported version is Go 1.15.

|

||||

2. Run `make vmalert-arm` or `make vmalert-arm64` from the root folder of [the repository](https://github.com/VictoriaMetrics/VictoriaMetrics).

|

||||

It builds `vmalert-arm` or `vmalert-arm64` binary respectively and puts it into the `bin` folder.

|

||||

|

||||

#### Production ARM build

|

||||

### Production ARM build

|

||||

|

||||

1. [Install docker](https://docs.docker.com/install/).

|

||||

2. Run `make vmalert-arm-prod` or `make vmalert-arm64-prod` from the root folder of [the repository](https://github.com/VictoriaMetrics/VictoriaMetrics).

|

||||

|

|

|

|||

|

|

@ -29,6 +29,9 @@ var pathList = [][]string{

|

|||

func (rh *requestHandler) handler(w http.ResponseWriter, r *http.Request) bool {

|

||||

switch r.URL.Path {

|

||||

case "/":

|

||||

if r.Method != "GET" {

|

||||

return false

|

||||

}

|

||||

for _, path := range pathList {

|

||||

p, doc := path[0], path[1]

|

||||

fmt.Fprintf(w, "<a href='%s'>%q</a> - %s<br/>", p, p, doc)

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

## vmauth

|

||||

# vmauth

|

||||

|

||||

`vmauth` is a simple auth proxy and router for [VictoriaMetrics](https://github.com/VictoriaMetrics/VictoriaMetrics).

|

||||

It reads username and password from [Basic Auth headers](https://en.wikipedia.org/wiki/Basic_access_authentication),

|

||||

|

|

@ -23,7 +23,8 @@ Docker images for `vmauth` are available [here](https://hub.docker.com/r/victori

|

|||

|

||||

Pass `-help` to `vmauth` in order to see all the supported command-line flags with their descriptions.

|

||||

|

||||

Feel free [contacting us](mailto:info@victoriametrics.com) if you need customized auth proxy for VictoriaMetrics with the support of LDAP, SSO, RBAC, SAML, accounting, limits, etc.

|

||||

Feel free [contacting us](mailto:info@victoriametrics.com) if you need customized auth proxy for VictoriaMetrics with the support of LDAP, SSO, RBAC, SAML,

|

||||

accounting and rate limiting such as [vmgateway](https://victoriametrics.github.io/vmgateway.html).

|

||||

|

||||

|

||||

## Auth config

|

||||

|

|

@ -36,11 +37,15 @@ Auth config is represented in the following simple `yml` format:

|

|||

# Usernames must be unique.

|

||||

|

||||

users:

|

||||

# Requests with the 'Authorization: Bearer XXXX' header are proxied to http://localhost:8428 .

|

||||

# For example, http://vmauth:8427/api/v1/query is proxied to http://localhost:8428/api/v1/query

|

||||

- bearer_token: "XXXX"

|

||||

url_prefix: "http://localhost:8428"

|

||||

|

||||

# The user for querying local single-node VictoriaMetrics.

|

||||

# All the requests to http://vmauth:8427 with the given Basic Auth (username:password)

|

||||

# will be routed to http://localhost:8428 .

|

||||

# For example, http://vmauth:8427/api/v1/query is routed to http://localhost:8428/api/v1/query

|

||||

# will be proxied to http://localhost:8428 .

|

||||

# For example, http://vmauth:8427/api/v1/query is proxied to http://localhost:8428/api/v1/query

|

||||

- username: "local-single-node"

|

||||

password: "***"

|

||||

url_prefix: "http://localhost:8428"

|

||||

|

|

@ -48,8 +53,8 @@ users:

|

|||

# The user for querying account 123 in VictoriaMetrics cluster

|

||||

# See https://victoriametrics.github.io/Cluster-VictoriaMetrics.html#url-format

|

||||

# All the requests to http://vmauth:8427 with the given Basic Auth (username:password)

|

||||

# will be routed to http://vmselect:8481/select/123/prometheus .

|

||||

# For example, http://vmauth:8427/api/v1/query is routed to http://vmselect:8481/select/123/prometheus/api/v1/select

|

||||

# will be proxied to http://vmselect:8481/select/123/prometheus .

|

||||

# For example, http://vmauth:8427/api/v1/query is proxied to http://vmselect:8481/select/123/prometheus/api/v1/query

|

||||

- username: "cluster-select-account-123"

|

||||

password: "***"

|

||||

url_prefix: "http://vmselect:8481/select/123/prometheus"

|

||||

|

|

@ -57,8 +62,8 @@ users:

|

|||

# The user for inserting Prometheus data into VictoriaMetrics cluster under account 42

|

||||

# See https://victoriametrics.github.io/Cluster-VictoriaMetrics.html#url-format

|

||||

# All the requests to http://vmauth:8427 with the given Basic Auth (username:password)

|

||||

# will be routed to http://vminsert:8480/insert/42/prometheus .

|

||||

# For example, http://vmauth:8427/api/v1/write is routed to http://vminsert:8480/insert/42/prometheus/api/v1/write

|

||||

# will be proxied to http://vminsert:8480/insert/42/prometheus .

|

||||

# For example, http://vmauth:8427/api/v1/write is proxied to http://vminsert:8480/insert/42/prometheus/api/v1/write

|

||||

- username: "cluster-insert-account-42"

|

||||

password: "***"

|

||||

url_prefix: "http://vminsert:8480/insert/42/prometheus"

|

||||

|

|

@ -66,9 +71,9 @@ users:

|

|||

|

||||

# A single user for querying and inserting data:

|

||||

# - Requests to http://vmauth:8427/api/v1/query, http://vmauth:8427/api/v1/query_range

|

||||

# and http://vmauth:8427/api/v1/label/<label_name>/values are routed to http://vmselect:8481/select/42/prometheus.

|

||||

# For example, http://vmauth:8427/api/v1/query is routed to http://vmselect:8480/select/42/prometheus/api/v1/query

|

||||

# - Requests to http://vmauth:8427/api/v1/write are routed to http://vminsert:8480/insert/42/prometheus/api/v1/write

|

||||

# and http://vmauth:8427/api/v1/label/<label_name>/values are proxied to http://vmselect:8481/select/42/prometheus.

|

||||

# For example, http://vmauth:8427/api/v1/query is proxied to http://vmselect:8481/select/42/prometheus/api/v1/query

|

||||

# - Requests to http://vmauth:8427/api/v1/write are proxied to http://vminsert:8480/insert/42/prometheus/api/v1/write

|

||||

- username: "foobar"

|

||||

url_map:

|

||||

- src_paths: ["/api/v1/query", "/api/v1/query_range", "/api/v1/label/[^/]+/values"]

|

||||

|

|

|

|||

|

|

@ -1,6 +1,7 @@

|

|||

package main

|

||||

|

||||

import (

|

||||

"encoding/base64"

|

||||

"flag"

|

||||

"fmt"

|

||||

"io/ioutil"

|

||||

|

|

@ -29,10 +30,11 @@ type AuthConfig struct {

|

|||

|

||||

// UserInfo is user information read from authConfigPath

|

||||

type UserInfo struct {

|

||||

Username string `yaml:"username"`

|

||||

Password string `yaml:"password"`

|

||||

URLPrefix string `yaml:"url_prefix"`

|

||||

URLMap []URLMap `yaml:"url_map"`

|

||||

BearerToken string `yaml:"bearer_token"`

|

||||

Username string `yaml:"username"`

|

||||

Password string `yaml:"password"`

|

||||

URLPrefix string `yaml:"url_prefix"`

|

||||

URLMap []URLMap `yaml:"url_map"`

|

||||

|

||||

requests *metrics.Counter

|

||||

}

|

||||

|

|

@ -150,12 +152,27 @@ func parseAuthConfig(data []byte) (map[string]*UserInfo, error) {

|

|||

if len(uis) == 0 {

|

||||

return nil, fmt.Errorf("`users` section cannot be empty in AuthConfig")

|

||||

}

|

||||

m := make(map[string]*UserInfo, len(uis))

|

||||

byAuthToken := make(map[string]*UserInfo, len(uis))

|

||||

byUsername := make(map[string]bool, len(uis))

|

||||

byBearerToken := make(map[string]bool, len(uis))

|

||||

for i := range uis {

|

||||

ui := &uis[i]

|

||||

if m[ui.Username] != nil {

|

||||

if ui.BearerToken == "" && ui.Username == "" {

|

||||

return nil, fmt.Errorf("either bearer_token or username must be set")

|

||||

}

|

||||

if ui.BearerToken != "" && ui.Username != "" {

|

||||

return nil, fmt.Errorf("bearer_token=%q and username=%q cannot be set simultaneously", ui.BearerToken, ui.Username)

|

||||

}

|

||||

if byBearerToken[ui.BearerToken] {

|

||||

return nil, fmt.Errorf("duplicate bearer_token found; bearer_token: %q", ui.BearerToken)

|

||||

}

|

||||

if byUsername[ui.Username] {

|

||||

return nil, fmt.Errorf("duplicate username found; username: %q", ui.Username)

|

||||

}

|

||||

authToken := getAuthToken(ui.BearerToken, ui.Username, ui.Password)

|

||||

if byAuthToken[authToken] != nil {

|

||||

return nil, fmt.Errorf("duplicate auth token found for bearer_token=%q, username=%q: %q", ui.BearerToken, ui.Username, authToken)

|

||||

}

|

||||

if len(ui.URLPrefix) > 0 {

|

||||

urlPrefix, err := sanitizeURLPrefix(ui.URLPrefix)

|

||||

if err != nil {

|

||||

|

|

@ -176,10 +193,29 @@ func parseAuthConfig(data []byte) (map[string]*UserInfo, error) {

|

|||

if len(ui.URLMap) == 0 && len(ui.URLPrefix) == 0 {

|

||||

return nil, fmt.Errorf("missing `url_prefix`")

|

||||

}

|

||||

ui.requests = metrics.GetOrCreateCounter(fmt.Sprintf(`vmauth_user_requests_total{username=%q}`, ui.Username))

|

||||

m[ui.Username] = ui

|

||||

if ui.BearerToken != "" {

|

||||

if ui.Password != "" {

|

||||

return nil, fmt.Errorf("password shouldn't be set for bearer_token %q", ui.BearerToken)

|

||||

}

|

||||

ui.requests = metrics.GetOrCreateCounter(`vmauth_user_requests_total{username="bearer_token"}`)

|

||||

byBearerToken[ui.BearerToken] = true

|

||||

}

|

||||

if ui.Username != "" {

|

||||

ui.requests = metrics.GetOrCreateCounter(fmt.Sprintf(`vmauth_user_requests_total{username=%q}`, ui.Username))

|

||||

byUsername[ui.Username] = true

|

||||

}

|

||||

byAuthToken[authToken] = ui

|

||||

}

|

||||

return m, nil

|

||||

return byAuthToken, nil

|

||||

}

|

||||

|

||||

func getAuthToken(bearerToken, username, password string) string {

|

||||

if bearerToken != "" {

|

||||

return "Bearer " + bearerToken

|

||||

}

|

||||

token := username + ":" + password

|

||||

token64 := base64.StdEncoding.EncodeToString([]byte(token))

|

||||

return "Basic " + token64

|

||||

}

|

||||

|

||||

func sanitizeURLPrefix(urlPrefix string) (string, error) {

|

||||

|

|

|

|||

|

|

@ -56,6 +56,22 @@ users:

|

|||

url_prefix: http:///bar

|

||||

`)

|

||||

|

||||

// Username and bearer_token in a single config

|

||||

f(`

|

||||

users:

|

||||

- username: foo

|

||||

bearer_token: bbb

|

||||

url_prefix: http://foo.bar

|

||||

`)

|

||||

|

||||

// Bearer_token and password in a single config

|

||||

f(`

|

||||

users:

|

||||

- password: foo

|

||||

bearer_token: bbb

|

||||

url_prefix: http://foo.bar

|

||||

`)

|

||||

|

||||

// Duplicate users

|

||||

f(`

|

||||

users:

|

||||

|

|

@ -67,6 +83,17 @@ users:

|

|||

url_prefix: https://sss.sss

|

||||

`)

|

||||

|

||||

// Duplicate bearer_tokens

|

||||

f(`

|

||||

users:

|

||||

- bearer_token: foo

|

||||

url_prefix: http://foo.bar

|

||||

- username: bar

|

||||

url_prefix: http://xxx.yyy

|

||||

- bearer_token: foo

|

||||

url_prefix: https://sss.sss

|

||||

`)

|

||||

|

||||

// Missing url_prefix in url_map

|

||||

f(`

|

||||

users:

|

||||

|

|

@ -113,7 +140,7 @@ users:

|

|||

password: bar

|

||||

url_prefix: http://aaa:343/bbb

|

||||

`, map[string]*UserInfo{

|

||||

"foo": {

|

||||

getAuthToken("", "foo", "bar"): {

|

||||

Username: "foo",

|

||||

Password: "bar",

|

||||

URLPrefix: "http://aaa:343/bbb",

|

||||

|

|

@ -128,11 +155,11 @@ users:

|

|||

- username: bar

|

||||

url_prefix: https://bar/x///

|

||||

`, map[string]*UserInfo{

|

||||

"foo": {

|

||||

getAuthToken("", "foo", ""): {

|

||||

Username: "foo",

|

||||

URLPrefix: "http://foo",

|

||||

},

|

||||

"bar": {

|

||||

getAuthToken("", "bar", ""): {

|

||||

Username: "bar",

|

||||

URLPrefix: "https://bar/x",

|

||||

},

|

||||

|

|

@ -141,15 +168,15 @@ users:

|

|||

// non-empty URLMap

|

||||

f(`

|

||||

users:

|

||||

- username: foo

|

||||

- bearer_token: foo

|

||||

url_map:

|

||||

- src_paths: ["/api/v1/query","/api/v1/query_range","/api/v1/label/[^./]+/.+"]

|

||||

url_prefix: http://vmselect/select/0/prometheus

|

||||

- src_paths: ["/api/v1/write"]

|

||||

url_prefix: http://vminsert/insert/0/prometheus

|

||||

`, map[string]*UserInfo{

|

||||

"foo": {

|

||||

Username: "foo",

|

||||

getAuthToken("foo", "", ""): {

|

||||

BearerToken: "foo",

|

||||

URLMap: []URLMap{

|

||||

{

|

||||

SrcPaths: getSrcPaths([]string{"/api/v1/query", "/api/v1/query_range", "/api/v1/label/[^./]+/.+"}),

|

||||

|

|

|

|||

|

|

@ -47,16 +47,16 @@ func main() {

|

|||

}

|

||||

|

||||

func requestHandler(w http.ResponseWriter, r *http.Request) bool {

|

||||

username, password, ok := r.BasicAuth()

|

||||

if !ok {

|

||||

authToken := r.Header.Get("Authorization")

|

||||

if authToken == "" {

|

||||

w.Header().Set("WWW-Authenticate", `Basic realm="Restricted"`)

|

||||

http.Error(w, "missing `Authorization: Basic *` header", http.StatusUnauthorized)

|

||||

http.Error(w, "missing `Authorization` request header", http.StatusUnauthorized)

|

||||

return true

|

||||

}

|

||||

ac := authConfig.Load().(map[string]*UserInfo)

|

||||

ui := ac[username]

|

||||

if ui == nil || ui.Password != password {

|

||||

httpserver.Errorf(w, r, "cannot find the provided username %q or password in config", username)

|

||||

ui := ac[authToken]

|

||||

if ui == nil {

|

||||

httpserver.Errorf(w, r, "cannot find the provided auth token %q in config", authToken)

|

||||

return true

|

||||

}

|

||||

ui.requests.Inc()

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

## vmbackup

|

||||

# vmbackup

|

||||

|

||||

`vmbackup` creates VictoriaMetrics data backups from [instant snapshots](https://victoriametrics.github.io/Single-server-VictoriaMetrics.html#how-to-work-with-snapshots).

|

||||

|

||||

|

|

|

|||

|

|

@ -9,33 +9,6 @@ Features:

|

|||

- [x] InfluxDB: migrate data from InfluxDB to VictoriaMetrics

|

||||

- [ ] Storage Management: data re-balancing between nodes

|

||||

|

||||

# Table of contents

|

||||

|

||||

* [Articles](#articles)

|

||||

* [How to build](#how-to-build)

|

||||

* [Migrating data from InfluxDB 1.x](#migrating-data-from-influxdb-1x)

|

||||

* [Data mapping](#data-mapping)

|

||||

* [Configuration](#configuration)

|

||||

* [Filtering](#filtering)

|

||||

* [Migrating data from InfluxDB 2.x](#migrating-data-from-influxdb-2x)

|

||||

* [Migrating data from Prometheus](#migrating-data-from-prometheus)

|

||||

* [Data mapping](#data-mapping-1)

|

||||

* [Configuration](#configuration-1)

|

||||

* [Filtering](#filtering-1)

|

||||

* [Migrating data from Thanos](#migrating-data-from-thanos)

|

||||

* [Current data](#current-data)

|

||||

* [Historical data](#historical-data)

|

||||

* [Migrating data from VictoriaMetrics](#migrating-data-from-victoriametrics)

|

||||

* [Native protocol](#native-protocol)

|

||||

* [Tuning](#tuning)

|

||||

* [Influx mode](#influx-mode)

|

||||

* [Prometheus mode](#prometheus-mode)

|

||||

* [VictoriaMetrics importer](#victoriametrics-importer)

|

||||

* [Importer stats](#importer-stats)

|

||||

* [Significant figures](#significant-figures)

|

||||

* [Adding extra labels](#adding-extra-labels)

|

||||

|

||||

|

||||

## Articles

|

||||

|

||||

* [How to migrate data from Prometheus](https://medium.com/@romanhavronenko/victoriametrics-how-to-migrate-data-from-prometheus-d44a6728f043)

|

||||

|

|

|

|||

287

app/vmgateway/README.md

Normal file


|

|

@ -0,0 +1,287 @@

|

|||

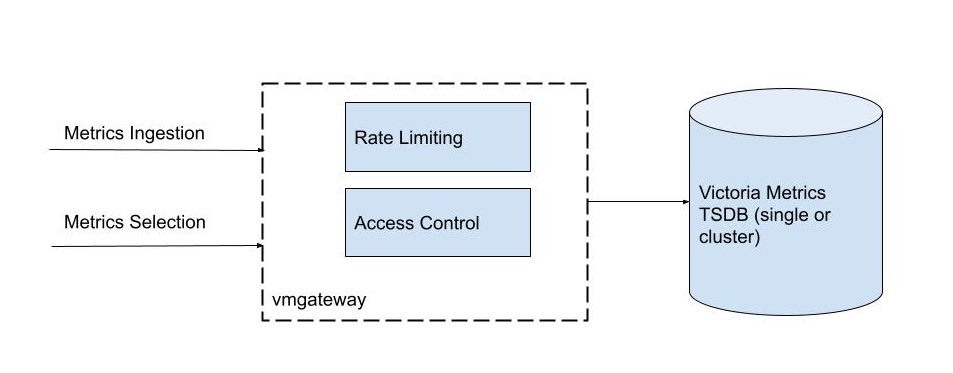

# vmgateway

|

||||

|

||||

|

||||

<img alt="vmgateway" src="vmgateway-overview.jpeg">

|

||||

|

||||

`vmgateway` is a proxy for Victoria Metrics TSDB. It provides the following features:

|

||||

|

||||

* Rate Limiter

|

||||

* Supports limits over multiple time intervals for ingesting and retrieving metrics, based on the utilization of cluster tenants

|

||||

* Token Access Control

|

||||

* Supports additional per-label access control for Single and Cluster versions of Victoria Metrics TSDB

|

||||

* Provides access by tenantID at Cluster version

|

||||

* Allows separating write/read/admin access to data

|

||||

|

||||

`vmgateway` is included in an [enterprise package](https://victoriametrics.com/enterprise.html).

|

||||

|

||||

|

||||

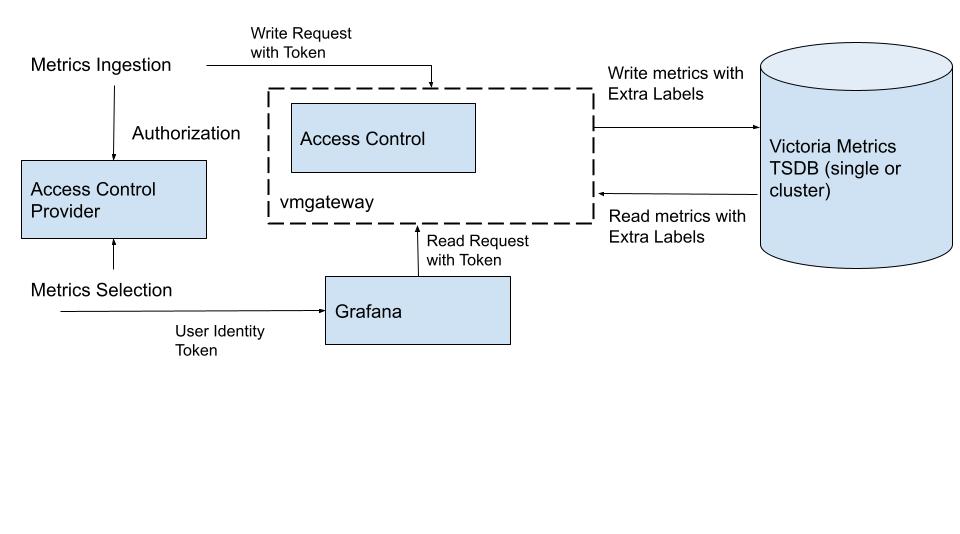

## Access Control

|

||||

|

||||

<img alt="vmgateway-ac" src="vmgateway-access-control.jpg">

|

||||

|

||||

`vmgateway` supports JWT-based authentication. The JWT payload can restrict access to a specific tenant, to specific labels, and to read and/or write operations.

|

||||

|

||||

The JWT payload must have the following format:

|

||||

```json

|

||||

{

|

||||

"exp": 1617304574,

|

||||

"vm_access": {

|

||||

"tenant_id": {

|

||||

"account_id": 1,

|

||||

"project_id": 5

|

||||

},

|

||||

"extra_labels": {

|

||||

"team": "dev",

|

||||

"project": "mobile"

|

||||

},

|

||||

"mode": 1

|

||||

}

|

||||

}

|

||||

```

|

||||

Where:

|

||||

- `exp` - required, expiration time as a Unix timestamp. If the token has expired, `vmgateway` rejects the request.

|

||||

- `vm_access` - required, dict with claim info, minimum form: `{"vm_access": {"tenant_id": {}}}`

|

||||

- `tenant_id` - optional, makes sense only in cluster mode; routes the request to the corresponding tenant.

|

||||

- `extra_labels` - optional, key-value pairs for label filters - added to ingested or selected metrics.

|

||||

- `mode` - optional, access mode for api - read, write, full. supported values: 0 - full (default value), 1 - read, 2 - write.
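The field rules above can be sketched in Go. This is only an illustration under stated assumptions, not `vmgateway`'s implementation: the type names `Claims`, `VMAccess`, `TenantID` and the helpers `parseClaims` and `encodeToken` are hypothetical, and the sketch deliberately does not verify the token signature.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// TenantID mirrors the `tenant_id` object of the payload (hypothetical type).
type TenantID struct {
	AccountID uint32 `json:"account_id"`
	ProjectID uint32 `json:"project_id"`
}

// VMAccess mirrors the `vm_access` claim (hypothetical type).
type VMAccess struct {
	TenantID    TenantID          `json:"tenant_id"`
	ExtraLabels map[string]string `json:"extra_labels"`
	Mode        int               `json:"mode"` // 0 - full (default), 1 - read, 2 - write
}

// Claims holds the two top-level fields described above.
type Claims struct {
	Exp      int64     `json:"exp"`
	VMAccess *VMAccess `json:"vm_access"`
}

// encodeToken wraps a JSON payload into an unsigned, demo-only token.
func encodeToken(payload string) string {
	return "e30." + base64.RawURLEncoding.EncodeToString([]byte(payload)) + ".sig"
}

// parseClaims decodes the payload part of the token and applies
// the `exp` and `vm_access` rules. It does NOT check the signature.
func parseClaims(token string, nowUnix int64) (*Claims, error) {
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return nil, errors.New("token must consist of three dot-separated parts")
	}
	payload, err := base64.RawURLEncoding.DecodeString(parts[1])
	if err != nil {
		return nil, fmt.Errorf("cannot decode payload: %w", err)
	}
	var c Claims
	if err := json.Unmarshal(payload, &c); err != nil {
		return nil, fmt.Errorf("cannot parse payload: %w", err)
	}
	if c.VMAccess == nil {
		return nil, errors.New("missing required `vm_access` claim")
	}
	if c.Exp <= nowUnix {
		return nil, errors.New("token is expired or `exp` is missing")
	}
	return &c, nil
}

func main() {
	token := encodeToken(`{"exp":1617304574,"vm_access":{"tenant_id":{"account_id":1,"project_id":5},"extra_labels":{"team":"dev","project":"mobile"},"mode":1}}`)
	c, err := parseClaims(token, 1617000000)
	if err != nil {
		fmt.Println("rejected:", err)
		return
	}
	fmt.Println(c.VMAccess.TenantID.AccountID, c.VMAccess.TenantID.ProjectID, c.VMAccess.Mode)
}
```

Passing the current time as a parameter keeps the expiry check easy to exercise with fixed timestamps.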

|

||||

|

||||

## QuickStart

|

||||

|

||||

Start the single-node version of VictoriaMetrics

|

||||

|

||||

```bash

|

||||

# single

|

||||

# start node

|

||||

./bin/victoria-metrics --selfScrapeInterval=10s

|

||||

```

|

||||

|

||||

Start vmgateway

|

||||

|

||||

```bash

|

||||

./bin/vmgateway -eula -enable.auth -read.url http://localhost:8428 --write.url http://localhost:8428

|

||||

```

|

||||

|

||||

Retrieve data from database

|

||||

```bash

|

||||

curl 'http://localhost:8431/api/v1/series/count' -H 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJ2bV9hY2Nlc3MiOnsidGVuYW50X2lkIjp7fSwicm9sZSI6MX0sImV4cCI6MTkzOTM0NjIxMH0.5WUxEfdcV9hKo4CtQdtuZYOGpGXWwaqM9VuVivMMrVg'

|

||||

```

|

||||

|

||||

A request with an incorrect token or without a token will be rejected:

|

||||

```bash

|

||||

curl 'http://localhost:8431/api/v1/series/count'

|

||||

|

||||

curl 'http://localhost:8431/api/v1/series/count' -H 'Authorization: Bearer incorrect-token'

|

||||

```

|

||||

|

||||

|

||||

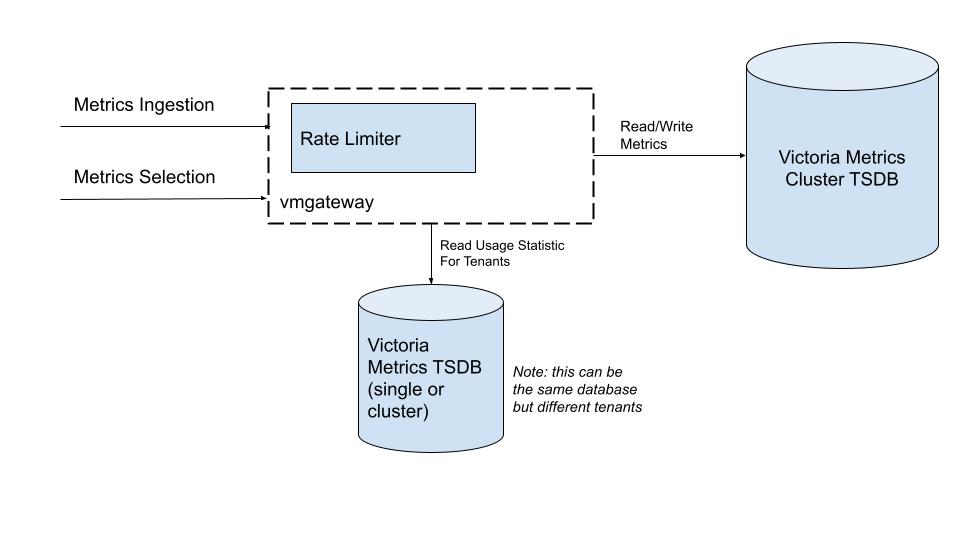

## Rate Limiter

|

||||

|

||||

<img alt="vmgateway-rl" src="vmgateway-rate-limiting.jpg">

|

||||

|

||||

The rate limiter restricts incoming requests according to pre-configured limits. It supports limiting both reads and writes per tenant.

|

||||

|

||||

`vmgateway` needs a datasource for rate limit queries. It can be the single-node or the cluster version of `victoria-metrics`.

|

||||

It must contain metrics scraped from the cluster that you want to rate limit.

|

||||

|

||||

List of supported limit types:

|

||||

- `queries` - count of api requests made at tenant to read api, such as `/api/v1/query`, `/api/v1/series` and others.

|

||||

- `active_series` - count of current active series at given tenant.

|

||||

- `new_series` - count of created series aka churn rate

|

||||

- `rows_inserted` - count of inserted rows per tenant.

|

||||

|

||||

List of supported time windows:

|

||||

- `minute`

|

||||

- `hour`

|

||||

|

||||

Limits can be specified per tenant, or at the global level by omitting `project_id` and `account_id`.

|

||||

|

||||

Example of configuration file:

|

||||

|

||||

```yaml

|

||||

limits:

|

||||

- type: queries

|

||||

value: 1000

|

||||

resolution: minute

|

||||

- type: queries

|

||||

value: 10000

|

||||

resolution: hour

|

||||

- type: queries

|

||||

value: 10

|

||||

resolution: minute

|

||||

project_id: 5

|

||||

account_id: 1

|

||||

```
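How global and per-tenant entries from such a file could be selected for a given tenant can be sketched in Go. The `Limit` type and the `matchingLimits` helper are hypothetical, and the sketch assumes that an omitted `account_id` or `project_id` means "any"; how `vmgateway` actually combines overlapping limits is not stated here.

```go
package main

import "fmt"

// Limit is a hypothetical in-memory form of one entry under `limits:`.
// Nil AccountID/ProjectID stand for fields omitted in the config.
type Limit struct {
	Type       string
	Value      int
	Resolution string
	AccountID  *uint32
	ProjectID  *uint32
}

// u32 returns a pointer to v; it keeps the literals below short.
func u32(v uint32) *uint32 { return &v }

// appliesTo reports whether the limit covers the given tenant.
// Omitted IDs are treated as wildcards (an assumption of this sketch).
func (l Limit) appliesTo(accountID, projectID uint32) bool {
	if l.AccountID != nil && *l.AccountID != accountID {
		return false
	}
	if l.ProjectID != nil && *l.ProjectID != projectID {
		return false
	}
	return true
}

// matchingLimits returns every limit of the given type that covers the tenant.
func matchingLimits(limits []Limit, typ string, accountID, projectID uint32) []Limit {
	var out []Limit
	for _, l := range limits {
		if l.Type == typ && l.appliesTo(accountID, projectID) {
			out = append(out, l)
		}
	}
	return out
}

// exampleLimits mirrors the configuration file shown above.
func exampleLimits() []Limit {
	return []Limit{
		{Type: "queries", Value: 1000, Resolution: "minute"},
		{Type: "queries", Value: 10000, Resolution: "hour"},
		{Type: "queries", Value: 10, Resolution: "minute", ProjectID: u32(5), AccountID: u32(1)},
	}
}

func main() {
	for _, l := range matchingLimits(exampleLimits(), "queries", 1, 5) {
		fmt.Printf("%s: %d per %s\n", l.Type, l.Value, l.Resolution)
	}
}
```

With this selection rule, tenant `1:5` is covered by both global entries and by its own stricter per-minute entry, while any other tenant sees only the two global ones.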

|

||||

|

||||

## QuickStart

|

||||

|

||||

The cluster version is required for rate limiting.

|

||||

```bash

|

||||

# start datasource for cluster metrics

|

||||

|

||||

cat << EOF > cluster.yaml

|

||||

scrape_configs:

|

||||

- job_name: cluster

|

||||

scrape_interval: 5s

|

||||

static_configs:

|

||||

- targets: ['127.0.0.1:8481','127.0.0.1:8482','127.0.0.1:8480']

|

||||

EOF

|

||||

|

||||

./bin/victoria-metrics --promscrape.config cluster.yaml

|

||||

|

||||

# start cluster

|

||||

|

||||

# start vmstorage, vmselect and vminsert

|

||||

./bin/vmstorage -eula

|

||||

./bin/vmselect -eula -storageNode 127.0.0.1:8401

|

||||

./bin/vminsert -eula -storageNode 127.0.0.1:8400

|

||||

|

||||

# create base rate limiting config:

|

||||

cat << EOF > limit.yaml

|

||||

limits:

|

||||

- type: queries

|

||||

value: 100

|

||||

- type: rows_inserted

|

||||

value: 100000

|

||||

- type: new_series

|

||||

value: 1000

|

||||

- type: active_series

|

||||

value: 100000

|

||||

- type: queries

|

||||

value: 1

|

||||

account_id: 15

|

||||

EOF

|

||||

|

||||

# start gateway with clusterMode

|

||||

./bin/vmgateway -eula -enable.rateLimit -ratelimit.config limit.yaml -datasource.url http://localhost:8428 -enable.auth -clusterMode -write.url=http://localhost:8480 --read.url=http://localhost:8481

|

||||

|

||||

# ingest a simple metric into the tenant with account_id 15

|

||||

curl 'http://localhost:8431/api/v1/import/prometheus' -X POST -d 'foo{bar="baz1"} 123' -H 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjAxNjIwMDAwMDAsInZtX2FjY2VzcyI6eyJ0ZW5hbnRfaWQiOnsiYWNjb3VudF9pZCI6MTV9fX0.PB1_KXDKPUp-40pxOGk6lt_jt9Yq80PIMpWVJqSForQ'

|

||||

# read metrics from the tenant with account_id 15

|

||||

curl 'http://localhost:8431/api/v1/labels' -H 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjAxNjIwMDAwMDAsInZtX2FjY2VzcyI6eyJ0ZW5hbnRfaWQiOnsiYWNjb3VudF9pZCI6MTV9fX0.PB1_KXDKPUp-40pxOGk6lt_jt9Yq80PIMpWVJqSForQ'

|

||||

|

||||

# check rate limit

|

||||

```

|

||||

|

||||

## Configuration

|

||||

|

||||

The shortlist of configuration flags is the following:

|

||||

```bash

|

||||

-clusterMode

|

||||

enable it for cluster version

|

||||

-datasource.appendTypePrefix

|

||||

Whether to add type prefix to -datasource.url based on the query type. Set to true if sending different query types to VMSelect URL.

|

||||

-datasource.basicAuth.password string

|

||||

Optional basic auth password for -datasource.url

|

||||

-datasource.basicAuth.username string

|

||||

Optional basic auth username for -datasource.url

|

||||

-datasource.lookback duration

|

||||

Lookback defines how far to look into past when evaluating queries. For example, if datasource.lookback=5m then param "time" with value now()-5m will be added to every query.

|

||||

-datasource.maxIdleConnections int

|

||||

Defines the number of idle (keep-alive) connections to the configured datasource. Consider setting this value equal to groups_total * group.concurrency. Too low a value may result in a high number of sockets in TIME_WAIT state. (default 100)

|

||||

-datasource.queryStep duration

|

||||

queryStep defines how far a value can fallback to when evaluating queries. For example, if datasource.queryStep=15s then param "step" with value "15s" will be added to every query.

|

||||

-datasource.tlsCAFile string

|

||||

Optional path to TLS CA file to use for verifying connections to -datasource.url. By default system CA is used

|

||||

-datasource.tlsCertFile string

|

||||

Optional path to client-side TLS certificate file to use when connecting to -datasource.url

|

||||

-datasource.tlsInsecureSkipVerify

|

||||

Whether to skip tls verification when connecting to -datasource.url

|

||||

-datasource.tlsKeyFile string

|

||||

Optional path to client-side TLS certificate key to use when connecting to -datasource.url

|

||||

-datasource.tlsServerName string

|

||||

Optional TLS server name to use for connections to -datasource.url. By default the server name from -datasource.url is used

|

||||

-datasource.url string

|

||||

Victoria Metrics or VMSelect url. Required parameter. E.g. http://127.0.0.1:8428

|

||||

-enable.auth

|

||||

enables auth with jwt token

|

||||

-enable.rateLimit

|

||||

enables rate limiter

|

||||

-enableTCP6

|

||||

Whether to enable IPv6 for listening and dialing. By default only IPv4 TCP and UDP is used

|

||||

-envflag.enable

|

||||

Whether to enable reading flags from environment variables additionally to command line. Command line flag values have priority over values from environment vars. Flags are read only from command line if this flag isn't set

|

||||

-envflag.prefix string

|

||||

Prefix for environment variables if -envflag.enable is set

|

||||

-eula

|

||||

By specifying this flag you confirm that you have an enterprise license and accept the EULA https://victoriametrics.com/assets/VM_EULA.pdf

|

||||

-fs.disableMmap

|

||||

Whether to use pread() instead of mmap() for reading data files. By default mmap() is used for 64-bit arches and pread() is used for 32-bit arches, since they cannot read data files bigger than 2^32 bytes in memory. mmap() is usually faster for reading small data chunks than pread()

|

||||

-http.connTimeout duration

|

||||

Incoming http connections are closed after the configured timeout. This may help spreading incoming load among a cluster of services behind load balancer. Note that the real timeout may be bigger by up to 10% as a protection from Thundering herd problem (default 2m0s)

|

||||

-http.disableResponseCompression

|

||||

Disable compression of HTTP responses for saving CPU resources. By default compression is enabled to save network bandwidth

|

||||

-http.idleConnTimeout duration

|

||||

Timeout for incoming idle http connections (default 1m0s)

|

||||

-http.maxGracefulShutdownDuration duration

|

||||

The maximum duration for graceful shutdown of HTTP server. Highly loaded server may require increased value for graceful shutdown (default 7s)

|

||||

-http.pathPrefix string

|

||||

An optional prefix to add to all the paths handled by http server. For example, if '-http.pathPrefix=/foo/bar' is set, then all the http requests will be handled on '/foo/bar/*' paths. This may be useful for proxied requests. See https://www.robustperception.io/using-external-urls-and-proxies-with-prometheus

|

||||

-http.shutdownDelay duration

|

||||

Optional delay before http server shutdown. During this delay the server returns non-OK responses from /health page, so load balancers can route new requests to other servers

|

||||

-httpAuth.password string

|

||||

Password for HTTP Basic Auth. The authentication is disabled if -httpAuth.username is empty

|

||||

-httpAuth.username string

|

||||

Username for HTTP Basic Auth. The authentication is disabled if empty. See also -httpAuth.password

|

||||

-httpListenAddr string

|

||||

TCP address to listen for http connections (default ":8431")

|

||||

-loggerDisableTimestamps

|

||||

Whether to disable writing timestamps in logs

|

||||

-loggerErrorsPerSecondLimit int

|

||||

Per-second limit on the number of ERROR messages. If more than the given number of errors are emitted per second, then the remaining errors are suppressed. Zero value disables the rate limit

|

||||

-loggerFormat string

|

||||

Format for logs. Possible values: default, json (default "default")

|

||||

-loggerLevel string

|

||||

Minimum level of errors to log. Possible values: INFO, WARN, ERROR, FATAL, PANIC (default "INFO")

|

||||

-loggerOutput string

|

||||

Output for the logs. Supported values: stderr, stdout (default "stderr")

|

||||

-loggerTimezone string

|

||||

Timezone to use for timestamps in logs. Timezone must be a valid IANA Time Zone. For example: America/New_York, Europe/Berlin, Etc/GMT+3 or Local (default "UTC")

|

||||

-loggerWarnsPerSecondLimit int

|

||||

Per-second limit on the number of WARN messages. If more than the given number of warns are emitted per second, then the remaining warns are suppressed. Zero value disables the rate limit

|

||||

-memory.allowedBytes size

|

||||

Allowed size of system memory VictoriaMetrics caches may occupy. This option overrides -memory.allowedPercent if set to non-zero value. Too low value may increase cache miss rate, which usually results in higher CPU and disk IO usage. Too high value may evict too much data from OS page cache, which will result in higher disk IO usage

|

||||

Supports the following optional suffixes for size values: KB, MB, GB, KiB, MiB, GiB (default 0)

|

||||

-memory.allowedPercent float

|

||||

Allowed percent of system memory VictoriaMetrics caches may occupy. See also -memory.allowedBytes. Too low value may increase cache miss rate, which usually results in higher CPU and disk IO usage. Too high value may evict too much data from OS page cache, which will result in higher disk IO usage (default 60)

|

||||

-metricsAuthKey string

|

||||

Auth key for /metrics. It overrides httpAuth settings

|

||||

-pprofAuthKey string

|

||||

Auth key for /debug/pprof. It overrides httpAuth settings

|

||||

-ratelimit.config string

|

||||

path for configuration file

|

||||

-ratelimit.extraLabels array

|

||||

additional labels that will be applied when fetching data from the datasource

|

||||

Supports array of values separated by comma or specified via multiple flags.

|

||||

-ratelimit.refreshInterval duration

|

||||

(default 5s)

|

||||

-read.url string

|

||||

read access url address, example: http://vmselect:8481

|

||||

-tls

|

||||

Whether to enable TLS (aka HTTPS) for incoming requests. -tlsCertFile and -tlsKeyFile must be set if -tls is set

|

||||

-tlsCertFile string

|

||||

Path to file with TLS certificate. Used only if -tls is set. Prefer ECDSA certs instead of RSA certs, since RSA certs are slow

|

||||

-tlsKeyFile string

|

||||

Path to file with TLS key. Used only if -tls is set

|

||||

-version

|

||||

Show VictoriaMetrics version

|

||||

-write.url string

|

||||

write access url address, example: http://vminsert:8480

|

||||

|

||||

```

|

||||

|

||||

## TroubleShooting

|

||||

|

||||

* Access control:

|

||||

* incorrect `jwt` format, try https://jwt.io/#debugger-io with our tokens

|

||||

* expired token, check `exp` field.

|

||||

* Rate Limiting:

|

||||

* `scrape_interval` at datasource, reduce it to apply limits faster.

|

||||

|

||||

|

||||

## Limitations

|

||||

|

||||

* Access Control:

|

||||

* The `jwt` token must be validated by an external system; currently `vmgateway` can't validate the signature.

|

||||

* RateLimiting:

|

||||

* limits applied based on queries to `datasource.url`

|

||||

* only cluster version can be rate-limited.
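Since signature validation has to happen outside `vmgateway`, an upstream component could check the token before forwarding the request. The Go sketch below shows one way to do that for HS256-signed tokens with the standard library only. The function names `signHS256` and `verifyHS256` and the shared secret are hypothetical, and the sketch assumes the `alg` header is `HS256` without inspecting it.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/base64"
	"fmt"
	"strings"
)

// signHS256 returns the base64url-encoded HMAC-SHA256 of signingInput,
// where signingInput is "<header>.<payload>" of a JWT.
func signHS256(signingInput string, secret []byte) string {
	mac := hmac.New(sha256.New, secret)
	mac.Write([]byte(signingInput))
	return base64.RawURLEncoding.EncodeToString(mac.Sum(nil))
}

// verifyHS256 reports whether the third part of the token is a valid
// HMAC-SHA256 signature of the first two parts for the given secret.
// It does not inspect the header or the claims.
func verifyHS256(token string, secret []byte) bool {
	i := strings.LastIndexByte(token, '.')
	if i < 0 {
		return false
	}
	signingInput, sig := token[:i], token[i+1:]
	if strings.Count(signingInput, ".") != 1 {
		return false
	}
	got, err := base64.RawURLEncoding.DecodeString(sig)
	if err != nil {
		return false
	}
	mac := hmac.New(sha256.New, secret)
	mac.Write([]byte(signingInput))
	// hmac.Equal compares in constant time.
	return hmac.Equal(got, mac.Sum(nil))
}

func main() {
	secret := []byte("demo-secret") // hypothetical shared secret
	signingInput := "aGVhZGVy.cGF5bG9hZA"
	token := signingInput + "." + signHS256(signingInput, secret)
	fmt.Println(verifyHS256(token, secret))
	fmt.Println(verifyHS256(token, []byte("wrong-secret")))
}
```

A production check would also parse the header, reject unexpected `alg` values and validate `exp`.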

|

||||

BIN

app/vmgateway/vmgateway-access-control.jpg

Normal file


Binary file not shown.

|

After

(image error) Size: 40 KiB |

BIN

app/vmgateway/vmgateway-overview.jpeg

Normal file


Binary file not shown.

|

After

(image error) Size: 48 KiB |

BIN

app/vmgateway/vmgateway-rate-limiting.jpg

Normal file


Binary file not shown.

|

After

(image error) Size: 35 KiB |

BIN

app/vmgateway/vmgateway.png

Normal file


Binary file not shown.

|

After

(image error) Size: 48 KiB |

|

|

@ -14,7 +14,7 @@ import (

|

|||

|

||||

// InsertCtx contains common bits for data points insertion.

|

||||

type InsertCtx struct {

|

||||

Labels []prompb.Label

|

||||

Labels sortedLabels

|

||||

|

||||

mrs []storage.MetricRow

|

||||

metricNamesBuf []byte

|

||||

|

|

|

|||

32

app/vminsert/common/sort_labels.go

Normal file


|

|

@ -0,0 +1,32 @@

|

|||

package common

|

||||

|

||||

import (

|

||||

"flag"

|

||||

"sort"

|

||||

|

||||

"github.com/VictoriaMetrics/VictoriaMetrics/lib/prompb"

|

||||

)

|

||||

|

||||

var sortLabels = flag.Bool("sortLabels", false, `Whether to sort labels for incoming samples before writing them to storage. `+

|

||||

`This may be needed for reducing memory usage at storage when the order of labels in incoming samples is random. `+

|

||||

`For example, if m{k1="v1",k2="v2"} may be sent as m{k2="v2",k1="v1"}. `+

|

||||

`Enabled sorting for labels can slow down ingestion performance a bit`)

|

||||

|

||||

// SortLabelsIfNeeded sorts labels if -sortLabels command-line flag is set

|

||||

func (ctx *InsertCtx) SortLabelsIfNeeded() {

|

||||

if *sortLabels {

|

||||

sort.Sort(&ctx.Labels)

|

||||

}

|

||||

}

|

||||

|

||||

type sortedLabels []prompb.Label

|

||||

|

||||

func (sl *sortedLabels) Len() int { return len(*sl) }

|

||||

func (sl *sortedLabels) Less(i, j int) bool {

|

||||

a := *sl

|

||||

return string(a[i].Name) < string(a[j].Name)

|

||||

}

|

||||

func (sl *sortedLabels) Swap(i, j int) {

|

||||

a := *sl

|

||||

a[i], a[j] = a[j], a[i]

|

||||

}
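The effect of the `sort.Interface` implementation above can be tried in isolation. The sketch below is a standalone approximation: the local `label` type stands in for `prompb.Label` (assumed here to carry `[]byte` name and value fields), and the helpers `sortByName` and `render` are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// label is a local stand-in for prompb.Label (assumed []byte fields).
type label struct {
	Name  []byte
	Value []byte
}

// sortedLabels sorts labels by name, like the type in the diff above.
type sortedLabels []label

func (sl *sortedLabels) Len() int { return len(*sl) }
func (sl *sortedLabels) Less(i, j int) bool {
	a := *sl
	return string(a[i].Name) < string(a[j].Name)
}
func (sl *sortedLabels) Swap(i, j int) {
	a := *sl
	a[i], a[j] = a[j], a[i]
}

// sortByName sorts the labels in place; the elements live in the shared
// backing array, so the caller observes the new order.
func sortByName(sl sortedLabels) { sort.Sort(&sl) }

// render prints the labels in the m{k="v",...} form used in the flag description.
func render(sl sortedLabels) string {
	s := "m{"
	for i, l := range sl {
		if i > 0 {
			s += ","
		}
		s += fmt.Sprintf("%s=%q", l.Name, l.Value)
	}
	return s + "}"
}

func main() {
	labels := sortedLabels{
		{Name: []byte("k2"), Value: []byte("v2")},
		{Name: []byte("k1"), Value: []byte("v1")},
	}
	sortByName(labels)
	fmt.Println(render(labels)) // prints m{k1="v1",k2="v2"}
}
```

After sorting, both `m{k1="v1",k2="v2"}` and `m{k2="v2",k1="v1"}` yield the same label order, which is the case the `-sortLabels` flag description refers to.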

|

||||

|

|

@ -55,6 +55,7 @@ func insertRows(rows []parser.Row, extraLabels []prompbmarshal.Label) error {

|

|||

// Skip metric without labels.

|

||||

continue

|

||||

}

|

||||

ctx.SortLabelsIfNeeded()

|

||||

if err := ctx.WriteDataPoint(nil, ctx.Labels, r.Timestamp, r.Value); err != nil {

|

||||

return err

|

||||

}

|

||||

|

|

|

|||

|

|

@ -45,6 +45,7 @@ func insertRows(rows []parser.Row) error {

|

|||

// Skip metric without labels.

|

||||

continue

|

||||

}

|

||||

ctx.SortLabelsIfNeeded()

|

||||