Mirror of https://github.com/VictoriaMetrics/VictoriaMetrics.git, synced 2024-11-21 14:44:00 +00:00

vendor: make vendor-update


parent 7c1daade15
commit a7f8ce5e3d

39 changed files with 2660 additions and 2172 deletions

18 go.mod
@@ -3,7 +3,7 @@ module github.com/VictoriaMetrics/VictoriaMetrics
go 1.19

require (
cloud.google.com/go/storage v1.28.1
cloud.google.com/go/storage v1.29.0
github.com/Azure/azure-sdk-for-go/sdk/azcore v1.3.0
github.com/Azure/azure-sdk-for-go/sdk/storage/azblob v0.6.1
github.com/VictoriaMetrics/fastcache v1.12.0
@@ -23,9 +23,9 @@ require (
github.com/golang/snappy v0.0.4
github.com/googleapis/gax-go/v2 v2.7.0
github.com/influxdata/influxdb v1.11.0
github.com/klauspost/compress v1.15.14
github.com/klauspost/compress v1.15.15
github.com/prometheus/prometheus v0.41.0
github.com/urfave/cli/v2 v2.23.7
github.com/urfave/cli/v2 v2.24.1
github.com/valyala/fastjson v1.6.4
github.com/valyala/fastrand v1.1.0
github.com/valyala/fasttemplate v1.2.2
@@ -35,19 +35,19 @@ require (
golang.org/x/net v0.5.0
golang.org/x/oauth2 v0.4.0
golang.org/x/sys v0.4.0
google.golang.org/api v0.107.0
google.golang.org/api v0.108.0
gopkg.in/yaml.v2 v2.4.0
)

require (
cloud.google.com/go v0.108.0 // indirect
cloud.google.com/go v0.109.0 // indirect
cloud.google.com/go/compute v1.15.1 // indirect
cloud.google.com/go/compute/metadata v0.2.3 // indirect
cloud.google.com/go/iam v0.10.0 // indirect
github.com/Azure/azure-sdk-for-go/sdk/internal v1.1.2 // indirect
github.com/VividCortex/ewma v1.2.0 // indirect
github.com/alecthomas/units v0.0.0-20211218093645-b94a6e3cc137 // indirect
github.com/aws/aws-sdk-go v1.44.180 // indirect
github.com/aws/aws-sdk-go v1.44.184 // indirect
github.com/aws/aws-sdk-go-v2/aws/protocol/eventstream v1.4.10 // indirect
github.com/aws/aws-sdk-go-v2/credentials v1.13.8 // indirect
github.com/aws/aws-sdk-go-v2/feature/ec2/imds v1.12.21 // indirect
@@ -67,7 +67,7 @@ require (
github.com/cpuguy83/go-md2man/v2 v2.0.2 // indirect
github.com/davecgh/go-spew v1.1.1 // indirect
github.com/dennwc/varint v1.0.0 // indirect
github.com/fatih/color v1.13.0 // indirect
github.com/fatih/color v1.14.0 // indirect
github.com/felixge/httpsnoop v1.0.3 // indirect
github.com/go-kit/log v0.2.1 // indirect
github.com/go-logfmt/logfmt v0.5.1 // indirect
@@ -106,13 +106,13 @@ require (
go.opentelemetry.io/otel/trace v1.11.2 // indirect
go.uber.org/atomic v1.10.0 // indirect
go.uber.org/goleak v1.2.0 // indirect
golang.org/x/exp v0.0.0-20230113213754-f9f960f08ad4 // indirect
golang.org/x/exp v0.0.0-20230118134722-a68e582fa157 // indirect
golang.org/x/sync v0.1.0 // indirect
golang.org/x/text v0.6.0 // indirect
golang.org/x/time v0.3.0 // indirect
golang.org/x/xerrors v0.0.0-20220907171357-04be3eba64a2 // indirect
google.golang.org/appengine v1.6.7 // indirect
google.golang.org/genproto v0.0.0-20230113154510-dbe35b8444a5 // indirect
google.golang.org/genproto v0.0.0-20230119192704-9d59e20e5cd1 // indirect
google.golang.org/grpc v1.52.0 // indirect
google.golang.org/protobuf v1.28.1 // indirect
gopkg.in/yaml.v3 v3.0.1 // indirect

40 go.sum
@@ -13,8 +13,8 @@ cloud.google.com/go v0.56.0/go.mod h1:jr7tqZxxKOVYizybht9+26Z/gUq7tiRzu+ACVAMbKV
cloud.google.com/go v0.57.0/go.mod h1:oXiQ6Rzq3RAkkY7N6t3TcE6jE+CIBBbA36lwQ1JyzZs=
cloud.google.com/go v0.62.0/go.mod h1:jmCYTdRCQuc1PHIIJ/maLInMho30T/Y0M4hTdTShOYc=
cloud.google.com/go v0.65.0/go.mod h1:O5N8zS7uWy9vkA9vayVHs65eM1ubvY4h553ofrNHObY=
cloud.google.com/go v0.108.0 h1:xntQwnfn8oHGX0crLVinvHM+AhXvi3QHQIEcX/2hiWk=
cloud.google.com/go v0.108.0/go.mod h1:lNUfQqusBJp0bgAg6qrHgYFYbTB+dOiob1itwnlD33Q=
cloud.google.com/go v0.109.0 h1:38CZoKGlCnPZjGdyj0ZfpoGae0/wgNfy5F0byyxg0Gk=
cloud.google.com/go v0.109.0/go.mod h1:2sYycXt75t/CSB5R9M2wPU1tJmire7AQZTPtITcGBVE=
cloud.google.com/go/bigquery v1.0.1/go.mod h1:i/xbL2UlR5RvWAURpBYZTtm/cXjCha9lbfbpx4poX+o=
cloud.google.com/go/bigquery v1.3.0/go.mod h1:PjpwJnslEMmckchkHFfq+HTD2DmtT67aNFKH1/VBDHE=
cloud.google.com/go/bigquery v1.4.0/go.mod h1:S8dzgnTigyfTmLBfrtrhyYhwRxG72rYxvftPBK2Dvzc=
@@ -39,8 +39,8 @@ cloud.google.com/go/storage v1.5.0/go.mod h1:tpKbwo567HUNpVclU5sGELwQWBDZ8gh0Zeo
cloud.google.com/go/storage v1.6.0/go.mod h1:N7U0C8pVQ/+NIKOBQyamJIeKQKkZ+mxpohlUTyfDhBk=
cloud.google.com/go/storage v1.8.0/go.mod h1:Wv1Oy7z6Yz3DshWRJFhqM/UCfaWIRTdp0RXyy7KQOVs=
cloud.google.com/go/storage v1.10.0/go.mod h1:FLPqc6j+Ki4BU591ie1oL6qBQGu2Bl/tZ9ullr3+Kg0=
cloud.google.com/go/storage v1.28.1 h1:F5QDG5ChchaAVQhINh24U99OWHURqrW8OmQcGKXcbgI=
cloud.google.com/go/storage v1.28.1/go.mod h1:Qnisd4CqDdo6BGs2AD5LLnEsmSQ80wQ5ogcBBKhU86Y=
cloud.google.com/go/storage v1.29.0 h1:6weCgzRvMg7lzuUurI4697AqIRPU1SvzHhynwpW31jI=
cloud.google.com/go/storage v1.29.0/go.mod h1:4puEjyTKnku6gfKoTfNOU/W+a9JyuVNxjpS5GBrB8h4=
dmitri.shuralyov.com/gpu/mtl v0.0.0-20190408044501-666a987793e9/go.mod h1:H6x//7gZCb22OMCxBHrMx7a5I7Hp++hsVxbQ4BYO7hU=
github.com/Azure/azure-sdk-for-go v65.0.0+incompatible h1:HzKLt3kIwMm4KeJYTdx9EbjRYTySD/t8i1Ee/W5EGXw=
github.com/Azure/azure-sdk-for-go/sdk/azcore v1.3.0 h1:VuHAcMq8pU1IWNT/m5yRaGqbK0BiQKHT8X4DTp9CHdI=
@@ -87,8 +87,8 @@ github.com/andybalholm/brotli v1.0.2/go.mod h1:loMXtMfwqflxFJPmdbJO0a3KNoPuLBgiu
github.com/andybalholm/brotli v1.0.3/go.mod h1:fO7iG3H7G2nSZ7m0zPUDn85XEX2GTukHGRSepvi9Eig=
github.com/armon/go-metrics v0.3.10 h1:FR+drcQStOe+32sYyJYyZ7FIdgoGGBnwLl+flodp8Uo=
github.com/aws/aws-sdk-go v1.38.35/go.mod h1:hcU610XS61/+aQV88ixoOzUoG7v3b31pl2zKMmprdro=
github.com/aws/aws-sdk-go v1.44.180 h1:VLZuAHI9fa/3WME5JjpVjcPCNfpGHVMiHx8sLHWhMgI=
github.com/aws/aws-sdk-go v1.44.180/go.mod h1:aVsgQcEevwlmQ7qHE9I3h+dtQgpqhFB+i8Phjh7fkwI=
github.com/aws/aws-sdk-go v1.44.184 h1:/MggyE66rOImXJKl1HqhLQITvWvqIV7w1Q4MaG6FHUo=
github.com/aws/aws-sdk-go v1.44.184/go.mod h1:aVsgQcEevwlmQ7qHE9I3h+dtQgpqhFB+i8Phjh7fkwI=
github.com/aws/aws-sdk-go-v2 v1.17.3 h1:shN7NlnVzvDUgPQ+1rLMSxY8OWRNDRYtiqe0p/PgrhY=
github.com/aws/aws-sdk-go-v2 v1.17.3/go.mod h1:uzbQtefpm44goOPmdKyAlXSNcwlRgF3ePWVW6EtJvvw=
github.com/aws/aws-sdk-go-v2/aws/protocol/eventstream v1.4.10 h1:dK82zF6kkPeCo8J1e+tGx4JdvDIQzj7ygIoLg8WMuGs=
@@ -166,8 +166,8 @@ github.com/envoyproxy/go-control-plane v0.10.3 h1:xdCVXxEe0Y3FQith+0cj2irwZudqGY
github.com/envoyproxy/protoc-gen-validate v0.1.0/go.mod h1:iSmxcyjqTsJpI2R4NaDN7+kN2VEUnK/pcBlmesArF7c=
github.com/envoyproxy/protoc-gen-validate v0.9.1 h1:PS7VIOgmSVhWUEeZwTe7z7zouA22Cr590PzXKbZHOVY=
github.com/fatih/color v1.10.0/go.mod h1:ELkj/draVOlAH/xkhN6mQ50Qd0MPOk5AAr3maGEBuJM=
github.com/fatih/color v1.13.0 h1:8LOYc1KYPPmyKMuN8QV2DNRWNbLo6LZ0iLs8+mlH53w=
github.com/fatih/color v1.13.0/go.mod h1:kLAiJbzzSOZDVNGyDpeOxJ47H46qBXwg5ILebYFFOfk=
github.com/fatih/color v1.14.0 h1:AD//feEuOKJSzxN81txMW47CNX1EW6kMVEPt4lePhtE=
github.com/fatih/color v1.14.0/go.mod h1:Ywr2WOhTEN4nsWMWU8I8GWIG5z8rhJEa0ukvJDOfSPY=
github.com/felixge/httpsnoop v1.0.3 h1:s/nj+GCswXYzN5v2DpNMuMQYe+0DDwt5WVCU6CWBdXk=
github.com/felixge/httpsnoop v1.0.3/go.mod h1:m8KPJKqk1gH5J9DgRY2ASl2lWCfGKXixSwevea8zH2U=
github.com/fsnotify/fsnotify v1.6.0 h1:n+5WquG0fcWoWp6xPWfHdbskMCQaFnG6PfBrh1Ky4HY=
@@ -314,8 +314,8 @@ github.com/kisielk/errcheck v1.5.0/go.mod h1:pFxgyoBC7bSaBwPgfKdkLd5X25qrDl4LWUI
github.com/kisielk/gotool v1.0.0/go.mod h1:XhKaO+MFFWcvkIS/tQcRk01m1F5IRFswLeQ+oQHNcck=
github.com/klauspost/compress v1.13.4/go.mod h1:8dP1Hq4DHOhN9w426knH3Rhby4rFm6D8eO+e+Dq5Gzg=
github.com/klauspost/compress v1.13.5/go.mod h1:/3/Vjq9QcHkK5uEr5lBEmyoZ1iFhe47etQ6QUkpK6sk=
github.com/klauspost/compress v1.15.14 h1:i7WCKDToww0wA+9qrUZ1xOjp218vfFo3nTU6UHp+gOc=
github.com/klauspost/compress v1.15.14/go.mod h1:QPwzmACJjUTFsnSHH934V6woptycfrDDJnH7hvFVbGM=
github.com/klauspost/compress v1.15.15 h1:EF27CXIuDsYJ6mmvtBRlEuB2UVOqHG1tAXgZ7yIO+lw=
github.com/klauspost/compress v1.15.15/go.mod h1:ZcK2JAFqKOpnBlxcLsJzYfrS9X1akm9fHZNnD9+Vo/4=
github.com/kolo/xmlrpc v0.0.0-20220921171641-a4b6fa1dd06b h1:udzkj9S/zlT5X367kqJis0QP7YMxobob6zhzq6Yre00=
github.com/konsorten/go-windows-terminal-sequences v1.0.1/go.mod h1:T0+1ngSBFLxvqU3pZ+m/2kptfBszLMUkC4ZK/EgS/cQ=
github.com/konsorten/go-windows-terminal-sequences v1.0.3/go.mod h1:T0+1ngSBFLxvqU3pZ+m/2kptfBszLMUkC4ZK/EgS/cQ=
@@ -329,11 +329,9 @@ github.com/kylelemons/godebug v1.1.0 h1:RPNrshWIDI6G2gRW9EHilWtl7Z6Sb1BR0xunSBf0
github.com/linode/linodego v1.9.3 h1:+lxNZw4avRxhCqGjwfPgQ2PvMT+vOL0OMsTdzixR7hQ=
github.com/mailru/easyjson v0.7.7 h1:UGYAvKxe3sBsEDzO8ZeWOSlIQfWFlxbzLZe7hwFURr0=
github.com/mattn/go-colorable v0.1.8/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=
github.com/mattn/go-colorable v0.1.9/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=
github.com/mattn/go-colorable v0.1.13 h1:fFA4WZxdEF4tXPZVKMLwD8oUnCTTo08duU7wxecdEvA=
github.com/mattn/go-colorable v0.1.13/go.mod h1:7S9/ev0klgBDR4GtXTXX8a3vIGJpMovkB8vQcUbaXHg=
github.com/mattn/go-isatty v0.0.12/go.mod h1:cbi8OIDigv2wuxKPP5vlRcQ1OAZbq2CE4Kysco4FUpU=
github.com/mattn/go-isatty v0.0.14/go.mod h1:7GGIvUiUoEMVVmxf/4nioHXj79iQHKdU27kJ6hsGG94=
github.com/mattn/go-isatty v0.0.16/go.mod h1:kYGgaQfpe5nmfYZH+SKPsOc2e4SrIfOl2e/yFXSvRLM=
github.com/mattn/go-isatty v0.0.17 h1:BTarxUcIeDqL27Mc+vyvdWYSL28zpIhv3RoTdsLMPng=
github.com/mattn/go-isatty v0.0.17/go.mod h1:kYGgaQfpe5nmfYZH+SKPsOc2e4SrIfOl2e/yFXSvRLM=
@@ -421,8 +419,8 @@ github.com/stretchr/testify v1.7.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/
github.com/stretchr/testify v1.8.0/go.mod h1:yNjHg4UonilssWZ8iaSj1OCr/vHnekPRkoO+kdMU+MU=
github.com/stretchr/testify v1.8.1 h1:w7B6lhMri9wdJUVmEZPGGhZzrYTPvgJArz7wNPgYKsk=
github.com/stretchr/testify v1.8.1/go.mod h1:w2LPCIKwWwSfY2zedu0+kehJoqGctiVI29o6fzry7u4=
github.com/urfave/cli/v2 v2.23.7 h1:YHDQ46s3VghFHFf1DdF+Sh7H4RqhcM+t0TmZRJx4oJY=
github.com/urfave/cli/v2 v2.23.7/go.mod h1:GHupkWPMM0M/sj1a2b4wUrWBPzazNrIjouW6fmdJLxc=
github.com/urfave/cli/v2 v2.24.1 h1:/QYYr7g0EhwXEML8jO+8OYt5trPnLHS0p3mrgExJ5NU=
github.com/urfave/cli/v2 v2.24.1/go.mod h1:GHupkWPMM0M/sj1a2b4wUrWBPzazNrIjouW6fmdJLxc=
github.com/valyala/bytebufferpool v1.0.0 h1:GqA5TC/0021Y/b9FG4Oi9Mr3q7XYx6KllzawFIhcdPw=
github.com/valyala/bytebufferpool v1.0.0/go.mod h1:6bBcMArwyJ5K/AmCkWv1jt77kVWyCJ6HpOuEn7z0Csc=
github.com/valyala/fasthttp v1.30.0/go.mod h1:2rsYD01CKFrjjsvFxx75KlEUNpWNBY9JWD3K/7o2Cus=
@@ -485,8 +483,8 @@ golang.org/x/exp v0.0.0-20191227195350-da58074b4299/go.mod h1:2RIsYlXP63K8oxa1u0
golang.org/x/exp v0.0.0-20200119233911-0405dc783f0a/go.mod h1:2RIsYlXP63K8oxa1u096TMicItID8zy7Y6sNkU49FU4=
golang.org/x/exp v0.0.0-20200207192155-f17229e696bd/go.mod h1:J/WKrq2StrnmMY6+EHIKF9dgMWnmCNThgcyBT1FY9mM=
golang.org/x/exp v0.0.0-20200224162631-6cc2880d07d6/go.mod h1:3jZMyOhIsHpP37uCMkUooju7aAi5cS1Q23tOzKc+0MU=
golang.org/x/exp v0.0.0-20230113213754-f9f960f08ad4 h1:CNkDRtCj8otM5CFz5jYvbr8ioXX8flVsLfDWEj0M5kk=
golang.org/x/exp v0.0.0-20230113213754-f9f960f08ad4/go.mod h1:CxIveKay+FTh1D0yPZemJVgC/95VzuuOLq5Qi4xnoYc=
golang.org/x/exp v0.0.0-20230118134722-a68e582fa157 h1:fiNkyhJPUvxbRPbCqY/D9qdjmPzfHcpK3P4bM4gioSY=
golang.org/x/exp v0.0.0-20230118134722-a68e582fa157/go.mod h1:CxIveKay+FTh1D0yPZemJVgC/95VzuuOLq5Qi4xnoYc=
golang.org/x/image v0.0.0-20190227222117-0694c2d4d067/go.mod h1:kZ7UVZpmo3dzQBMxlp+ypCbDeSB+sBbTgSJuh5dn5js=
golang.org/x/image v0.0.0-20190802002840-cff245a6509b/go.mod h1:FeLwcggjj3mMvU+oOTbSwawSJRM1uh48EjtB4UJZlP0=
golang.org/x/lint v0.0.0-20181026193005-c67002cb31c3/go.mod h1:UVdnD1Gm6xHRNCYTkRU2/jEulfH38KcIWyp/GAMgvoE=
@@ -607,13 +605,13 @@ golang.org/x/sys v0.0.0-20210423082822-04245dca01da/go.mod h1:h1NjWce9XRLGQEsW7w
golang.org/x/sys v0.0.0-20210514084401-e8d321eab015/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20210603081109-ebe580a85c40/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20210615035016-665e8c7367d1/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20210630005230-0f9fa26af87c/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20220405052023-b1e9470b6e64/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20220503163025-988cb79eb6c6/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20220520151302-bc2c85ada10a/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20220722155257-8c9f86f7a55f/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.0.0-20220811171246-fbc7d0a398ab/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.1.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.3.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/sys v0.4.0 h1:Zr2JFtRQNX3BCZ8YtxRE9hNJYC8J6I1MVbMg6owUp18=
golang.org/x/sys v0.4.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=
@@ -701,8 +699,8 @@ google.golang.org/api v0.24.0/go.mod h1:lIXQywCXRcnZPGlsd8NbLnOjtAoL6em04bJ9+z0M
google.golang.org/api v0.28.0/go.mod h1:lIXQywCXRcnZPGlsd8NbLnOjtAoL6em04bJ9+z0MncE=
google.golang.org/api v0.29.0/go.mod h1:Lcubydp8VUV7KeIHD9z2Bys/sm/vGKnG1UHuDBSrHWM=
google.golang.org/api v0.30.0/go.mod h1:QGmEvQ87FHZNiUVJkT14jQNYJ4ZJjdRF23ZXz5138Fc=
google.golang.org/api v0.107.0 h1:I2SlFjD8ZWabaIFOfeEDg3pf0BHJDh6iYQ1ic3Yu/UU=
google.golang.org/api v0.107.0/go.mod h1:2Ts0XTHNVWxypznxWOYUeI4g3WdP9Pk2Qk58+a/O9MY=
google.golang.org/api v0.108.0 h1:WVBc/faN0DkKtR43Q/7+tPny9ZoLZdIiAyG5Q9vFClg=
google.golang.org/api v0.108.0/go.mod h1:2Ts0XTHNVWxypznxWOYUeI4g3WdP9Pk2Qk58+a/O9MY=
google.golang.org/appengine v1.1.0/go.mod h1:EbEs0AVv82hx2wNQdGPgUI5lhzA/G0D9YwlJXL52JkM=
google.golang.org/appengine v1.4.0/go.mod h1:xpcJRLb0r/rnEns0DIKYYv+WjYCduHsrkT7/EB5XEv4=
google.golang.org/appengine v1.5.0/go.mod h1:xpcJRLb0r/rnEns0DIKYYv+WjYCduHsrkT7/EB5XEv4=
@@ -740,8 +738,8 @@ google.golang.org/genproto v0.0.0-20200618031413-b414f8b61790/go.mod h1:jDfRM7Fc
google.golang.org/genproto v0.0.0-20200729003335-053ba62fc06f/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
google.golang.org/genproto v0.0.0-20200804131852-c06518451d9c/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
google.golang.org/genproto v0.0.0-20200825200019-8632dd797987/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
google.golang.org/genproto v0.0.0-20230113154510-dbe35b8444a5 h1:wJT65XLOzhpSPCdAmmKfz94SlmnQzDzjm3Cj9k3fsXY=
google.golang.org/genproto v0.0.0-20230113154510-dbe35b8444a5/go.mod h1:RGgjbofJ8xD9Sq1VVhDM1Vok1vRONV+rg+CjzG4SZKM=
google.golang.org/genproto v0.0.0-20230119192704-9d59e20e5cd1 h1:wSjSSQW7LuPdv3m1IrSN33nVxH/kID6OIKy+FMwGB2k=
google.golang.org/genproto v0.0.0-20230119192704-9d59e20e5cd1/go.mod h1:RGgjbofJ8xD9Sq1VVhDM1Vok1vRONV+rg+CjzG4SZKM=
google.golang.org/grpc v1.19.0/go.mod h1:mqu4LbDTu4XGKhr4mRzUsmM4RtVoemTSY81AxZiDr8c=
google.golang.org/grpc v1.20.1/go.mod h1:10oTOabMzJvdu6/UiuZezV6QK5dSlG84ov/aaiqXj38=
google.golang.org/grpc v1.21.1/go.mod h1:oYelfM1adQP15Ek0mdvEgi9Df8B9CZIaU1084ijfRaM=

9 vendor/cloud.google.com/go/internal/.repo-metadata-full.json (generated, vendored)
@@ -548,6 +548,15 @@
"release_level": "beta",
"library_type": "GAPIC_AUTO"
},
"cloud.google.com/go/datacatalog/lineage/apiv1": {
"distribution_name": "cloud.google.com/go/datacatalog/lineage/apiv1",
"description": "Data Lineage API",
"language": "Go",
"client_library_type": "generated",
"docs_url": "https://cloud.google.com/go/docs/reference/cloud.google.com/go/datacatalog/latest/lineage/apiv1",
"release_level": "beta",
"library_type": "GAPIC_AUTO"
},
"cloud.google.com/go/dataflow/apiv1beta3": {
"distribution_name": "cloud.google.com/go/dataflow/apiv1beta3",
"description": "Dataflow API",

8 vendor/cloud.google.com/go/internal/README.md (generated, vendored)
@@ -16,3 +16,11 @@ each package, which is the pattern followed by some other languages. External
tools would then talk to pkg.go.dev or some other service to get the overall
list of packages and use the `.repo-metadata.json` files to get the additional
metadata required. For now, `.repo-metadata-full.json` includes everything.

## cloudbuild.yaml

To kick off a build locally run from the repo root:

```bash
gcloud builds submit --project=cloud-devrel-kokoro-resources --config=internal/cloudbuild.yaml
```
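The README hunk notes that `.repo-metadata-full.json` currently carries the metadata for every package. The metadata hunk in this commit adds one such entry, for `cloud.google.com/go/datacatalog/lineage/apiv1`. The following is a minimal sketch of decoding that shape with `encoding/json`. It assumes the file is a single JSON object keyed by import path, which is inferred from the fragment in the diff rather than from a published schema. The type `repoMetadata` and the function `parseRepoMetadata` are hypothetical; the JSON keys are the ones visible in the hunk.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// repoMetadata mirrors the keys visible in the .repo-metadata-full.json hunk.
// The type name is hypothetical; the JSON tags come from the diff.
type repoMetadata struct {
	DistributionName  string `json:"distribution_name"`
	Description       string `json:"description"`
	Language          string `json:"language"`
	ClientLibraryType string `json:"client_library_type"`
	DocsURL           string `json:"docs_url"`
	ReleaseLevel      string `json:"release_level"`
	LibraryType       string `json:"library_type"`
}

// parseRepoMetadata decodes an object keyed by package import path
// (an assumption about the file's top-level layout).
func parseRepoMetadata(data []byte) (map[string]repoMetadata, error) {
	var m map[string]repoMetadata
	if err := json.Unmarshal(data, &m); err != nil {
		return nil, err
	}
	return m, nil
}

// sample reuses the entry added in this commit.
const sample = `{
  "cloud.google.com/go/datacatalog/lineage/apiv1": {
    "distribution_name": "cloud.google.com/go/datacatalog/lineage/apiv1",
    "description": "Data Lineage API",
    "language": "Go",
    "client_library_type": "generated",
    "docs_url": "https://cloud.google.com/go/docs/reference/cloud.google.com/go/datacatalog/latest/lineage/apiv1",
    "release_level": "beta",
    "library_type": "GAPIC_AUTO"
  }
}`

func main() {
	m, err := parseRepoMetadata([]byte(sample))
	if err != nil {
		panic(err)
	}
	for path, md := range m {
		fmt.Printf("%s: %s (%s)\n", path, md.Description, md.ReleaseLevel)
	}
}
```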

25 vendor/cloud.google.com/go/internal/cloudbuild.yaml (generated, vendored, Normal file)
@@ -0,0 +1,25 @@
# Copyright 2023 Google LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# note: /workspace is a special directory in the docker image where all the files in this folder
# get placed on your behalf

timeout: 7200s # 2 hours
steps:
- name: gcr.io/cloud-builders/docker
  args: ['build', '-t', 'gcr.io/cloud-devrel-public-resources/owlbot-go', '-f', 'postprocessor/Dockerfile', '.']
  dir: internal

images:
- gcr.io/cloud-devrel-public-resources/owlbot-go:latest

13 vendor/cloud.google.com/go/storage/CHANGES.md (generated, vendored)
@@ -1,6 +1,19 @@
# Changes


## [1.29.0](https://github.com/googleapis/google-cloud-go/compare/storage/v1.28.1...storage/v1.29.0) (2023-01-19)


### Features

* **storage:** Add ComponentCount as part of ObjectAttrs ([#7230](https://github.com/googleapis/google-cloud-go/issues/7230)) ([a19bca6](https://github.com/googleapis/google-cloud-go/commit/a19bca60704b4fbb674cf51d828580aa653c8210))
* **storage:** Add REST client ([06a54a1](https://github.com/googleapis/google-cloud-go/commit/06a54a16a5866cce966547c51e203b9e09a25bc0))


### Documentation

* **storage/internal:** Corrected typos and spellings ([7357077](https://github.com/googleapis/google-cloud-go/commit/735707796d81d7f6f32fc3415800c512fe62297e))

## [1.28.1](https://github.com/googleapis/google-cloud-go/compare/storage/v1.28.0...storage/v1.28.1) (2022-12-02)

9 vendor/cloud.google.com/go/storage/bucket.go (generated, vendored)
@@ -35,6 +35,7 @@ import (
raw "google.golang.org/api/storage/v1"
dpb "google.golang.org/genproto/googleapis/type/date"
"google.golang.org/protobuf/proto"
"google.golang.org/protobuf/types/known/durationpb"
)

// BucketHandle provides operations on a Google Cloud Storage bucket.
@@ -1389,12 +1390,12 @@ func (rp *RetentionPolicy) toProtoRetentionPolicy() *storagepb.Bucket_RetentionP
}
// RetentionPeriod must be greater than 0, so if it is 0, the user left it
// unset, and so we should not send it in the request i.e. nil is sent.
var period *int64
var dur *durationpb.Duration
if rp.RetentionPeriod != 0 {
period = proto.Int64(int64(rp.RetentionPeriod / time.Second))
dur = durationpb.New(rp.RetentionPeriod)
}
return &storagepb.Bucket_RetentionPolicy{
RetentionPeriod: period,
RetentionDuration: dur,
}
}

@@ -1418,7 +1419,7 @@ func toRetentionPolicyFromProto(rp *storagepb.Bucket_RetentionPolicy) *Retention
return nil
}
return &RetentionPolicy{
RetentionPeriod: time.Duration(rp.GetRetentionPeriod()) * time.Second,
RetentionPeriod: rp.GetRetentionDuration().AsDuration(),
EffectiveTime: rp.GetEffectiveTime().AsTime(),
IsLocked: rp.GetIsLocked(),
}

30 vendor/cloud.google.com/go/storage/grpc_client.go (generated, vendored)
@@ -34,7 +34,6 @@ import (
"google.golang.org/grpc/codes"
"google.golang.org/grpc/metadata"
"google.golang.org/grpc/status"
"google.golang.org/protobuf/proto"
fieldmaskpb "google.golang.org/protobuf/types/known/fieldmaskpb"
)

@@ -1496,11 +1495,16 @@ func (w *gRPCWriter) startResumableUpload() error {
if err != nil {
return err
}
return run(w.ctx, func() error {
upres, err := w.c.raw.StartResumableWrite(w.ctx, &storagepb.StartResumableWriteRequest{
req := &storagepb.StartResumableWriteRequest{
WriteObjectSpec: spec,
CommonObjectRequestParams: toProtoCommonObjectRequestParams(w.encryptionKey),
})
}
// TODO: Currently the checksums are only sent on the request to initialize
// the upload, but in the future, we must also support sending it
// on the *last* message of the stream.
req.ObjectChecksums = toProtoChecksums(w.sendCRC32C, w.attrs)
return run(w.ctx, func() error {
upres, err := w.c.raw.StartResumableWrite(w.ctx, req)
w.upid = upres.GetUploadId()
return err
}, w.settings.retry, w.settings.idempotent, setRetryHeaderGRPC(w.ctx))
@@ -1585,25 +1589,13 @@ func (w *gRPCWriter) uploadBuffer(recvd int, start int64, doneReading bool) (*st
WriteObjectSpec: spec,
}
req.CommonObjectRequestParams = toProtoCommonObjectRequestParams(w.encryptionKey)
}

// For a non-resumable upload, checksums must be sent in this message.
// TODO: Currently the checksums are only sent on the first message
// of the stream, but in the future, we must also support sending it
// on the *last* message of the stream (instead of the first).
if w.sendCRC32C {
req.ObjectChecksums = &storagepb.ObjectChecksums{
Crc32C: proto.Uint32(w.attrs.CRC32C),
}
}
if len(w.attrs.MD5) != 0 {
if cs := req.GetObjectChecksums(); cs == nil {
req.ObjectChecksums = &storagepb.ObjectChecksums{
Md5Hash: w.attrs.MD5,
}
} else {
cs.Md5Hash = w.attrs.MD5
}
req.ObjectChecksums = toProtoChecksums(w.sendCRC32C, w.attrs)
}

}

err = w.stream.Send(req)

10 vendor/cloud.google.com/go/storage/internal/apiv2/doc.go (generated, vendored)
@@ -1,4 +1,4 @@
// Copyright 2022 Google LLC
// Copyright 2023 Google LLC
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
@@ -21,6 +21,11 @@
//
// NOTE: This package is in alpha. It is not stable, and is likely to change.
//
// # General documentation
//
// For information about setting deadlines, reusing contexts, and more
// please visit https://pkg.go.dev/cloud.google.com/go.
//
// # Example usage
//
// To get started with this package, create a client.
@@ -73,9 +78,6 @@
// Individual methods on the client use the ctx given to them.
//
// To close the open connection, use the Close() method.
//
// For information about setting deadlines, reusing contexts, and more
// please visit https://pkg.go.dev/cloud.google.com/go.
package storage // import "cloud.google.com/go/storage/internal/apiv2"

import (

12 vendor/cloud.google.com/go/storage/internal/apiv2/storage_client.go (generated, vendored)
@@ -1,4 +1,4 @@
// Copyright 2022 Google LLC
// Copyright 2023 Google LLC
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
@@ -238,17 +238,26 @@ func (c *Client) LockBucketRetentionPolicy(ctx context.Context, req *storagepb.L
}

// GetIamPolicy gets the IAM policy for a specified bucket or object.
// The resource field in the request should be
// projects//buckets/<bucket_name> for a bucket or
// projects//buckets/<bucket_name>/objects/<object_name> for an object.
func (c *Client) GetIamPolicy(ctx context.Context, req *iampb.GetIamPolicyRequest, opts ...gax.CallOption) (*iampb.Policy, error) {
return c.internalClient.GetIamPolicy(ctx, req, opts...)
}

// SetIamPolicy updates an IAM policy for the specified bucket or object.
// The resource field in the request should be
// projects//buckets/<bucket_name> for a bucket or
// projects//buckets/<bucket_name>/objects/<object_name> for an object.
func (c *Client) SetIamPolicy(ctx context.Context, req *iampb.SetIamPolicyRequest, opts ...gax.CallOption) (*iampb.Policy, error) {
return c.internalClient.SetIamPolicy(ctx, req, opts...)
}

// TestIamPermissions tests a set of permissions on the given bucket or object to see which, if
// any, are held by the caller.
// The resource field in the request should be
// projects//buckets/<bucket_name> for a bucket or
// projects//buckets/<bucket_name>/objects/<object_name> for an object.
func (c *Client) TestIamPermissions(ctx context.Context, req *iampb.TestIamPermissionsRequest, opts ...gax.CallOption) (*iampb.TestIamPermissionsResponse, error) {
return c.internalClient.TestIamPermissions(ctx, req, opts...)
}
@@ -1048,6 +1057,7 @@ func (c *gRPCClient) ReadObject(ctx context.Context, req *storagepb.ReadObjectRe
md := metadata.Pairs("x-goog-request-params", routingHeaders)

ctx = insertMetadata(ctx, c.xGoogMetadata, md)
opts = append((*c.CallOptions).ReadObject[0:len((*c.CallOptions).ReadObject):len((*c.CallOptions).ReadObject)], opts...)
var resp storagepb.Storage_ReadObjectClient
err := gax.Invoke(ctx, func(ctx context.Context, settings gax.CallSettings) error {
var err error

3212 vendor/cloud.google.com/go/storage/internal/apiv2/stubs/storage.pb.go (generated, vendored)
File diff suppressed because it is too large

2 vendor/cloud.google.com/go/storage/internal/apiv2/version.go (generated, vendored)
@@ -1,4 +1,4 @@
// Copyright 2022 Google LLC
// Copyright 2023 Google LLC
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.

2 vendor/cloud.google.com/go/storage/internal/version.go (generated, vendored)
@@ -15,4 +15,4 @@
package internal

// Version is the current tagged release of the library.
const Version = "1.28.1"
const Version = "1.29.0"

28

vendor/cloud.google.com/go/storage/storage.go

generated

vendored

28

vendor/cloud.google.com/go/storage/storage.go

generated

vendored

|

|

@@ -1315,6 +1315,11 @@ type ObjectAttrs struct {
// later value but not to an earlier one. For more information see
// https://cloud.google.com/storage/docs/metadata#custom-time .
CustomTime time.Time

// ComponentCount is the number of objects contained within a composite object.
// For non-composite objects, the value will be zero.
// This field is read-only.
ComponentCount int64
}

// convertTime converts a time in RFC3339 format to time.Time.
@@ -1385,6 +1390,7 @@ func newObject(o *raw.Object) *ObjectAttrs {
Updated: convertTime(o.Updated),
Etag: o.Etag,
CustomTime: convertTime(o.CustomTime),
ComponentCount: o.ComponentCount,
}
}

@@ -1419,6 +1425,7 @@ func newObjectFromProto(o *storagepb.Object) *ObjectAttrs {
Deleted: convertProtoTime(o.GetDeleteTime()),
Updated: convertProtoTime(o.GetUpdateTime()),
CustomTime: convertProtoTime(o.GetCustomTime()),
ComponentCount: int64(o.ComponentCount),
}
}

@@ -1547,6 +1554,7 @@ var attrToFieldMap = map[string]string{
"Updated": "updated",
"Etag": "etag",
"CustomTime": "customTime",
"ComponentCount": "componentCount",
}

// attrToProtoFieldMap maps the field names of ObjectAttrs to the underlying field
@@ -1578,6 +1586,7 @@ var attrToProtoFieldMap = map[string]string{
"Owner": "owner",
"CustomerKeySHA256": "customer_encryption",
"CustomTime": "custom_time",
"ComponentCount": "component_count",
// MediaLink was explicitly excluded from the proto as it is an HTTP-ism.
// "MediaLink": "mediaLink",
}
@@ -2079,6 +2088,25 @@ func toProtoCommonObjectRequestParams(key []byte) *storagepb.CommonObjectRequest
}
}

func toProtoChecksums(sendCRC32C bool, attrs *ObjectAttrs) *storagepb.ObjectChecksums {
var checksums *storagepb.ObjectChecksums
if sendCRC32C {
checksums = &storagepb.ObjectChecksums{
Crc32C: proto.Uint32(attrs.CRC32C),
}
}
if len(attrs.MD5) != 0 {
if checksums == nil {
checksums = &storagepb.ObjectChecksums{
Md5Hash: attrs.MD5,
}
} else {
checksums.Md5Hash = attrs.MD5
}
}
return checksums
}

// ServiceAccount fetches the email address of the given project's Google Cloud Storage service account.
func (c *Client) ServiceAccount(ctx context.Context, projectID string) (string, error) {
o := makeStorageOpts(true, c.retry, "")
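
The `toProtoChecksums` helper added to storage.go returns `nil` unless a CRC32C should be sent or an MD5 hash is present, and otherwise fills in whichever fields apply. The following is a stdlib-only sketch of that control flow. `objectChecksums`, `objectAttrs`, `uint32Ptr` and `toChecksums` are hypothetical stand-ins for `storagepb.ObjectChecksums`, `ObjectAttrs`, `proto.Uint32` and the real helper. They are not the library's types.

```go
package main

import "fmt"

// objectChecksums is a hypothetical stand-in for storagepb.ObjectChecksums.
type objectChecksums struct {
	Crc32C  *uint32
	Md5Hash []byte
}

// objectAttrs is a hypothetical stand-in holding only the checksum fields of ObjectAttrs.
type objectAttrs struct {
	CRC32C uint32
	MD5    []byte
}

// uint32Ptr plays the role of proto.Uint32: it returns a pointer to a copy of v.
func uint32Ptr(v uint32) *uint32 { return &v }

// toChecksums mirrors the control flow of toProtoChecksums: the result stays nil
// unless a CRC32C should be sent or an MD5 hash is present.
func toChecksums(sendCRC32C bool, attrs *objectAttrs) *objectChecksums {
	var checksums *objectChecksums
	if sendCRC32C {
		checksums = &objectChecksums{Crc32C: uint32Ptr(attrs.CRC32C)}
	}
	if len(attrs.MD5) != 0 {
		if checksums == nil {
			checksums = &objectChecksums{Md5Hash: attrs.MD5}
		} else {
			checksums.Md5Hash = attrs.MD5
		}
	}
	return checksums
}

func main() {
	attrs := &objectAttrs{CRC32C: 0xdeadbeef, MD5: []byte{1, 2, 3}}
	cs := toChecksums(true, attrs)
	fmt.Printf("crc32c=%#x md5=%v\n", *cs.Crc32C, cs.Md5Hash)
	fmt.Println("nothing to send:", toChecksums(false, &objectAttrs{}) == nil)
}
```

Returning a nil pointer rather than an empty message lets a caller omit the checksum field entirely when there is nothing to validate.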

149

vendor/github.com/aws/aws-sdk-go/aws/endpoints/defaults.go

generated

vendored

@@ -1733,9 +1733,15 @@ var awsPartition = partition{
},
"api.mediatailor": service{
Endpoints: serviceEndpoints{
endpointKey{
Region: "af-south-1",
}: endpoint{},
endpointKey{
Region: "ap-northeast-1",
}: endpoint{},
endpointKey{
Region: "ap-south-1",
}: endpoint{},
endpointKey{
Region: "ap-southeast-1",
}: endpoint{},
@@ -1751,6 +1757,9 @@ var awsPartition = partition{
endpointKey{
Region: "us-east-1",
}: endpoint{},
endpointKey{
Region: "us-east-2",
}: endpoint{},
endpointKey{
Region: "us-west-2",
}: endpoint{},
@@ -3716,6 +3725,9 @@ var awsPartition = partition{
endpointKey{
Region: "eu-west-3",
}: endpoint{},
endpointKey{
Region: "me-central-1",
}: endpoint{},
endpointKey{
Region: "me-south-1",
}: endpoint{},
@@ -4951,6 +4963,9 @@ var awsPartition = partition{
endpointKey{
Region: "eu-west-3",
}: endpoint{},
endpointKey{
Region: "me-central-1",
}: endpoint{},
endpointKey{
Region: "me-south-1",
}: endpoint{},
@@ -8330,6 +8345,9 @@ var awsPartition = partition{
endpointKey{
Region: "ap-south-1",
}: endpoint{},
endpointKey{
Region: "ap-south-2",
}: endpoint{},
endpointKey{
Region: "ap-southeast-1",
}: endpoint{},
@@ -8351,12 +8369,18 @@ var awsPartition = partition{
endpointKey{
Region: "eu-central-1",
}: endpoint{},
endpointKey{
Region: "eu-central-2",
}: endpoint{},
endpointKey{
Region: "eu-north-1",
}: endpoint{},
endpointKey{
Region: "eu-south-1",
}: endpoint{},
endpointKey{
Region: "eu-south-2",
}: endpoint{},
endpointKey{
Region: "eu-west-1",
}: endpoint{},
@@ -9373,6 +9397,15 @@ var awsPartition = partition{
}: endpoint{
Hostname: "elasticfilesystem-fips.eu-south-1.amazonaws.com",
},
endpointKey{
Region: "eu-south-2",
}: endpoint{},
endpointKey{
Region: "eu-south-2",
Variant: fipsVariant,
}: endpoint{
Hostname: "elasticfilesystem-fips.eu-south-2.amazonaws.com",
},
endpointKey{
Region: "eu-west-1",
}: endpoint{},
@@ -9526,6 +9559,15 @@ var awsPartition = partition{
},
Deprecated: boxedTrue,
},
endpointKey{
Region: "fips-eu-south-2",
}: endpoint{
Hostname: "elasticfilesystem-fips.eu-south-2.amazonaws.com",
CredentialScope: credentialScope{
Region: "eu-south-2",
},
Deprecated: boxedTrue,
},
endpointKey{
Region: "fips-eu-west-1",
}: endpoint{
@@ -15965,6 +16007,9 @@ var awsPartition = partition{
endpointKey{
Region: "ap-northeast-1",
}: endpoint{},
endpointKey{
Region: "ap-northeast-2",
}: endpoint{},
endpointKey{
Region: "ap-south-1",
}: endpoint{},
@@ -16005,6 +16050,18 @@ var awsPartition = partition{

Deprecated: boxedTrue,
},
endpointKey{
Region: "fips-us-east-2",
}: endpoint{

Deprecated: boxedTrue,
},
endpointKey{
Region: "fips-us-west-1",
}: endpoint{

Deprecated: boxedTrue,
},
endpointKey{
Region: "fips-us-west-2",
}: endpoint{
@@ -16021,6 +16078,20 @@ var awsPartition = partition{
Region: "us-east-1",
Variant: fipsVariant,
}: endpoint{},
endpointKey{
Region: "us-east-2",
}: endpoint{},
endpointKey{
Region: "us-east-2",
Variant: fipsVariant,
}: endpoint{},
endpointKey{
Region: "us-west-1",
}: endpoint{},
endpointKey{
Region: "us-west-1",
Variant: fipsVariant,
}: endpoint{},
endpointKey{
Region: "us-west-2",
}: endpoint{},
@@ -23202,6 +23273,9 @@ var awsPartition = partition{
},
Deprecated: boxedTrue,
},
endpointKey{
Region: "me-central-1",
}: endpoint{},
endpointKey{
Region: "me-south-1",
}: endpoint{},
@@ -24831,12 +24905,72 @@ var awsPartition = partition{
},
"ssm-sap": service{
Endpoints: serviceEndpoints{
endpointKey{
Region: "af-south-1",
}: endpoint{},
endpointKey{
Region: "ap-east-1",
}: endpoint{},
endpointKey{
Region: "ap-northeast-1",
}: endpoint{},
endpointKey{
Region: "ap-northeast-2",
}: endpoint{},
endpointKey{
Region: "ap-northeast-3",
}: endpoint{},
endpointKey{
Region: "ap-south-1",
}: endpoint{},
endpointKey{
Region: "ap-southeast-1",
}: endpoint{},
endpointKey{
Region: "ap-southeast-2",
}: endpoint{},
endpointKey{
Region: "ap-southeast-3",
}: endpoint{},
endpointKey{
Region: "ca-central-1",
}: endpoint{},
endpointKey{
Region: "eu-central-1",
}: endpoint{},
endpointKey{
Region: "eu-north-1",
}: endpoint{},
endpointKey{
Region: "eu-south-1",
}: endpoint{},
endpointKey{
Region: "eu-west-1",
}: endpoint{},
endpointKey{
Region: "eu-west-2",
}: endpoint{},
endpointKey{
Region: "eu-west-3",
}: endpoint{},
endpointKey{
Region: "me-south-1",
}: endpoint{},
endpointKey{
Region: "sa-east-1",
}: endpoint{},
endpointKey{
Region: "us-east-1",
}: endpoint{},
endpointKey{
Region: "us-east-2",
}: endpoint{},
endpointKey{
Region: "us-west-1",
}: endpoint{},
endpointKey{
Region: "us-west-2",
}: endpoint{},
},
},
"sso": service{
@@ -31472,9 +31606,24 @@ var awsusgovPartition = partition{
},
"databrew": service{
Endpoints: serviceEndpoints{
endpointKey{
Region: "fips-us-gov-west-1",
}: endpoint{
Hostname: "databrew.us-gov-west-1.amazonaws.com",
CredentialScope: credentialScope{
Region: "us-gov-west-1",
},
Deprecated: boxedTrue,
},
endpointKey{
Region: "us-gov-west-1",
}: endpoint{},
endpointKey{
Region: "us-gov-west-1",
Variant: fipsVariant,
}: endpoint{
Hostname: "databrew.us-gov-west-1.amazonaws.com",
},
},
},
"datasync": service{
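
Every hunk in `defaults.go` adds entries to a `serviceEndpoints` map keyed by `endpointKey{Region, Variant}`. An empty `endpoint{}` carries no override, while some FIPS entries pin a `Hostname`. The sketch below is a deliberately simplified, hypothetical model of that lookup and not the aws-sdk-go resolver. The types are local re-declarations, and the fallback hostname templates in `resolve` are assumptions made for illustration.

```go
package main

import "fmt"

// variant distinguishes endpoint flavours such as FIPS (hypothetical, simplified).
type variant int

const (
	defaultVariant variant = iota
	fipsVariant
)

// endpointKey identifies an endpoint by region and variant.
type endpointKey struct {
	Region  string
	Variant variant
}

// endpoint carries an optional hostname override; empty means "use the template".
type endpoint struct {
	Hostname string
}

// resolve looks up (region, variant) for a service; a missing key reports false.
// An entry without a Hostname falls back to an ASSUMED template:
// "{service}.{region}.amazonaws.com" or "{service}-fips.{region}.amazonaws.com".
func resolve(service string, eps map[endpointKey]endpoint, region string, v variant) (string, bool) {
	ep, ok := eps[endpointKey{Region: region, Variant: v}]
	if !ok {
		return "", false
	}
	if ep.Hostname != "" {
		return ep.Hostname, true
	}
	if v == fipsVariant {
		return service + "-fips." + region + ".amazonaws.com", true
	}
	return service + "." + region + ".amazonaws.com", true
}

func main() {
	// Entries modelled on the "databrew" block added to awsusgovPartition.
	databrew := map[endpointKey]endpoint{
		{Region: "us-gov-west-1"}:                        {},
		{Region: "us-gov-west-1", Variant: fipsVariant}: {Hostname: "databrew.us-gov-west-1.amazonaws.com"},
	}
	for _, v := range []variant{defaultVariant, fipsVariant} {
		host, ok := resolve("databrew", databrew, "us-gov-west-1", v)
		fmt.Println(v, host, ok)
	}
	_, ok := resolve("databrew", databrew, "eu-west-1", defaultVariant)
	fmt.Println("eu-west-1 known:", ok)
}
```

The model explains why most added lines are bare `endpoint{}` values. Registering a region is enough to make it resolvable, and explicit hostnames are only needed where the default pattern does not apply.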

2

vendor/github.com/aws/aws-sdk-go/aws/version.go

generated

vendored

@@ -5,4 +5,4 @@ package aws
const SDKName = "aws-sdk-go"

// SDKVersion is the version of this SDK
const SDKVersion = "1.44.180"
const SDKVersion = "1.44.184"

12

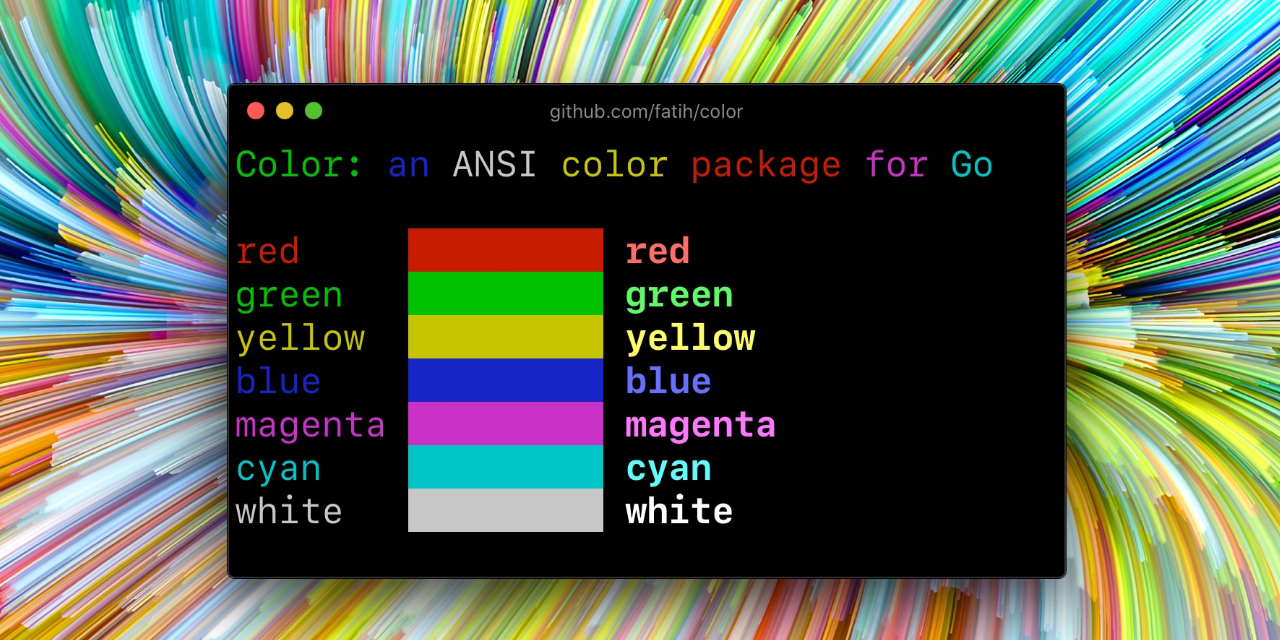

vendor/github.com/fatih/color/README.md

generated

vendored

@@ -7,7 +7,6 @@ suits you.




## Install

```bash
@@ -130,11 +129,11 @@ There might be a case where you want to explicitly disable/enable color output.
(for example if the output were piped directly to `less`).

The `color` package also disables color output if the [`NO_COLOR`](https://no-color.org) environment
variable is set (regardless of its value).
variable is set to a non-empty string.

`Color` has support to disable/enable colors programatically both globally and
`Color` has support to disable/enable colors programmatically both globally and
for single color definitions. For example suppose you have a CLI app and a
`--no-color` bool flag. You can easily disable the color output with:
`-no-color` bool flag. You can easily disable the color output with:

```go
var flagNoColor = flag.Bool("no-color", false, "Disable color output")
@@ -167,11 +166,10 @@ To output color in GitHub Actions (or other CI systems that support ANSI colors)
* Save/Return previous values
* Evaluate fmt.Formatter interface


## Credits

* [Fatih Arslan](https://github.com/fatih)
* Windows support via @mattn: [colorable](https://github.com/mattn/go-colorable)
* [Fatih Arslan](https://github.com/fatih)
* Windows support via @mattn: [colorable](https://github.com/mattn/go-colorable)

## License


46

vendor/github.com/fatih/color/color.go

generated

vendored

@@ -19,10 +19,10 @@ var (
// set (regardless of its value). This is a global option and affects all
// colors. For more control over each color block use the methods
// DisableColor() individually.
NoColor = noColorExists() || os.Getenv("TERM") == "dumb" ||
NoColor = noColorIsSet() || os.Getenv("TERM") == "dumb" ||
(!isatty.IsTerminal(os.Stdout.Fd()) && !isatty.IsCygwinTerminal(os.Stdout.Fd()))

// Output defines the standard output of the print functions. By default
// Output defines the standard output of the print functions. By default,
// os.Stdout is used.
Output = colorable.NewColorableStdout()

@@ -35,10 +35,9 @@ var (
colorsCacheMu sync.Mutex // protects colorsCache
)

// noColorExists returns true if the environment variable NO_COLOR exists.
func noColorExists() bool {
_, exists := os.LookupEnv("NO_COLOR")
return exists
// noColorIsSet returns true if the environment variable NO_COLOR is set to a non-empty string.
func noColorIsSet() bool {
return os.Getenv("NO_COLOR") != ""
}

// Color defines a custom color object which is defined by SGR parameters.
@@ -120,7 +119,7 @@ func New(value ...Attribute) *Color {
params: make([]Attribute, 0),
}

if noColorExists() {
if noColorIsSet() {
c.noColor = boolPtr(true)
}

@@ -152,7 +151,7 @@ func (c *Color) Set() *Color {
return c
}

fmt.Fprintf(Output, c.format())
fmt.Fprint(Output, c.format())
return c
}

@@ -164,16 +163,21 @@ func (c *Color) unset() {
Unset()
}

func (c *Color) setWriter(w io.Writer) *Color {
// SetWriter is used to set the SGR sequence with the given io.Writer. This is
// a low-level function, and users should use the higher-level functions, such
// as color.Fprint, color.Print, etc.
func (c *Color) SetWriter(w io.Writer) *Color {
if c.isNoColorSet() {
return c
}

fmt.Fprintf(w, c.format())
fmt.Fprint(w, c.format())
return c
}

func (c *Color) unsetWriter(w io.Writer) {
// UnsetWriter resets all escape attributes and clears the output with the give
// io.Writer. Usually should be called after SetWriter().
func (c *Color) UnsetWriter(w io.Writer) {
if c.isNoColorSet() {
return
}
@@ -192,20 +196,14 @@ func (c *Color) Add(value ...Attribute) *Color {
return c
}

func (c *Color) prepend(value Attribute) {
c.params = append(c.params, 0)
copy(c.params[1:], c.params[0:])
c.params[0] = value
}

// Fprint formats using the default formats for its operands and writes to w.
// Spaces are added between operands when neither is a string.
// It returns the number of bytes written and any write error encountered.
// On Windows, users should wrap w with colorable.NewColorable() if w is of
// type *os.File.
func (c *Color) Fprint(w io.Writer, a ...interface{}) (n int, err error) {
c.setWriter(w)
defer c.unsetWriter(w)
c.SetWriter(w)
defer c.UnsetWriter(w)

return fmt.Fprint(w, a...)
}
@@ -227,8 +225,8 @@ func (c *Color) Print(a ...interface{}) (n int, err error) {
// On Windows, users should wrap w with colorable.NewColorable() if w is of
// type *os.File.
func (c *Color) Fprintf(w io.Writer, format string, a ...interface{}) (n int, err error) {
c.setWriter(w)
defer c.unsetWriter(w)
c.SetWriter(w)
defer c.UnsetWriter(w)

return fmt.Fprintf(w, format, a...)
}
@@ -248,8 +246,8 @@ func (c *Color) Printf(format string, a ...interface{}) (n int, err error) {
// On Windows, users should wrap w with colorable.NewColorable() if w is of
// type *os.File.
func (c *Color) Fprintln(w io.Writer, a ...interface{}) (n int, err error) {
c.setWriter(w)
defer c.unsetWriter(w)
c.SetWriter(w)
defer c.UnsetWriter(w)

return fmt.Fprintln(w, a...)
}
@@ -396,7 +394,7 @@ func (c *Color) DisableColor() {
}

// EnableColor enables the color output. Use it in conjunction with
// DisableColor(). Otherwise this method has no side effects.
// DisableColor(). Otherwise, this method has no side effects.
func (c *Color) EnableColor() {
c.noColor = boolPtr(false)
}

5

vendor/github.com/fatih/color/doc.go

generated

vendored

@@ -20,7 +20,7 @@ Use simple and default helper functions with predefined foreground colors:
color.HiBlack("Bright black means gray..")
color.HiWhite("Shiny white color!")

However there are times where custom color mixes are required. Below are some
However, there are times when custom color mixes are required. Below are some
examples to create custom color objects and use the print functions of each
separate color object.

@@ -68,7 +68,6 @@ You can also FprintXxx functions to pass your own io.Writer:
success := color.New(color.Bold, color.FgGreen).FprintlnFunc()
success(myWriter, don't forget this...")


Or create SprintXxx functions to mix strings with other non-colorized strings:

yellow := New(FgYellow).SprintFunc()
@@ -80,7 +79,7 @@ Or create SprintXxx functions to mix strings with other non-colorized strings:
fmt.Printf("this %s rocks!\n", info("package"))

Windows support is enabled by default. All Print functions work as intended.
However only for color.SprintXXX functions, user should use fmt.FprintXXX and
However, only for color.SprintXXX functions, user should use fmt.FprintXXX and
set the output to color.Output:

fmt.Fprintf(color.Output, "Windows support: %s", color.GreenString("PASS"))

7

vendor/github.com/klauspost/compress/README.md

generated

vendored

@@ -16,6 +16,13 @@ This package provides various compression algorithms.

# changelog

* Jan 3rd, 2023 (v1.15.14)

* flate: Improve speed in big stateless blocks https://github.com/klauspost/compress/pull/718
* zstd: Minor speed tweaks by @greatroar in https://github.com/klauspost/compress/pull/716 https://github.com/klauspost/compress/pull/720
* export NoGzipResponseWriter for custom ResponseWriter wrappers by @harshavardhana in https://github.com/klauspost/compress/pull/722
* s2: Add example for indexing and existing stream https://github.com/klauspost/compress/pull/723

* Dec 11, 2022 (v1.15.13)
* zstd: Add [MaxEncodedSize](https://pkg.go.dev/github.com/klauspost/compress@v1.15.13/zstd#Encoder.MaxEncodedSize) to encoder https://github.com/klauspost/compress/pull/691
* zstd: Various tweaks and improvements https://github.com/klauspost/compress/pull/693 https://github.com/klauspost/compress/pull/695 https://github.com/klauspost/compress/pull/696 https://github.com/klauspost/compress/pull/701 https://github.com/klauspost/compress/pull/702 https://github.com/klauspost/compress/pull/703 https://github.com/klauspost/compress/pull/704 https://github.com/klauspost/compress/pull/705 https://github.com/klauspost/compress/pull/706 https://github.com/klauspost/compress/pull/707 https://github.com/klauspost/compress/pull/708

107

vendor/github.com/klauspost/compress/flate/deflate.go

generated

vendored

@@ -294,7 +294,6 @@ func (d *compressor) findMatch(pos int, prevHead int, lookahead int) (length, of
}
offset = 0

cGain := 0
if d.chain < 100 {
for i := prevHead; tries > 0; tries-- {
if wEnd == win[i+length] {
@@ -322,10 +321,14 @@ func (d *compressor) findMatch(pos int, prevHead int, lookahead int) (length, of
return
}

// Minimum gain to accept a match.
cGain := 4

// Some like it higher (CSV), some like it lower (JSON)
const baseCost = 6
const baseCost = 3
// Base is 4 bytes at with an additional cost.
// Matches must be better than this.

for i := prevHead; tries > 0; tries-- {
if wEnd == win[i+length] {
n := matchLen(win[i:i+minMatchLook], wPos)
@@ -333,7 +336,7 @@ func (d *compressor) findMatch(pos int, prevHead int, lookahead int) (length, of
// Calculate gain. Estimate
newGain := d.h.bitLengthRaw(wPos[:n]) - int(offsetExtraBits[offsetCode(uint32(pos-i))]) - baseCost - int(lengthExtraBits[lengthCodes[(n-3)&255]])

//fmt.Println(n, "gain:", newGain, "prev:", cGain, "raw:", d.h.bitLengthRaw(wPos[:n]))
//fmt.Println("gain:", newGain, "prev:", cGain, "raw:", d.h.bitLengthRaw(wPos[:n]), "this-len:", n, "prev-len:", length)
if newGain > cGain {
length = n
offset = pos - i
@@ -490,27 +493,103 @@ func (d *compressor) deflateLazy() {
}

if prevLength >= minMatchLength && s.length <= prevLength {
// Check for better match at end...
// No better match, but check for better match at end...
//
// checkOff must be >=2 since we otherwise risk checking s.index
// Offset of 2 seems to yield best results.
// Skip forward a number of bytes.
// Offset of 2 seems to yield best results. 3 is sometimes better.
const checkOff = 2

// Check all, except full length
if prevLength < maxMatchLength-checkOff {
prevIndex := s.index - 1
if prevIndex+prevLength+checkOff < s.maxInsertIndex {
if prevIndex+prevLength < s.maxInsertIndex {
end := lookahead
if lookahead > maxMatchLength {
end = maxMatchLength
if lookahead > maxMatchLength+checkOff {
end = maxMatchLength + checkOff
}
end += prevIndex
idx := prevIndex + prevLength - (4 - checkOff)
h := hash4(d.window[idx:])
ch2 := int(s.hashHead[h]) - s.hashOffset - prevLength + (4 - checkOff)
if ch2 > minIndex {
length := matchLen(d.window[prevIndex:end], d.window[ch2:])

// Hash at match end.
h := hash4(d.window[prevIndex+prevLength:])
ch2 := int(s.hashHead[h]) - s.hashOffset - prevLength
if prevIndex-ch2 != prevOffset && ch2 > minIndex+checkOff {
length := matchLen(d.window[prevIndex+checkOff:end], d.window[ch2+checkOff:])
// It seems like a pure length metric is best.
if length > prevLength {
prevLength = length
prevOffset = prevIndex - ch2

// Extend back...
for i := checkOff - 1; i >= 0; i-- {
if prevLength >= maxMatchLength || d.window[prevIndex+i] != d.window[ch2+i] {
// Emit tokens we "owe"
for j := 0; j <= i; j++ {
d.tokens.AddLiteral(d.window[prevIndex+j])
if d.tokens.n == maxFlateBlockTokens {
// The block includes the current character
if d.err = d.writeBlock(&d.tokens, s.index, false); d.err != nil {
return
}
d.tokens.Reset()
}
s.index++
if s.index < s.maxInsertIndex {
h := hash4(d.window[s.index:])
ch := s.hashHead[h]
s.chainHead = int(ch)
s.hashPrev[s.index&windowMask] = ch
s.hashHead[h] = uint32(s.index + s.hashOffset)
}
}
break
} else {
prevLength++
}
}
} else if false {
// Check one further ahead.
// Only rarely better, disabled for now.
prevIndex++
h := hash4(d.window[prevIndex+prevLength:])
ch2 := int(s.hashHead[h]) - s.hashOffset - prevLength
if prevIndex-ch2 != prevOffset && ch2 > minIndex+checkOff {
length := matchLen(d.window[prevIndex+checkOff:end], d.window[ch2+checkOff:])
// It seems like a pure length metric is best.
if length > prevLength+checkOff {
prevLength = length
prevOffset = prevIndex - ch2
prevIndex--

// Extend back...
for i := checkOff; i >= 0; i-- {
if prevLength >= maxMatchLength || d.window[prevIndex+i] != d.window[ch2+i-1] {
// Emit tokens we "owe"
for j := 0; j <= i; j++ {
d.tokens.AddLiteral(d.window[prevIndex+j])
if d.tokens.n == maxFlateBlockTokens {
// The block includes the current character
if d.err = d.writeBlock(&d.tokens, s.index, false); d.err != nil {
return
}
d.tokens.Reset()
}
s.index++
if s.index < s.maxInsertIndex {
h := hash4(d.window[s.index:])
ch := s.hashHead[h]
s.chainHead = int(ch)
s.hashPrev[s.index&windowMask] = ch
s.hashHead[h] = uint32(s.index + s.hashOffset)
}
}
break
} else {
prevLength++
}
}
}
}
}
}
}
}
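
In the gain-based path of `findMatch`, a candidate is accepted only when its estimated gain beats `cGain`. The gain is the literal bit cost minus the offset extra bits, `baseCost` and the length extra bits. The diff starts `cGain` at 4 in that path (the removed line initialized it to 0) and lowers `baseCost` from 6 to 3. Below is a hedged stdlib sketch of that selection rule. `candidate` and `pickMatch` are hypothetical names. The literal-bit figures are plain inputs standing in for `d.h.bitLengthRaw`, and the extra-bit counts stand in for the `offsetExtraBits`/`lengthExtraBits` table lookups.

```go
package main

import "fmt"

// candidate is a hypothetical summary of one possible match.
type candidate struct {
	length, offset int // match length and backward distance
	literalBits    int // estimated bits to emit the bytes as literals
	offsetExtra    int // extra bits needed by the offset code
	lengthExtra    int // extra bits needed by the length code
}

// pickMatch keeps the candidate with the highest estimated gain, mirroring the
// newGain > cGain test: gain = literalBits - offsetExtra - baseCost - lengthExtra.
// A candidate must beat minGain (cGain's starting value) to be accepted at all;
// if none does, (0, 0) is returned.
func pickMatch(cands []candidate, baseCost, minGain int) (length, offset int) {
	best := minGain
	for _, c := range cands {
		gain := c.literalBits - c.offsetExtra - baseCost - c.lengthExtra
		if gain > best {
			best = gain
			length, offset = c.length, c.offset
		}
	}
	return length, offset
}

func main() {
	cands := []candidate{
		{length: 4, offset: 100, literalBits: 32, offsetExtra: 5},
		{length: 8, offset: 5000, literalBits: 64, offsetExtra: 11, lengthExtra: 1},
	}
	fmt.Println(pickMatch(cands, 3, 4))
}
```

Lowering `baseCost` makes short matches competitive. With these toy numbers, a 3-byte candidate worth 12 literal bits and needing 2 offset extra bits has gain 4 at `baseCost = 6`, which does not beat 4. At `baseCost = 3` its gain is 7, so it is accepted.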

31

vendor/github.com/klauspost/compress/fse/compress.go

generated

vendored

@@ -146,54 +146,51 @@ func (s *Scratch) compress(src []byte) error {