Mirror of https://github.com/VictoriaMetrics/VictoriaMetrics.git (synced 2024-11-21 14:44:00 +00:00)

vendor: make vendor-update

Parent: 35cf2fe3a3
Commit: f279ba3585

156 changed files with 5276 additions and 2968 deletions

go.mod | 38

@@ -3,15 +3,15 @@ module github.com/VictoriaMetrics/VictoriaMetrics
 go 1.19

 require (
-cloud.google.com/go/storage v1.28.1
+cloud.google.com/go/storage v1.29.0
 github.com/VictoriaMetrics/fastcache v1.12.0

 // Do not use the original github.com/valyala/fasthttp because of issues
 // like https://github.com/valyala/fasthttp/commit/996610f021ff45fdc98c2ce7884d5fa4e7f9199b
 github.com/VictoriaMetrics/fasthttp v1.1.0
 github.com/VictoriaMetrics/metrics v1.23.1
-github.com/VictoriaMetrics/metricsql v0.51.2
-github.com/aws/aws-sdk-go v1.44.177
+github.com/VictoriaMetrics/metricsql v0.56.1
+github.com/aws/aws-sdk-go v1.44.204
 github.com/cespare/xxhash/v2 v2.2.0

 // TODO: switch back to https://github.com/cheggaaa/pb/v3 when v3-pooling branch

|

|

@ -20,38 +20,38 @@ require (

|

||||||

github.com/dmitryk-dk/pb/v3 v3.0.9

|

github.com/dmitryk-dk/pb/v3 v3.0.9

|

||||||

github.com/golang/snappy v0.0.4

|

github.com/golang/snappy v0.0.4

|

||||||

github.com/influxdata/influxdb v1.11.0

|

github.com/influxdata/influxdb v1.11.0

|

||||||

github.com/klauspost/compress v1.15.14

|

github.com/klauspost/compress v1.15.15

|

||||||

github.com/prometheus/prometheus v1.8.2-0.20201119142752-3ad25a6dc3d9

|

github.com/prometheus/prometheus v1.8.2-0.20201119142752-3ad25a6dc3d9

|

||||||

github.com/urfave/cli/v2 v2.23.7

|

github.com/urfave/cli/v2 v2.24.4

|

||||||

github.com/valyala/fastjson v1.6.4

|

github.com/valyala/fastjson v1.6.4

|

||||||

github.com/valyala/fastrand v1.1.0

|

github.com/valyala/fastrand v1.1.0

|

||||||

github.com/valyala/fasttemplate v1.2.2

|

github.com/valyala/fasttemplate v1.2.2

|

||||||

github.com/valyala/gozstd v1.17.0

|

github.com/valyala/gozstd v1.18.0

|

||||||

github.com/valyala/quicktemplate v1.7.0

|

github.com/valyala/quicktemplate v1.7.0

|

||||||

golang.org/x/net v0.5.0

|

golang.org/x/net v0.7.0

|

||||||

golang.org/x/oauth2 v0.4.0

|

golang.org/x/oauth2 v0.5.0

|

||||||

golang.org/x/sys v0.4.0

|

golang.org/x/sys v0.5.0

|

||||||

google.golang.org/api v0.106.0

|

google.golang.org/api v0.110.0

|

||||||

gopkg.in/yaml.v2 v2.4.0

|

gopkg.in/yaml.v2 v2.4.0

|

||||||

)

|

)

|

||||||

|

|

||||||

require (

|

require (

|

||||||

cloud.google.com/go v0.108.0 // indirect

|

cloud.google.com/go v0.110.0 // indirect

|

||||||

cloud.google.com/go/compute v1.15.1 // indirect

|

cloud.google.com/go/compute v1.18.0 // indirect

|

||||||

cloud.google.com/go/compute/metadata v0.2.3 // indirect

|

cloud.google.com/go/compute/metadata v0.2.3 // indirect

|

||||||

cloud.google.com/go/iam v0.10.0 // indirect

|

cloud.google.com/go/iam v0.12.0 // indirect

|

||||||

github.com/VividCortex/ewma v1.2.0 // indirect

|

github.com/VividCortex/ewma v1.2.0 // indirect

|

||||||

github.com/beorn7/perks v1.0.1 // indirect

|

github.com/beorn7/perks v1.0.1 // indirect

|

||||||

github.com/cpuguy83/go-md2man/v2 v2.0.2 // indirect

|

github.com/cpuguy83/go-md2man/v2 v2.0.2 // indirect

|

||||||

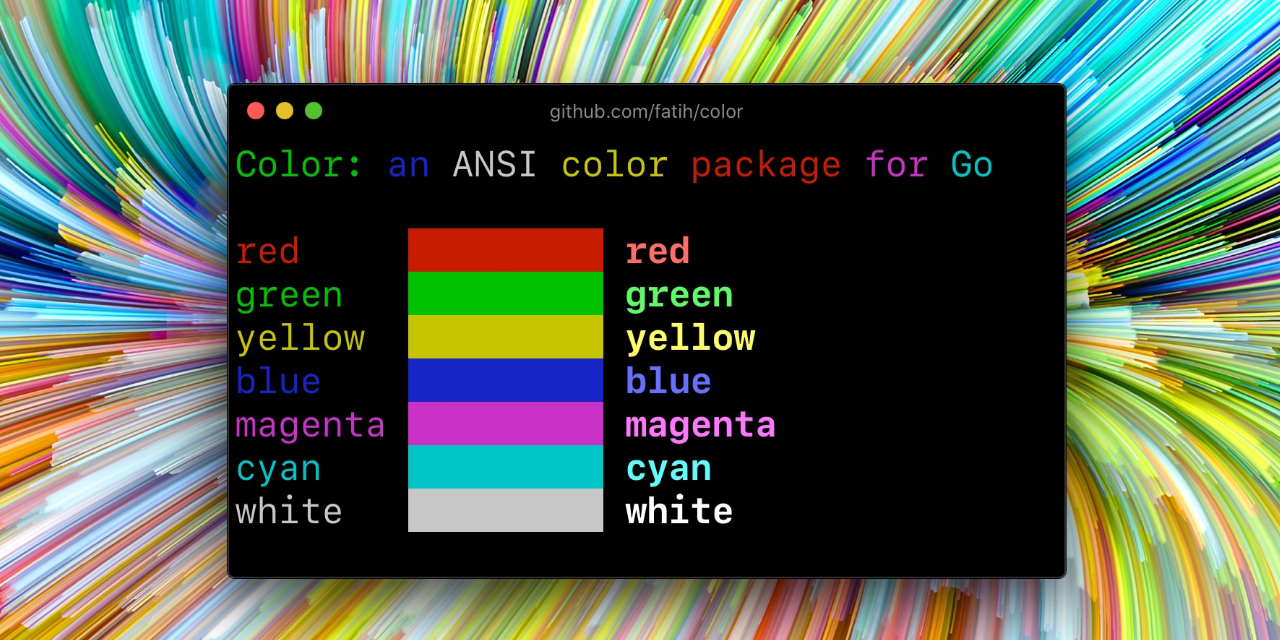

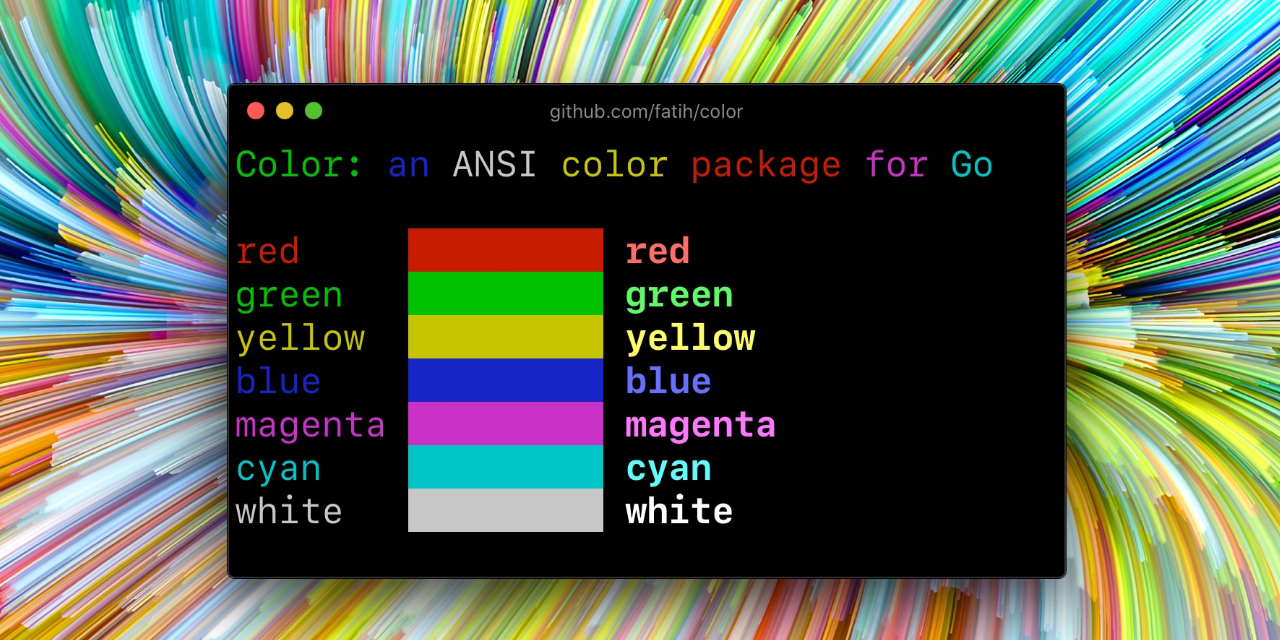

github.com/fatih/color v1.13.0 // indirect

|

github.com/fatih/color v1.14.1 // indirect

|

||||||

github.com/go-kit/kit v0.12.0 // indirect

|

github.com/go-kit/kit v0.12.0 // indirect

|

||||||

github.com/go-kit/log v0.2.1 // indirect

|

github.com/go-kit/log v0.2.1 // indirect

|

||||||

github.com/go-logfmt/logfmt v0.5.1 // indirect

|

github.com/go-logfmt/logfmt v0.6.0 // indirect

|

||||||

github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

|

github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

|

||||||

github.com/golang/protobuf v1.5.2 // indirect

|

github.com/golang/protobuf v1.5.2 // indirect

|

||||||

github.com/google/go-cmp v0.5.9 // indirect

|

github.com/google/go-cmp v0.5.9 // indirect

|

||||||

github.com/google/uuid v1.3.0 // indirect

|

github.com/google/uuid v1.3.0 // indirect

|

||||||

github.com/googleapis/enterprise-certificate-proxy v0.2.1 // indirect

|

github.com/googleapis/enterprise-certificate-proxy v0.2.3 // indirect

|

||||||

github.com/googleapis/gax-go/v2 v2.7.0 // indirect

|

github.com/googleapis/gax-go/v2 v2.7.0 // indirect

|

||||||

github.com/jmespath/go-jmespath v0.4.0 // indirect

|

github.com/jmespath/go-jmespath v0.4.0 // indirect

|

||||||

github.com/mattn/go-colorable v0.1.13 // indirect

|

github.com/mattn/go-colorable v0.1.13 // indirect

|

||||||

@@ -73,10 +73,10 @@ require (
 go.uber.org/atomic v1.10.0 // indirect
 go.uber.org/goleak v1.1.11-0.20210813005559-691160354723 // indirect
 golang.org/x/sync v0.1.0 // indirect
-golang.org/x/text v0.6.0 // indirect
+golang.org/x/text v0.7.0 // indirect
 golang.org/x/xerrors v0.0.0-20220907171357-04be3eba64a2 // indirect
 google.golang.org/appengine v1.6.7 // indirect
-google.golang.org/genproto v0.0.0-20230110181048-76db0878b65f // indirect
-google.golang.org/grpc v1.52.0 // indirect
+google.golang.org/genproto v0.0.0-20230216225411-c8e22ba71e44 // indirect
+google.golang.org/grpc v1.53.0 // indirect
 google.golang.org/protobuf v1.28.1 // indirect
 )
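Each changed pair in the go.mod hunks above is a single module whose required version moved. As a reading aid, here is a minimal Go sketch that uses only the standard library. It pairs removed and added require lines by module path and prints each bump as old -> new. ExtractBumps and Bump are hypothetical names and are not part of VictoriaMetrics or the Go toolchain. The sketch assumes that each line holds one module/version pair, optionally followed by a comment such as // indirect.

```go
package main

import (
	"fmt"
	"strings"
)

// Bump records one module whose required version changed (hypothetical type).
type Bump struct {
	Module, Old, New string
}

// parseRequire splits a require line such as
// "golang.org/x/net v0.5.0 // indirect" into module path and version.
// It reports ok=false for anything that is not exactly a module/version pair.
func parseRequire(line string) (module, version string, ok bool) {
	if i := strings.Index(line, "//"); i >= 0 {
		line = line[:i] // drop trailing comments like "// indirect"
	}
	fields := strings.Fields(line)
	if len(fields) != 2 {
		return "", "", false
	}
	return fields[0], fields[1], true
}

// ExtractBumps pairs removed ("-") and added ("+") require lines by module path.
// Context lines, hunk headers and comment-only lines are ignored.
// Assumes "---"/"+++" file header lines are not part of the input.
func ExtractBumps(diff string) []Bump {
	oldVersions := map[string]string{}
	var bumps []Bump
	for _, raw := range strings.Split(diff, "\n") {
		if raw == "" {
			continue
		}
		sign, body := raw[0], raw[1:]
		mod, ver, ok := parseRequire(body)
		if !ok {
			continue
		}
		switch sign {
		case '-':
			oldVersions[mod] = ver
		case '+':
			if old, found := oldVersions[mod]; found && old != ver {
				bumps = append(bumps, Bump{Module: mod, Old: old, New: ver})
			}
		}
	}
	return bumps
}

func main() {
	// A few lines copied from the go.mod hunks above.
	diff := `-cloud.google.com/go/storage v1.28.1
+cloud.google.com/go/storage v1.29.0
 github.com/VictoriaMetrics/fastcache v1.12.0
-golang.org/x/net v0.5.0
+golang.org/x/net v0.7.0
-google.golang.org/grpc v1.52.0 // indirect
+google.golang.org/grpc v1.53.0 // indirect`
	for _, b := range ExtractBumps(diff) {
		fmt.Printf("%s: %s -> %s\n", b.Module, b.Old, b.New)
	}
}
```

The map-based pairing does not depend on how removed and added lines are grouped. For that reason, it should also handle runs such as the four consecutive golang.org/x and google.golang.org/api changes in the second hunk.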

go.sum | 81

@@ -15,8 +15,8 @@ cloud.google.com/go v0.56.0/go.mod h1:jr7tqZxxKOVYizybht9+26Z/gUq7tiRzu+ACVAMbKV
 cloud.google.com/go v0.57.0/go.mod h1:oXiQ6Rzq3RAkkY7N6t3TcE6jE+CIBBbA36lwQ1JyzZs=
 cloud.google.com/go v0.62.0/go.mod h1:jmCYTdRCQuc1PHIIJ/maLInMho30T/Y0M4hTdTShOYc=
 cloud.google.com/go v0.65.0/go.mod h1:O5N8zS7uWy9vkA9vayVHs65eM1ubvY4h553ofrNHObY=
-cloud.google.com/go v0.108.0 h1:xntQwnfn8oHGX0crLVinvHM+AhXvi3QHQIEcX/2hiWk=
-cloud.google.com/go v0.108.0/go.mod h1:lNUfQqusBJp0bgAg6qrHgYFYbTB+dOiob1itwnlD33Q=
+cloud.google.com/go v0.110.0 h1:Zc8gqp3+a9/Eyph2KDmcGaPtbKRIoqq4YTlL4NMD0Ys=
+cloud.google.com/go v0.110.0/go.mod h1:SJnCLqQ0FCFGSZMUNUf84MV3Aia54kn7pi8st7tMzaY=
 cloud.google.com/go/bigquery v1.0.1/go.mod h1:i/xbL2UlR5RvWAURpBYZTtm/cXjCha9lbfbpx4poX+o=
 cloud.google.com/go/bigquery v1.3.0/go.mod h1:PjpwJnslEMmckchkHFfq+HTD2DmtT67aNFKH1/VBDHE=
 cloud.google.com/go/bigquery v1.4.0/go.mod h1:S8dzgnTigyfTmLBfrtrhyYhwRxG72rYxvftPBK2Dvzc=
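The go.sum hunks record two entries for each module version. According to the Go Modules Reference, the line whose version ends in /go.mod hashes only that module's go.mod file, and the other line hashes the files in the module's zip. A bump like cloud.google.com/go v0.108.0 to v0.110.0 therefore replaces both lines. The h1: prefix names the hash algorithm, which is SHA-256 based and base64 encoded.

The minimal Go sketch below uses only the standard library. It splits such a line into its parts and checks that the digest decodes to 32 bytes. ParseSumLine and SumLine are hypothetical names. The sketch does not recompute or verify the hash against any module contents.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"strings"
)

// SumLine is one parsed go.sum entry (hypothetical type for illustration).
type SumLine struct {
	Module    string
	Version   string
	GoModOnly bool   // true when the version carried a "/go.mod" suffix
	Digest    []byte // decoded bytes after the "h1:" prefix
}

// ParseSumLine splits "module version[/go.mod] h1:<base64>" into its parts.
// It only decodes the digest; it does not recompute it from module contents.
func ParseSumLine(line string) (SumLine, error) {
	fields := strings.Fields(line)
	if len(fields) != 3 {
		return SumLine{}, fmt.Errorf("want 3 fields, got %d in %q", len(fields), line)
	}
	s := SumLine{Module: fields[0], Version: fields[1]}
	if strings.HasSuffix(s.Version, "/go.mod") {
		s.Version = strings.TrimSuffix(s.Version, "/go.mod")
		s.GoModOnly = true
	}
	const prefix = "h1:"
	if !strings.HasPrefix(fields[2], prefix) {
		return SumLine{}, fmt.Errorf("unsupported hash algorithm in %q", fields[2])
	}
	digest, err := base64.StdEncoding.DecodeString(strings.TrimPrefix(fields[2], prefix))
	if err != nil {
		return SumLine{}, fmt.Errorf("bad base64 in %q: %w", fields[2], err)
	}
	if len(digest) != 32 { // SHA-256 produces 32 bytes
		return SumLine{}, fmt.Errorf("want a 32-byte digest, got %d bytes", len(digest))
	}
	s.Digest = digest
	return s, nil
}

func main() {
	// The two new cloud.google.com/go entries from the hunk above.
	for _, line := range []string{
		"cloud.google.com/go v0.110.0 h1:Zc8gqp3+a9/Eyph2KDmcGaPtbKRIoqq4YTlL4NMD0Ys=",
		"cloud.google.com/go v0.110.0/go.mod h1:SJnCLqQ0FCFGSZMUNUf84MV3Aia54kn7pi8st7tMzaY=",
	} {
		s, err := ParseSumLine(line)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s %s goModOnly=%t digest=%d bytes\n", s.Module, s.Version, s.GoModOnly, len(s.Digest))
	}
}
```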

@@ -24,14 +24,14 @@ cloud.google.com/go/bigquery v1.5.0/go.mod h1:snEHRnqQbz117VIFhE8bmtwIDY80NLUZUM
 cloud.google.com/go/bigquery v1.7.0/go.mod h1://okPTzCYNXSlb24MZs83e2Do+h+VXtc4gLoIoXIAPc=
 cloud.google.com/go/bigquery v1.8.0/go.mod h1:J5hqkt3O0uAFnINi6JXValWIb1v0goeZM77hZzJN/fQ=
 cloud.google.com/go/bigtable v1.2.0/go.mod h1:JcVAOl45lrTmQfLj7T6TxyMzIN/3FGGcFm+2xVAli2o=
-cloud.google.com/go/compute v1.15.1 h1:7UGq3QknM33pw5xATlpzeoomNxsacIVvTqTTvbfajmE=
-cloud.google.com/go/compute v1.15.1/go.mod h1:bjjoF/NtFUrkD/urWfdHaKuOPDR5nWIs63rR+SXhcpA=
+cloud.google.com/go/compute v1.18.0 h1:FEigFqoDbys2cvFkZ9Fjq4gnHBP55anJ0yQyau2f9oY=
+cloud.google.com/go/compute v1.18.0/go.mod h1:1X7yHxec2Ga+Ss6jPyjxRxpu2uu7PLgsOVXvgU0yacs=
 cloud.google.com/go/compute/metadata v0.2.3 h1:mg4jlk7mCAj6xXp9UJ4fjI9VUI5rubuGBW5aJ7UnBMY=
 cloud.google.com/go/compute/metadata v0.2.3/go.mod h1:VAV5nSsACxMJvgaAuX6Pk2AawlZn8kiOGuCv6gTkwuA=
 cloud.google.com/go/datastore v1.0.0/go.mod h1:LXYbyblFSglQ5pkeyhO+Qmw7ukd3C+pD7TKLgZqpHYE=
 cloud.google.com/go/datastore v1.1.0/go.mod h1:umbIZjpQpHh4hmRpGhH4tLFup+FVzqBi1b3c64qFpCk=
-cloud.google.com/go/iam v0.10.0 h1:fpP/gByFs6US1ma53v7VxhvbJpO2Aapng6wabJ99MuI=
-cloud.google.com/go/iam v0.10.0/go.mod h1:nXAECrMt2qHpF6RZUZseteD6QyanL68reN4OXPw0UWM=
+cloud.google.com/go/iam v0.12.0 h1:DRtTY29b75ciH6Ov1PHb4/iat2CLCvrOm40Q0a6DFpE=
+cloud.google.com/go/iam v0.12.0/go.mod h1:knyHGviacl11zrtZUoDuYpDgLjvr28sLQaG0YB2GYAY=
 cloud.google.com/go/longrunning v0.3.0 h1:NjljC+FYPV3uh5/OwWT6pVU+doBqMg2x/rZlE+CamDs=
 cloud.google.com/go/pubsub v1.0.1/go.mod h1:R0Gpsv3s54REJCy4fxDixWD93lHJMoZTyQ2kNxGRt3I=
 cloud.google.com/go/pubsub v1.1.0/go.mod h1:EwwdRX2sKPjnvnqCa270oGRyludottCI76h+R3AArQw=

@@ -42,8 +42,8 @@ cloud.google.com/go/storage v1.5.0/go.mod h1:tpKbwo567HUNpVclU5sGELwQWBDZ8gh0Zeo
 cloud.google.com/go/storage v1.6.0/go.mod h1:N7U0C8pVQ/+NIKOBQyamJIeKQKkZ+mxpohlUTyfDhBk=
 cloud.google.com/go/storage v1.8.0/go.mod h1:Wv1Oy7z6Yz3DshWRJFhqM/UCfaWIRTdp0RXyy7KQOVs=
 cloud.google.com/go/storage v1.10.0/go.mod h1:FLPqc6j+Ki4BU591ie1oL6qBQGu2Bl/tZ9ullr3+Kg0=
-cloud.google.com/go/storage v1.28.1 h1:F5QDG5ChchaAVQhINh24U99OWHURqrW8OmQcGKXcbgI=
-cloud.google.com/go/storage v1.28.1/go.mod h1:Qnisd4CqDdo6BGs2AD5LLnEsmSQ80wQ5ogcBBKhU86Y=
+cloud.google.com/go/storage v1.29.0 h1:6weCgzRvMg7lzuUurI4697AqIRPU1SvzHhynwpW31jI=
+cloud.google.com/go/storage v1.29.0/go.mod h1:4puEjyTKnku6gfKoTfNOU/W+a9JyuVNxjpS5GBrB8h4=
 collectd.org v0.3.0/go.mod h1:A/8DzQBkF6abtvrT2j/AU/4tiBgJWYyh0y/oB/4MlWE=
 dmitri.shuralyov.com/gpu/mtl v0.0.0-20190408044501-666a987793e9/go.mod h1:H6x//7gZCb22OMCxBHrMx7a5I7Hp++hsVxbQ4BYO7hU=
 github.com/Azure/azure-sdk-for-go v48.2.0+incompatible/go.mod h1:9XXNKU+eRnpl9moKnB4QOLf1HestfXbmab5FXxiDBjc=

@@ -91,8 +91,8 @@ github.com/VictoriaMetrics/fasthttp v1.1.0/go.mod h1:/7DMcogqd+aaD3G3Hg5kFgoFwlR
 github.com/VictoriaMetrics/metrics v1.18.1/go.mod h1:ArjwVz7WpgpegX/JpB0zpNF2h2232kErkEnzH1sxMmA=
 github.com/VictoriaMetrics/metrics v1.23.1 h1:/j8DzeJBxSpL2qSIdqnRFLvQQhbJyJbbEi22yMm7oL0=
 github.com/VictoriaMetrics/metrics v1.23.1/go.mod h1:rAr/llLpEnAdTehiNlUxKgnjcOuROSzpw0GvjpEbvFc=
-github.com/VictoriaMetrics/metricsql v0.51.2 h1:GCbxti0I46x3Ld/WhcUyawvLr6J0x90IaMftkjosHJI=
-github.com/VictoriaMetrics/metricsql v0.51.2/go.mod h1:6pP1ZeLVJHqJrHlF6Ij3gmpQIznSsgktEcZgsAWYel0=
+github.com/VictoriaMetrics/metricsql v0.56.1 h1:j+W4fA/gtozsZb4PlKDU0Ma2VOgl88xla4FEf29w94g=
+github.com/VictoriaMetrics/metricsql v0.56.1/go.mod h1:6pP1ZeLVJHqJrHlF6Ij3gmpQIznSsgktEcZgsAWYel0=
 github.com/VividCortex/ewma v1.2.0 h1:f58SaIzcDXrSy3kWaHNvuJgJ3Nmz59Zji6XoJR/q1ow=
 github.com/VividCortex/ewma v1.2.0/go.mod h1:nz4BbCtbLyFDeC9SUHbtcT5644juEuWfUAUnGx7j5l4=
 github.com/VividCortex/gohistogram v1.0.0/go.mod h1:Pf5mBqqDxYaXu3hDrrU+w6nw50o/4+TcAqDqk/vUH7g=

@@ -128,8 +128,8 @@ github.com/aws/aws-lambda-go v1.13.3/go.mod h1:4UKl9IzQMoD+QF79YdCuzCwp8VbmG4VAQ
 github.com/aws/aws-sdk-go v1.27.0/go.mod h1:KmX6BPdI08NWTb3/sm4ZGu5ShLoqVDhKgpiN924inxo=
 github.com/aws/aws-sdk-go v1.34.28/go.mod h1:H7NKnBqNVzoTJpGfLrQkkD+ytBA93eiDYi/+8rV9s48=
 github.com/aws/aws-sdk-go v1.35.31/go.mod h1:hcU610XS61/+aQV88ixoOzUoG7v3b31pl2zKMmprdro=
-github.com/aws/aws-sdk-go v1.44.177 h1:ckMJhU5Gj+4Rta+bJIUiUd7jvHom84aim3zkGPblq0s=
-github.com/aws/aws-sdk-go v1.44.177/go.mod h1:aVsgQcEevwlmQ7qHE9I3h+dtQgpqhFB+i8Phjh7fkwI=
+github.com/aws/aws-sdk-go v1.44.204 h1:7/tPUXfNOHB390A63t6fJIwmlwVQAkAwcbzKsU2/6OQ=
+github.com/aws/aws-sdk-go v1.44.204/go.mod h1:aVsgQcEevwlmQ7qHE9I3h+dtQgpqhFB+i8Phjh7fkwI=
 github.com/aws/aws-sdk-go-v2 v0.18.0/go.mod h1:JWVYvqSMppoMJC0x5wdwiImzgXTI9FuZwxzkQq9wy+g=
 github.com/beorn7/perks v0.0.0-20180321164747-3a771d992973/go.mod h1:Dwedo/Wpr24TaqPxmxbtue+5NUziq4I4S80YR8gNf3Q=
 github.com/beorn7/perks v1.0.0/go.mod h1:KWe93zE9D1o94FZ5RNwFwVgaQK1VOXiVxmqh+CedLV8=

@@ -200,8 +200,8 @@ github.com/envoyproxy/protoc-gen-validate v0.1.0/go.mod h1:iSmxcyjqTsJpI2R4NaDN7
 github.com/evanphx/json-patch v4.9.0+incompatible/go.mod h1:50XU6AFN0ol/bzJsmQLiYLvXMP4fmwYFNcr97nuDLSk=
 github.com/fatih/color v1.7.0/go.mod h1:Zm6kSWBoL9eyXnKyktHP6abPY2pDugNf5KwzbycvMj4=
 github.com/fatih/color v1.9.0/go.mod h1:eQcE1qtQxscV5RaZvpXrrb8Drkc3/DdQ+uUYCNjL+zU=
-github.com/fatih/color v1.13.0 h1:8LOYc1KYPPmyKMuN8QV2DNRWNbLo6LZ0iLs8+mlH53w=
-github.com/fatih/color v1.13.0/go.mod h1:kLAiJbzzSOZDVNGyDpeOxJ47H46qBXwg5ILebYFFOfk=
+github.com/fatih/color v1.14.1 h1:qfhVLaG5s+nCROl1zJsZRxFeYrHLqWroPOQ8BWiNb4w=
+github.com/fatih/color v1.14.1/go.mod h1:2oHN61fhTpgcxD3TSWCgKDiH1+x4OiDVVGH8WlgGZGg=
 github.com/fogleman/gg v1.2.1-0.20190220221249-0403632d5b90/go.mod h1:R/bRT+9gY/C5z7JzPU0zXsXHKM4/ayA+zqcVNZzPa1k=
 github.com/form3tech-oss/jwt-go v3.2.2+incompatible/go.mod h1:pbq4aXjuKjdthFRnoDwaVPLA+WlJuPGy+QneDUgJi2k=
 github.com/franela/goblin v0.0.0-20200105215937-c9ffbefa60db/go.mod h1:7dvUGVsVBjqR7JHJk0brhHOZYGmfBYOrK0ZhYMEtBr4=

@@ -227,8 +227,8 @@ github.com/go-kit/log v0.2.1/go.mod h1:NwTd00d/i8cPZ3xOwwiv2PO5MOcx78fFErGNcVmBj
 github.com/go-logfmt/logfmt v0.3.0/go.mod h1:Qt1PoO58o5twSAckw1HlFXLmHsOX5/0LbT9GBnD5lWE=
 github.com/go-logfmt/logfmt v0.4.0/go.mod h1:3RMwSq7FuexP4Kalkev3ejPJsZTpXXBr9+V4qmtdjCk=
 github.com/go-logfmt/logfmt v0.5.0/go.mod h1:wCYkCAKZfumFQihp8CzCvQ3paCTfi41vtzG1KdI/P7A=
-github.com/go-logfmt/logfmt v0.5.1 h1:otpy5pqBCBZ1ng9RQ0dPu4PN7ba75Y/aA+UpowDyNVA=
-github.com/go-logfmt/logfmt v0.5.1/go.mod h1:WYhtIu8zTZfxdn5+rREduYbwxfcBr/Vr6KEVveWlfTs=
+github.com/go-logfmt/logfmt v0.6.0 h1:wGYYu3uicYdqXVgoYbvnkrPVXkuLM1p1ifugDMEdRi4=
+github.com/go-logfmt/logfmt v0.6.0/go.mod h1:WYhtIu8zTZfxdn5+rREduYbwxfcBr/Vr6KEVveWlfTs=
 github.com/go-logr/logr v0.1.0/go.mod h1:ixOQHD9gLJUVQQ2ZOR7zLEifBX6tGkNJF4QyIY7sIas=
 github.com/go-logr/logr v0.2.0/go.mod h1:z6/tIYblkpsD+a4lm/fGIIU9mZ+XfAiaFtq7xTgseGU=
 github.com/go-openapi/analysis v0.0.0-20180825180245-b006789cd277/go.mod h1:k70tL6pCuVxPJOHXQ+wIac1FUrvNkHolPie/cLEU6hI=

@@ -399,7 +399,7 @@ github.com/google/gofuzz v1.1.0/go.mod h1:dBl0BpW6vV/+mYPU4Po3pmUjxk6FQPldtuIdl/
 github.com/google/martian v2.1.0+incompatible h1:/CP5g8u/VJHijgedC/Legn3BAbAaWPgecwXBIDzw5no=
 github.com/google/martian v2.1.0+incompatible/go.mod h1:9I4somxYTbIHy5NJKHRl3wXiIaQGbYVAs8BPL6v8lEs=
 github.com/google/martian/v3 v3.0.0/go.mod h1:y5Zk1BBys9G+gd6Jrk0W3cC1+ELVxBWuIGO+w/tUAp0=
-github.com/google/martian/v3 v3.2.1 h1:d8MncMlErDFTwQGBK1xhv026j9kqhvw1Qv9IbWT1VLQ=
+github.com/google/martian/v3 v3.3.2 h1:IqNFLAmvJOgVlpdEBiQbDc2EwKW77amAycfTuWKdfvw=
 github.com/google/pprof v0.0.0-20181206194817-3ea8567a2e57/go.mod h1:zfwlbNMJ+OItoe0UupaVj+oy1omPYYDuagoSzA8v9mc=
 github.com/google/pprof v0.0.0-20190515194954-54271f7e092f/go.mod h1:zfwlbNMJ+OItoe0UupaVj+oy1omPYYDuagoSzA8v9mc=
 github.com/google/pprof v0.0.0-20191218002539-d4f498aebedc/go.mod h1:ZgVRPoUq/hfqzAqh7sHMqb3I9Rq5C59dIz2SbBwJ4eM=

@@ -414,8 +414,8 @@ github.com/google/uuid v1.1.1/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+
 github.com/google/uuid v1.1.2/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+yHo=
 github.com/google/uuid v1.3.0 h1:t6JiXgmwXMjEs8VusXIJk2BXHsn+wx8BZdTaoZ5fu7I=
 github.com/google/uuid v1.3.0/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+yHo=
-github.com/googleapis/enterprise-certificate-proxy v0.2.1 h1:RY7tHKZcRlk788d5WSo/e83gOyyy742E8GSs771ySpg=
-github.com/googleapis/enterprise-certificate-proxy v0.2.1/go.mod h1:AwSRAtLfXpU5Nm3pW+v7rGDHp09LsPtGY9MduiEsR9k=
+github.com/googleapis/enterprise-certificate-proxy v0.2.3 h1:yk9/cqRKtT9wXZSsRH9aurXEpJX+U6FLtpYTdC3R06k=
+github.com/googleapis/enterprise-certificate-proxy v0.2.3/go.mod h1:AwSRAtLfXpU5Nm3pW+v7rGDHp09LsPtGY9MduiEsR9k=
 github.com/googleapis/gax-go/v2 v2.0.4/go.mod h1:0Wqv26UfaUD9n4G6kQubkQ+KchISgw+vpHVxEJEs9eg=
 github.com/googleapis/gax-go/v2 v2.0.5/go.mod h1:DWXyrwAJ9X0FpwwEdw+IPEYBICEFu5mhpdKc/us6bOk=
 github.com/googleapis/gax-go/v2 v2.7.0 h1:IcsPKeInNvYi7eqSaDjiZqDDKu5rsmunY0Y1YupQSSQ=

@@ -516,8 +516,8 @@ github.com/klauspost/compress v1.4.0/go.mod h1:RyIbtBH6LamlWaDj8nUwkbUhJ87Yi3uG0
 github.com/klauspost/compress v1.9.5/go.mod h1:RyIbtBH6LamlWaDj8nUwkbUhJ87Yi3uG0guNDohfE1A=
 github.com/klauspost/compress v1.13.4/go.mod h1:8dP1Hq4DHOhN9w426knH3Rhby4rFm6D8eO+e+Dq5Gzg=
 github.com/klauspost/compress v1.13.5/go.mod h1:/3/Vjq9QcHkK5uEr5lBEmyoZ1iFhe47etQ6QUkpK6sk=
-github.com/klauspost/compress v1.15.14 h1:i7WCKDToww0wA+9qrUZ1xOjp218vfFo3nTU6UHp+gOc=
-github.com/klauspost/compress v1.15.14/go.mod h1:QPwzmACJjUTFsnSHH934V6woptycfrDDJnH7hvFVbGM=
+github.com/klauspost/compress v1.15.15 h1:EF27CXIuDsYJ6mmvtBRlEuB2UVOqHG1tAXgZ7yIO+lw=
+github.com/klauspost/compress v1.15.15/go.mod h1:ZcK2JAFqKOpnBlxcLsJzYfrS9X1akm9fHZNnD9+Vo/4=
 github.com/klauspost/cpuid v0.0.0-20170728055534-ae7887de9fa5/go.mod h1:Pj4uuM528wm8OyEC2QMXAi2YiTZ96dNQPGgoMS4s3ek=
 github.com/klauspost/crc32 v0.0.0-20161016154125-cb6bfca970f6/go.mod h1:+ZoRqAPRLkC4NPOvfYeR5KNOrY6TD+/sAC3HXPZgDYg=
 github.com/klauspost/pgzip v1.0.2-0.20170402124221-0bf5dcad4ada/go.mod h1:Ch1tH69qFZu15pkjo5kYi6mth2Zzwzt50oCQKQE9RUs=

@@ -552,7 +552,6 @@ github.com/markbates/safe v1.0.1/go.mod h1:nAqgmRi7cY2nqMc92/bSEeQA+R4OheNU2T1kN
 github.com/mattn/go-colorable v0.0.9/go.mod h1:9vuHe8Xs5qXnSaW/c/ABM9alt+Vo+STaOChaDxuIBZU=
 github.com/mattn/go-colorable v0.1.4/go.mod h1:U0ppj6V5qS13XJ6of8GYAs25YV2eR4EVcfRqFIhoBtE=
 github.com/mattn/go-colorable v0.1.6/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=
-github.com/mattn/go-colorable v0.1.9/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=
 github.com/mattn/go-colorable v0.1.13 h1:fFA4WZxdEF4tXPZVKMLwD8oUnCTTo08duU7wxecdEvA=
 github.com/mattn/go-colorable v0.1.13/go.mod h1:7S9/ev0klgBDR4GtXTXX8a3vIGJpMovkB8vQcUbaXHg=
 github.com/mattn/go-isatty v0.0.3/go.mod h1:M+lRXTBqGeGNdLjl/ufCoiOlB5xdOkqRJdNxMWT7Zi4=
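Two of the go.sum hunks remove a line without adding one. Their headers therefore read -552,7 +552,6 and -561,7 +560,6, meaning seven lines on the old side and six on the new. The same one-line deletion explains why every later hunk starts lower on the new side: -561 becomes +560, and -769 becomes +767 once both deletions have happened.

The minimal Go sketch below uses only the standard library. It reads the counts from a unified diff hunk header and recounts them from the hunk body, treating context lines as belonging to both sides. HunkCounts and CountBody are hypothetical helpers. The sketch assumes the body contains only context, "-" and "+" lines, with no "\ No newline at end of file" markers.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
	"strings"
)

// hunkHeader matches "@@ -oldStart[,oldCount] +newStart[,newCount] @@".
var hunkHeader = regexp.MustCompile(`^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@`)

// HunkCounts returns the old and new line counts declared in a hunk header.
// A missing count defaults to 1, as in the unified diff format.
func HunkCounts(header string) (oldN, newN int, err error) {
	m := hunkHeader.FindStringSubmatch(header)
	if m == nil {
		return 0, 0, fmt.Errorf("not a hunk header: %q", header)
	}
	oldN, newN = 1, 1
	if m[2] != "" {
		if oldN, err = strconv.Atoi(m[2]); err != nil {
			return 0, 0, err
		}
	}
	if m[4] != "" {
		if newN, err = strconv.Atoi(m[4]); err != nil {
			return 0, 0, err
		}
	}
	return oldN, newN, nil
}

// CountBody recounts a hunk body: '-' lines belong to the old side only,
// '+' lines to the new side only, and context lines to both.
func CountBody(body []string) (oldN, newN int) {
	for _, line := range body {
		switch {
		case strings.HasPrefix(line, "-"):
			oldN++
		case strings.HasPrefix(line, "+"):
			newN++
		default: // context line (leading space)
			oldN++
			newN++
		}
	}
	return oldN, newN
}

func main() {
	header := "@@ -552,7 +552,6 @@ github.com/markbates/safe v1.0.1/go.mod"
	// Body of the go-colorable hunk above; hashes omitted for brevity.
	body := []string{
		" github.com/mattn/go-colorable v0.0.9/go.mod",
		" github.com/mattn/go-colorable v0.1.4/go.mod",
		" github.com/mattn/go-colorable v0.1.6/go.mod",
		"-github.com/mattn/go-colorable v0.1.9/go.mod",
		" github.com/mattn/go-colorable v0.1.13",
		" github.com/mattn/go-colorable v0.1.13/go.mod",
		" github.com/mattn/go-isatty v0.0.3/go.mod",
	}
	wantOld, wantNew, err := HunkCounts(header)
	if err != nil {
		fmt.Println(err)
		return
	}
	gotOld, gotNew := CountBody(body)
	fmt.Printf("header: -%d +%d, body: -%d +%d, consistent=%t\n",
		wantOld, wantNew, gotOld, gotNew, wantOld == gotOld && wantNew == gotNew)
}
```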

@@ -561,7 +560,6 @@ github.com/mattn/go-isatty v0.0.8/go.mod h1:Iq45c/XA43vh69/j3iqttzPXn0bhXyGjM0Hd
 github.com/mattn/go-isatty v0.0.10/go.mod h1:qgIWMr58cqv1PHHyhnkY9lrL7etaEgOFcMEpPG5Rm84=
 github.com/mattn/go-isatty v0.0.11/go.mod h1:PhnuNfih5lzO57/f3n+odYbM4JtupLOxQOAqxQCu2WE=
 github.com/mattn/go-isatty v0.0.12/go.mod h1:cbi8OIDigv2wuxKPP5vlRcQ1OAZbq2CE4Kysco4FUpU=
-github.com/mattn/go-isatty v0.0.14/go.mod h1:7GGIvUiUoEMVVmxf/4nioHXj79iQHKdU27kJ6hsGG94=
 github.com/mattn/go-isatty v0.0.16/go.mod h1:kYGgaQfpe5nmfYZH+SKPsOc2e4SrIfOl2e/yFXSvRLM=
 github.com/mattn/go-isatty v0.0.17 h1:BTarxUcIeDqL27Mc+vyvdWYSL28zpIhv3RoTdsLMPng=
 github.com/mattn/go-isatty v0.0.17/go.mod h1:kYGgaQfpe5nmfYZH+SKPsOc2e4SrIfOl2e/yFXSvRLM=

@@ -769,8 +767,8 @@ github.com/uber/jaeger-client-go v2.25.0+incompatible/go.mod h1:WVhlPFC8FDjOFMMW
 github.com/uber/jaeger-lib v2.4.0+incompatible/go.mod h1:ComeNDZlWwrWnDv8aPp0Ba6+uUTzImX/AauajbLI56U=
 github.com/urfave/cli v1.20.0/go.mod h1:70zkFmudgCuE/ngEzBv17Jvp/497gISqfk5gWijbERA=
 github.com/urfave/cli v1.22.1/go.mod h1:Gos4lmkARVdJ6EkW0WaNv/tZAAMe9V7XWyB60NtXRu0=
-github.com/urfave/cli/v2 v2.23.7 h1:YHDQ46s3VghFHFf1DdF+Sh7H4RqhcM+t0TmZRJx4oJY=
-github.com/urfave/cli/v2 v2.23.7/go.mod h1:GHupkWPMM0M/sj1a2b4wUrWBPzazNrIjouW6fmdJLxc=
+github.com/urfave/cli/v2 v2.24.4 h1:0gyJJEBYtCV87zI/x2nZCPyDxD51K6xM8SkwjHFCNEU=
+github.com/urfave/cli/v2 v2.24.4/go.mod h1:GHupkWPMM0M/sj1a2b4wUrWBPzazNrIjouW6fmdJLxc=
 github.com/valyala/bytebufferpool v1.0.0 h1:GqA5TC/0021Y/b9FG4Oi9Mr3q7XYx6KllzawFIhcdPw=
 github.com/valyala/bytebufferpool v1.0.0/go.mod h1:6bBcMArwyJ5K/AmCkWv1jt77kVWyCJ6HpOuEn7z0Csc=
 github.com/valyala/fasthttp v1.30.0/go.mod h1:2rsYD01CKFrjjsvFxx75KlEUNpWNBY9JWD3K/7o2Cus=

@@ -780,8 +778,8 @@ github.com/valyala/fastrand v1.1.0 h1:f+5HkLW4rsgzdNoleUOB69hyT9IlD2ZQh9GyDMfb5G
 github.com/valyala/fastrand v1.1.0/go.mod h1:HWqCzkrkg6QXT8V2EXWvXCoow7vLwOFN002oeRzjapQ=
 github.com/valyala/fasttemplate v1.2.2 h1:lxLXG0uE3Qnshl9QyaK6XJxMXlQZELvChBOCmQD0Loo=
 github.com/valyala/fasttemplate v1.2.2/go.mod h1:KHLXt3tVN2HBp8eijSv/kGJopbvo7S+qRAEEKiv+SiQ=
-github.com/valyala/gozstd v1.17.0 h1:M4Ds4MIrw+pD+s6vYtuFZ8D3iEw9htzfdytOV3C3iQU=
-github.com/valyala/gozstd v1.17.0/go.mod h1:y5Ew47GLlP37EkTB+B4s7r6A5rdaeB7ftbl9zoYiIPQ=
+github.com/valyala/gozstd v1.18.0 h1:f4BskcUZBnDrEJ2F+lVbNCMGOFBoGHEw71RBkCNR4IM=
+github.com/valyala/gozstd v1.18.0/go.mod h1:y5Ew47GLlP37EkTB+B4s7r6A5rdaeB7ftbl9zoYiIPQ=
 github.com/valyala/histogram v1.2.0 h1:wyYGAZZt3CpwUiIb9AU/Zbllg1llXyrtApRS815OLoQ=
 github.com/valyala/histogram v1.2.0/go.mod h1:Hb4kBwb4UxsaNbbbh+RRz8ZR6pdodR57tzWUS3BUzXY=
 github.com/valyala/quicktemplate v1.7.0 h1:LUPTJmlVcb46OOUY3IeD9DojFpAVbsG+5WFTcjMJzCM=

@@ -934,8 +932,8 @@ golang.org/x/net v0.0.0-20210405180319-a5a99cb37ef4/go.mod h1:p54w0d4576C0XHj96b
 golang.org/x/net v0.0.0-20210510120150-4163338589ed/go.mod h1:9nx3DQGgdP8bBQD5qxJ1jj9UTztislL4KSBs9R2vV5Y=
 golang.org/x/net v0.0.0-20220722155237-a158d28d115b/go.mod h1:XRhObCWvk6IyKnWLug+ECip1KBveYUHfp+8e9klMJ9c=
 golang.org/x/net v0.1.0/go.mod h1:Cx3nUiGt4eDBEyega/BKRp+/AlGL8hYe7U9odMt2Cco=
-golang.org/x/net v0.5.0 h1:GyT4nK/YDHSqa1c4753ouYCDajOYKTja9Xb/OHtgvSw=
-golang.org/x/net v0.5.0/go.mod h1:DivGGAXEgPSlEBzxGzZI+ZLohi+xUj054jfeKui00ws=
+golang.org/x/net v0.7.0 h1:rJrUqqhjsgNp7KqAIc25s9pZnjU7TUcSY7HcVZjdn1g=
+golang.org/x/net v0.7.0/go.mod h1:2Tu9+aMcznHK/AK1HMvgo6xiTLG5rD5rZLDS+rp2Bjs=
 golang.org/x/oauth2 v0.0.0-20180821212333-d2e6202438be/go.mod h1:N/0e6XlmueqKjAGxoOufVs8QHGRruUQn6yWY3a++T0U=
 golang.org/x/oauth2 v0.0.0-20190226205417-e64efc72b421/go.mod h1:gOpvHmFTYa4IltrdGE7lF6nIHvwfUNPOp7c8zoXwtLw=
 golang.org/x/oauth2 v0.0.0-20190604053449-0f29369cfe45/go.mod h1:gOpvHmFTYa4IltrdGE7lF6nIHvwfUNPOp7c8zoXwtLw=

@@ -943,8 +941,8 @@ golang.org/x/oauth2 v0.0.0-20191202225959-858c2ad4c8b6/go.mod h1:gOpvHmFTYa4Iltr
 golang.org/x/oauth2 v0.0.0-20200107190931-bf48bf16ab8d/go.mod h1:gOpvHmFTYa4IltrdGE7lF6nIHvwfUNPOp7c8zoXwtLw=
 golang.org/x/oauth2 v0.0.0-20200902213428-5d25da1a8d43/go.mod h1:KelEdhl1UZF7XfJ4dDtk6s++YSgaE7mD/BuKKDLBl4A=
 golang.org/x/oauth2 v0.0.0-20201109201403-9fd604954f58/go.mod h1:KelEdhl1UZF7XfJ4dDtk6s++YSgaE7mD/BuKKDLBl4A=
-golang.org/x/oauth2 v0.4.0 h1:NF0gk8LVPg1Ml7SSbGyySuoxdsXitj7TvgvuRxIMc/M=
-golang.org/x/oauth2 v0.4.0/go.mod h1:RznEsdpjGAINPTOF0UH/t+xJ75L18YO3Ho6Pyn+uRec=
+golang.org/x/oauth2 v0.5.0 h1:HuArIo48skDwlrvM3sEdHXElYslAMsf3KwRkkW4MC4s=
+golang.org/x/oauth2 v0.5.0/go.mod h1:9/XBHVqLaWO3/BRHs5jbpYCnOZVjj5V0ndyaAM7KB4I=
 golang.org/x/sync v0.0.0-20180314180146-1d60e4601c6f/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
 golang.org/x/sync v0.0.0-20181108010431-42b317875d0f/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
 golang.org/x/sync v0.0.0-20181221193216-37e7f081c4d4/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

@@ -1023,14 +1021,13 @@ golang.org/x/sys v0.0.0-20210423082822-04245dca01da/go.mod h1:h1NjWce9XRLGQEsW7w
 golang.org/x/sys v0.0.0-20210510120138-977fb7262007/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20210514084401-e8d321eab015/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20210615035016-665e8c7367d1/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
-golang.org/x/sys v0.0.0-20210630005230-0f9fa26af87c/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20220405052023-b1e9470b6e64/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20220520151302-bc2c85ada10a/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20220722155257-8c9f86f7a55f/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.0.0-20220811171246-fbc7d0a398ab/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/sys v0.1.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
-golang.org/x/sys v0.4.0 h1:Zr2JFtRQNX3BCZ8YtxRE9hNJYC8J6I1MVbMg6owUp18=
-golang.org/x/sys v0.4.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
+golang.org/x/sys v0.5.0 h1:MUK/U/4lj1t1oPg0HfuXDN/Z1wv31ZJ/YcPiGccS4DU=
+golang.org/x/sys v0.5.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
 golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=
 golang.org/x/term v0.0.0-20210927222741-03fcf44c2211/go.mod h1:jbD1KX2456YbFQfuXm/mYQcufACuNUgVhRMnK/tPxf8=
 golang.org/x/term v0.1.0/go.mod h1:jbD1KX2456YbFQfuXm/mYQcufACuNUgVhRMnK/tPxf8=

@@ -1043,8 +1040,8 @@ golang.org/x/text v0.3.4/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
 golang.org/x/text v0.3.6/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
 golang.org/x/text v0.3.7/go.mod h1:u+2+/6zg+i71rQMx5EYifcz6MCKuco9NR6JIITiCfzQ=
 golang.org/x/text v0.4.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=
-golang.org/x/text v0.6.0 h1:3XmdazWV+ubf7QgHSTWeykHOci5oeekaGJBLkrkaw4k=
-golang.org/x/text v0.6.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=
+golang.org/x/text v0.7.0 h1:4BRB4x83lYWy72KwLD/qYDuTu7q9PjSagHvijDw7cLo=
+golang.org/x/text v0.7.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=
 golang.org/x/time v0.0.0-20180412165947-fbb02b2291d2/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=
 golang.org/x/time v0.0.0-20181108054448-85acf8d2951c/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=
 golang.org/x/time v0.0.0-20190308202827-9d24e82272b4/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=

@@ -1146,8 +1143,8 @@ google.golang.org/api v0.28.0/go.mod h1:lIXQywCXRcnZPGlsd8NbLnOjtAoL6em04bJ9+z0M
 google.golang.org/api v0.29.0/go.mod h1:Lcubydp8VUV7KeIHD9z2Bys/sm/vGKnG1UHuDBSrHWM=
 google.golang.org/api v0.30.0/go.mod h1:QGmEvQ87FHZNiUVJkT14jQNYJ4ZJjdRF23ZXz5138Fc=
 google.golang.org/api v0.35.0/go.mod h1:/XrVsuzM0rZmrsbjJutiuftIzeuTQcEeaYcSk/mQ1dg=
-google.golang.org/api v0.106.0 h1:ffmW0faWCwKkpbbtvlY/K/8fUl+JKvNS5CVzRoyfCv8=
-google.golang.org/api v0.106.0/go.mod h1:2Ts0XTHNVWxypznxWOYUeI4g3WdP9Pk2Qk58+a/O9MY=
+google.golang.org/api v0.110.0 h1:l+rh0KYUooe9JGbGVx71tbFo4SMbMTXK3I3ia2QSEeU=
+google.golang.org/api v0.110.0/go.mod h1:7FC4Vvx1Mooxh8C5HWjzZHcavuS2f6pmJpZx60ca7iI=
 google.golang.org/appengine v1.1.0/go.mod h1:EbEs0AVv82hx2wNQdGPgUI5lhzA/G0D9YwlJXL52JkM=
 google.golang.org/appengine v1.2.0/go.mod h1:xpcJRLb0r/rnEns0DIKYYv+WjYCduHsrkT7/EB5XEv4=
 google.golang.org/appengine v1.4.0/go.mod h1:xpcJRLb0r/rnEns0DIKYYv+WjYCduHsrkT7/EB5XEv4=

@@ -1191,8 +1188,8 @@ google.golang.org/genproto v0.0.0-20200729003335-053ba62fc06f/go.mod h1:FWY/as6D
 google.golang.org/genproto v0.0.0-20200804131852-c06518451d9c/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
 google.golang.org/genproto v0.0.0-20200825200019-8632dd797987/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
 google.golang.org/genproto v0.0.0-20200904004341-0bd0a958aa1d/go.mod h1:FWY/as6DDZQgahTzZj3fqbO1CbirC29ZNUFHwi0/+no=
-google.golang.org/genproto v0.0.0-20230110181048-76db0878b65f h1:BWUVssLB0HVOSY78gIdvk1dTVYtT1y8SBWtPYuTJ/6w=
-google.golang.org/genproto v0.0.0-20230110181048-76db0878b65f/go.mod h1:RGgjbofJ8xD9Sq1VVhDM1Vok1vRONV+rg+CjzG4SZKM=
+google.golang.org/genproto v0.0.0-20230216225411-c8e22ba71e44 h1:EfLuoKW5WfkgVdDy7dTK8qSbH37AX5mj/MFh+bGPz14=
+google.golang.org/genproto v0.0.0-20230216225411-c8e22ba71e44/go.mod h1:8B0gmkoRebU8ukX6HP+4wrVQUY1+6PkQ44BSyIlflHA=
 google.golang.org/grpc v1.17.0/go.mod h1:6QZJwpn2B+Zp71q/5VxRsJ6NXXVCE5NRUHRo+f3cWCs=
 google.golang.org/grpc v1.19.0/go.mod h1:mqu4LbDTu4XGKhr4mRzUsmM4RtVoemTSY81AxZiDr8c=
 google.golang.org/grpc v1.20.0/go.mod h1:chYK+tFQF0nDUGJgXMSgLCQk3phJEuONr2DCgLDdAQM=

@@ -1213,8 +1210,8 @@ google.golang.org/grpc v1.31.0/go.mod h1:N36X2cJ7JwdamYAgDz+s+rVMFjt3numwzf/HckM
 google.golang.org/grpc v1.31.1/go.mod h1:N36X2cJ7JwdamYAgDz+s+rVMFjt3numwzf/HckM8pak=
 google.golang.org/grpc v1.33.1/go.mod h1:fr5YgcSWrqhRRxogOsw7RzIpsmvOZ6IcH4kBYTpR3n0=
 google.golang.org/grpc v1.33.2/go.mod h1:JMHMWHQWaTccqQQlmk3MJZS+GWXOdAesneDmEnv2fbc=
-google.golang.org/grpc v1.52.0 h1:kd48UiU7EHsV4rnLyOJRuP/Il/UHE7gdDAQ+SZI7nZk=
-google.golang.org/grpc v1.52.0/go.mod h1:pu6fVzoFb+NBYNAvQL08ic+lvB2IojljRYuun5vorUY=
+google.golang.org/grpc v1.53.0 h1:LAv2ds7cmFV/XTS3XG1NneeENYrXGmorPxsBbptIjNc=
+google.golang.org/grpc v1.53.0/go.mod h1:OnIrk0ipVdj4N5d9IUoFUx72/VlD7+jUsHwZgwSMQpw=
 google.golang.org/protobuf v0.0.0-20200109180630-ec00e32a8dfd/go.mod h1:DFci5gLYBciE7Vtevhsrf46CRTquxDuWsQurQQe4oz8=
 google.golang.org/protobuf v0.0.0-20200221191635-4d8936d0db64/go.mod h1:kwYJMbMJ01Woi6D6+Kah6886xMZcty6N08ah7+eCXa0=
 google.golang.org/protobuf v0.0.0-20200228230310-ab0ca4ff8a60/go.mod h1:cfTl7dwQJ+fmap5saPgwCLgHXTUD7jkjRqWcaiX5VyM=
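In the `go.sum` hunks above, each module version normally appears twice. The plain `<path> <version> h1:...` entry hashes the module's full contents. The `<path> <version>/go.mod h1:...` entry hashes only its `go.mod` file.

When reviewing a vendor-update diff, it can help to split these lines into fields and see which modules actually moved. The Go sketch below does that. The `sumEntry` type and `parseSumLine` helper are hypothetical illustrations, not part of the Go toolchain's API. The sketch handles only the `h1:` hash form that appears in this diff.

```go
package main

import (
	"fmt"
	"strings"
)

// sumEntry is a hypothetical representation of a single go.sum line.
type sumEntry struct {
	Path      string // module path, e.g. golang.org/x/net
	Version   string // module version, e.g. v0.7.0
	GoModOnly bool   // true for "<version>/go.mod" entries
	Hash      string // "h1:..." hash as recorded in go.sum
}

// parseSumLine splits "<path> <version>[/go.mod] <hash>" into its parts.
func parseSumLine(line string) (sumEntry, error) {
	fields := strings.Fields(line)
	if len(fields) != 3 {
		return sumEntry{}, fmt.Errorf("expected 3 fields, got %d", len(fields))
	}
	e := sumEntry{Path: fields[0], Version: fields[1], Hash: fields[2]}
	if strings.HasSuffix(e.Version, "/go.mod") {
		e.Version = strings.TrimSuffix(e.Version, "/go.mod")
		e.GoModOnly = true
	}
	if !strings.HasPrefix(e.Hash, "h1:") {
		return sumEntry{}, fmt.Errorf("unsupported hash %q", e.Hash)
	}
	return e, nil
}

func main() {
	for _, line := range []string{
		"golang.org/x/net v0.7.0 h1:rJrUqqhjsgNp7KqAIc25s9pZnjU7TUcSY7HcVZjdn1g=",
		"golang.org/x/net v0.7.0/go.mod h1:2Tu9+aMcznHK/AK1HMvgo6xiTLG5rD5rZLDS+rp2Bjs=",
	} {
		e, err := parseSumLine(line)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s %s goModOnly=%t\n", e.Path, e.Version, e.GoModOnly)
	}
}
```

The sketch uses `strings.HasSuffix` and `strings.TrimSuffix` rather than `strings.CutSuffix` so that it also builds with the `go 1.19` directive declared in this repository's `go.mod`.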

2 vendor/cloud.google.com/go/compute/internal/version.go (generated, vendored)

@@ -15,4 +15,4 @@
 package internal

 // Version is the current tagged release of the library.
-const Version = "1.15.1"
+const Version = "1.18.0"

14 vendor/cloud.google.com/go/iam/CHANGES.md (generated, vendored)

@@ -1,5 +1,19 @@
 # Changes

+## [0.12.0](https://github.com/googleapis/google-cloud-go/compare/iam/v0.11.0...iam/v0.12.0) (2023-02-17)
+
+
+### Features
+
+* **iam:** Migrate to new stubs ([a61ddcd](https://github.com/googleapis/google-cloud-go/commit/a61ddcd3041c7af4a15109dc4431f9b327c497fb))
+
+## [0.11.0](https://github.com/googleapis/google-cloud-go/compare/iam/v0.10.0...iam/v0.11.0) (2023-02-16)
+
+
+### Features
+
+* **iam:** Start generating proto stubs ([970d763](https://github.com/googleapis/google-cloud-go/commit/970d763531b54b2bc75d7ff26a20b6e05150cab8))
+
 ## [0.10.0](https://github.com/googleapis/google-cloud-go/compare/iam/v0.9.0...iam/v0.10.0) (2023-01-04)

2 vendor/cloud.google.com/go/iam/apiv1/iampb/iam_policy.pb.go (generated, vendored)

@@ -15,7 +15,7 @@
 // Code generated by protoc-gen-go. DO NOT EDIT.
 // versions:
 // protoc-gen-go v1.26.0
-// protoc v3.21.9
+// protoc v3.21.12
 // source: google/iam/v1/iam_policy.proto

 package iampb

2 vendor/cloud.google.com/go/iam/apiv1/iampb/options.pb.go (generated, vendored)

@@ -15,7 +15,7 @@
 // Code generated by protoc-gen-go. DO NOT EDIT.
 // versions:
 // protoc-gen-go v1.26.0
-// protoc v3.21.9
+// protoc v3.21.12
 // source: google/iam/v1/options.proto

 package iampb

2 vendor/cloud.google.com/go/iam/apiv1/iampb/policy.pb.go (generated, vendored)

@@ -15,7 +15,7 @@
 // Code generated by protoc-gen-go. DO NOT EDIT.
 // versions:
 // protoc-gen-go v1.26.0
-// protoc v3.21.9
+// protoc v3.21.12
 // source: google/iam/v1/policy.proto

 package iampb

2 vendor/cloud.google.com/go/iam/iam.go (generated, vendored)

@@ -26,8 +26,8 @@ import (
 "fmt"
 "time"

+pb "cloud.google.com/go/iam/apiv1/iampb"
 gax "github.com/googleapis/gax-go/v2"
-pb "google.golang.org/genproto/googleapis/iam/v1"
 "google.golang.org/grpc"
 "google.golang.org/grpc/codes"
 "google.golang.org/grpc/metadata"

27 vendor/cloud.google.com/go/internal/.repo-metadata-full.json (generated, vendored)

@@ -548,6 +548,15 @@
 "release_level": "beta",
 "library_type": "GAPIC_AUTO"
 },
+"cloud.google.com/go/datacatalog/lineage/apiv1": {
+"distribution_name": "cloud.google.com/go/datacatalog/lineage/apiv1",
+"description": "Data Lineage API",
+"language": "Go",
+"client_library_type": "generated",
+"docs_url": "https://cloud.google.com/go/docs/reference/cloud.google.com/go/datacatalog/latest/lineage/apiv1",
+"release_level": "beta",
+"library_type": "GAPIC_AUTO"
+},
 "cloud.google.com/go/dataflow/apiv1beta3": {
 "distribution_name": "cloud.google.com/go/dataflow/apiv1beta3",
 "description": "Dataflow API",

@@ -710,6 +719,15 @@
 "release_level": "beta",
 "library_type": "GAPIC_AUTO"
 },
+"cloud.google.com/go/discoveryengine/apiv1beta": {
+"distribution_name": "cloud.google.com/go/discoveryengine/apiv1beta",
+"description": "Discovery Engine API",
+"language": "Go",
+"client_library_type": "generated",
+"docs_url": "https://cloud.google.com/go/docs/reference/cloud.google.com/go/discoveryengine/latest/apiv1beta",
+"release_level": "beta",
+"library_type": "GAPIC_AUTO"
+},
 "cloud.google.com/go/dlp/apiv2": {
 "distribution_name": "cloud.google.com/go/dlp/apiv2",
 "description": "Cloud Data Loss Prevention (DLP) API",

@@ -1079,6 +1097,15 @@
 "release_level": "beta",
 "library_type": "GAPIC_AUTO"
 },
+"cloud.google.com/go/maps/mapsplatformdatasets/apiv1alpha": {
+"distribution_name": "cloud.google.com/go/maps/mapsplatformdatasets/apiv1alpha",
+"description": "Maps Platform Datasets API",
+"language": "Go",
+"client_library_type": "generated",
+"docs_url": "https://cloud.google.com/go/docs/reference/cloud.google.com/go/maps/latest/mapsplatformdatasets/apiv1alpha",
+"release_level": "alpha",
+"library_type": "GAPIC_AUTO"
+},
 "cloud.google.com/go/maps/routing/apiv2": {
 "distribution_name": "cloud.google.com/go/maps/routing/apiv2",
 "description": "Routes API",
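The `.repo-metadata-full.json` hunks above add entries keyed by Go import path. Each entry is a flat object with the same set of keys.

The Go sketch below decodes such a file with `encoding/json` and lists the packages at a given `release_level`. This could be used, for example, to spot pre-GA clients pulled in by a vendor update. It assumes only the keys visible in this diff. The `libraryMeta` type and `librariesAtLevel` helper are hypothetical and are not exported by `cloud.google.com/go`.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// libraryMeta mirrors the per-package keys shown in .repo-metadata-full.json.
// Illustrative only; not a type exported by cloud.google.com/go.
type libraryMeta struct {
	DistributionName  string `json:"distribution_name"`
	Description       string `json:"description"`
	Language          string `json:"language"`
	ClientLibraryType string `json:"client_library_type"`
	DocsURL           string `json:"docs_url"`
	ReleaseLevel      string `json:"release_level"`
	LibraryType       string `json:"library_type"`
}

// librariesAtLevel decodes the metadata JSON and returns the sorted import
// paths whose release_level equals level.
func librariesAtLevel(data []byte, level string) ([]string, error) {
	var all map[string]libraryMeta
	if err := json.Unmarshal(data, &all); err != nil {
		return nil, err
	}
	var out []string
	for path, m := range all {
		if m.ReleaseLevel == level {
			out = append(out, path)
		}
	}
	sort.Strings(out) // map iteration order is random; sort for stable output
	return out, nil
}

func main() {
	data := []byte(`{
  "cloud.google.com/go/datacatalog/lineage/apiv1": {
    "description": "Data Lineage API",
    "release_level": "beta"
  },
  "cloud.google.com/go/maps/mapsplatformdatasets/apiv1alpha": {
    "description": "Maps Platform Datasets API",
    "release_level": "alpha"
  }
}`)
	alpha, err := librariesAtLevel(data, "alpha")
	if err != nil {
		panic(err)
	}
	fmt.Println(alpha)
}
```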

27 vendor/cloud.google.com/go/internal/README.md (generated, vendored)

@@ -15,4 +15,29 @@ One day, we may want to create individual `.repo-metadata.json` files next to
 each package, which is the pattern followed by some other languages. External
 tools would then talk to pkg.go.dev or some other service to get the overall
 list of packages and use the `.repo-metadata.json` files to get the additional
 metadata required. For now, `.repo-metadata-full.json` includes everything.
+
+## cloudbuild.yaml
+
+To kick off a build locally run from the repo root:
+
+```bash
+gcloud builds submit --project=cloud-devrel-kokoro-resources --config=internal/cloudbuild.yaml
+```
+
+### Updating OwlBot SHA
+
+You may want to manually update the which version of the post processor will be
+used -- to do this you need to update the SHA in the OwlBot lock file. Start by
+running the following commands:
+
+```bash
+docker pull gcr.io/cloud-devrel-public-resources/owlbot-go:latest
+docker inspect --format='{{index .RepoDigests 0}}' gcr.io/cloud-devrel-public-resources/owlbot-go:latest
+```
+
+This will give you a SHA. You can use this value to update the value in
+`.github/.OwlBot.lock.yaml`.
+
+*Note*: OwlBot will eventually open a pull request to update this value if it
+discovers a new version of the container.

25 vendor/cloud.google.com/go/internal/cloudbuild.yaml (generated, vendored, Normal file)

@@ -0,0 +1,25 @@
+# Copyright 2023 Google LLC
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
+# note: /workspace is a special directory in the docker image where all the files in this folder
+# get placed on your behalf
+
+timeout: 7200s # 2 hours
+steps:
+- name: gcr.io/cloud-builders/docker
+args: ['build', '-t', 'gcr.io/cloud-devrel-public-resources/owlbot-go', '-f', 'postprocessor/Dockerfile', '.']
+dir: internal
+
+images:
+- gcr.io/cloud-devrel-public-resources/owlbot-go:latest

13 vendor/cloud.google.com/go/storage/CHANGES.md (generated, vendored)

@@ -1,6 +1,19 @@
 # Changes


+## [1.29.0](https://github.com/googleapis/google-cloud-go/compare/storage/v1.28.1...storage/v1.29.0) (2023-01-19)
+
+
+### Features
+
+* **storage:** Add ComponentCount as part of ObjectAttrs ([#7230](https://github.com/googleapis/google-cloud-go/issues/7230)) ([a19bca6](https://github.com/googleapis/google-cloud-go/commit/a19bca60704b4fbb674cf51d828580aa653c8210))
+* **storage:** Add REST client ([06a54a1](https://github.com/googleapis/google-cloud-go/commit/06a54a16a5866cce966547c51e203b9e09a25bc0))
+
+
+### Documentation
+
+* **storage/internal:** Corrected typos and spellings ([7357077](https://github.com/googleapis/google-cloud-go/commit/735707796d81d7f6f32fc3415800c512fe62297e))
+
 ## [1.28.1](https://github.com/googleapis/google-cloud-go/compare/storage/v1.28.0...storage/v1.28.1) (2022-12-02)

9 vendor/cloud.google.com/go/storage/bucket.go (generated, vendored)

@@ -35,6 +35,7 @@ import (
 raw "google.golang.org/api/storage/v1"
 dpb "google.golang.org/genproto/googleapis/type/date"
 "google.golang.org/protobuf/proto"
+"google.golang.org/protobuf/types/known/durationpb"
 )

 // BucketHandle provides operations on a Google Cloud Storage bucket.

@@ -1389,12 +1390,12 @@ func (rp *RetentionPolicy) toProtoRetentionPolicy() *storagepb.Bucket_RetentionP
 }
 // RetentionPeriod must be greater than 0, so if it is 0, the user left it
 // unset, and so we should not send it in the request i.e. nil is sent.
-var period *int64
+var dur *durationpb.Duration
 if rp.RetentionPeriod != 0 {
-period = proto.Int64(int64(rp.RetentionPeriod / time.Second))
+dur = durationpb.New(rp.RetentionPeriod)
 }
 return &storagepb.Bucket_RetentionPolicy{
-RetentionPeriod: period,
+RetentionDuration: dur,
 }
 }

@@ -1418,7 +1419,7 @@ func toRetentionPolicyFromProto(rp *storagepb.Bucket_RetentionPolicy) *Retention
 return nil
 }
 return &RetentionPolicy{
-RetentionPeriod: time.Duration(rp.GetRetentionPeriod()) * time.Second,
+RetentionPeriod: rp.GetRetentionDuration().AsDuration(),
 EffectiveTime: rp.GetEffectiveTime().AsTime(),
 IsLocked: rp.GetIsLocked(),
 }

36 vendor/cloud.google.com/go/storage/grpc_client.go (generated, vendored)

@@ -34,7 +34,6 @@ import (
 "google.golang.org/grpc/codes"
 "google.golang.org/grpc/metadata"
 "google.golang.org/grpc/status"
-"google.golang.org/protobuf/proto"
 fieldmaskpb "google.golang.org/protobuf/types/known/fieldmaskpb"
 )


@@ -1496,11 +1495,16 @@ func (w *gRPCWriter) startResumableUpload() error {
 if err != nil {
 return err
 }
+req := &storagepb.StartResumableWriteRequest{
+WriteObjectSpec: spec,
+CommonObjectRequestParams: toProtoCommonObjectRequestParams(w.encryptionKey),
+}
+// TODO: Currently the checksums are only sent on the request to initialize
+// the upload, but in the future, we must also support sending it
+// on the *last* message of the stream.
+req.ObjectChecksums = toProtoChecksums(w.sendCRC32C, w.attrs)
 return run(w.ctx, func() error {
-upres, err := w.c.raw.StartResumableWrite(w.ctx, &storagepb.StartResumableWriteRequest{
-WriteObjectSpec: spec,
-CommonObjectRequestParams: toProtoCommonObjectRequestParams(w.encryptionKey),
-})
+upres, err := w.c.raw.StartResumableWrite(w.ctx, req)
 w.upid = upres.GetUploadId()
 return err
 }, w.settings.retry, w.settings.idempotent, setRetryHeaderGRPC(w.ctx))

@@ -1585,25 +1589,13 @@ func (w *gRPCWriter) uploadBuffer(recvd int, start int64, doneReading bool) (*st
 WriteObjectSpec: spec,
 }
 req.CommonObjectRequestParams = toProtoCommonObjectRequestParams(w.encryptionKey)
+// For a non-resumable upload, checksums must be sent in this message.
+// TODO: Currently the checksums are only sent on the first message
+// of the stream, but in the future, we must also support sending it
+// on the *last* message of the stream (instead of the first).
+req.ObjectChecksums = toProtoChecksums(w.sendCRC32C, w.attrs)
 }

-// TODO: Currently the checksums are only sent on the first message
-// of the stream, but in the future, we must also support sending it
-// on the *last* message of the stream (instead of the first).
-if w.sendCRC32C {
-req.ObjectChecksums = &storagepb.ObjectChecksums{
-Crc32C: proto.Uint32(w.attrs.CRC32C),
-}
-}
-if len(w.attrs.MD5) != 0 {
-if cs := req.GetObjectChecksums(); cs == nil {
-req.ObjectChecksums = &storagepb.ObjectChecksums{
-Md5Hash: w.attrs.MD5,
-}
-} else {
-cs.Md5Hash = w.attrs.MD5
-}
-}
 }

 err = w.stream.Send(req)

10 vendor/cloud.google.com/go/storage/internal/apiv2/doc.go (generated, vendored)

@@ -1,4 +1,4 @@
-// Copyright 2022 Google LLC
+// Copyright 2023 Google LLC
 //
 // Licensed under the Apache License, Version 2.0 (the "License");
 // you may not use this file except in compliance with the License.

@@ -21,6 +21,11 @@
 //
 // NOTE: This package is in alpha. It is not stable, and is likely to change.
 //
+// # General documentation
+//
+// For information about setting deadlines, reusing contexts, and more
+// please visit https://pkg.go.dev/cloud.google.com/go.
+//
 // # Example usage
 //