---

weight: 3

title: Kubernetes monitoring with VictoriaMetrics Cluster

menu:

docs:

parent: "guides"

weight: 3

aliases:

- /guides/k8s-monitoring-via-vm-cluster.html

---

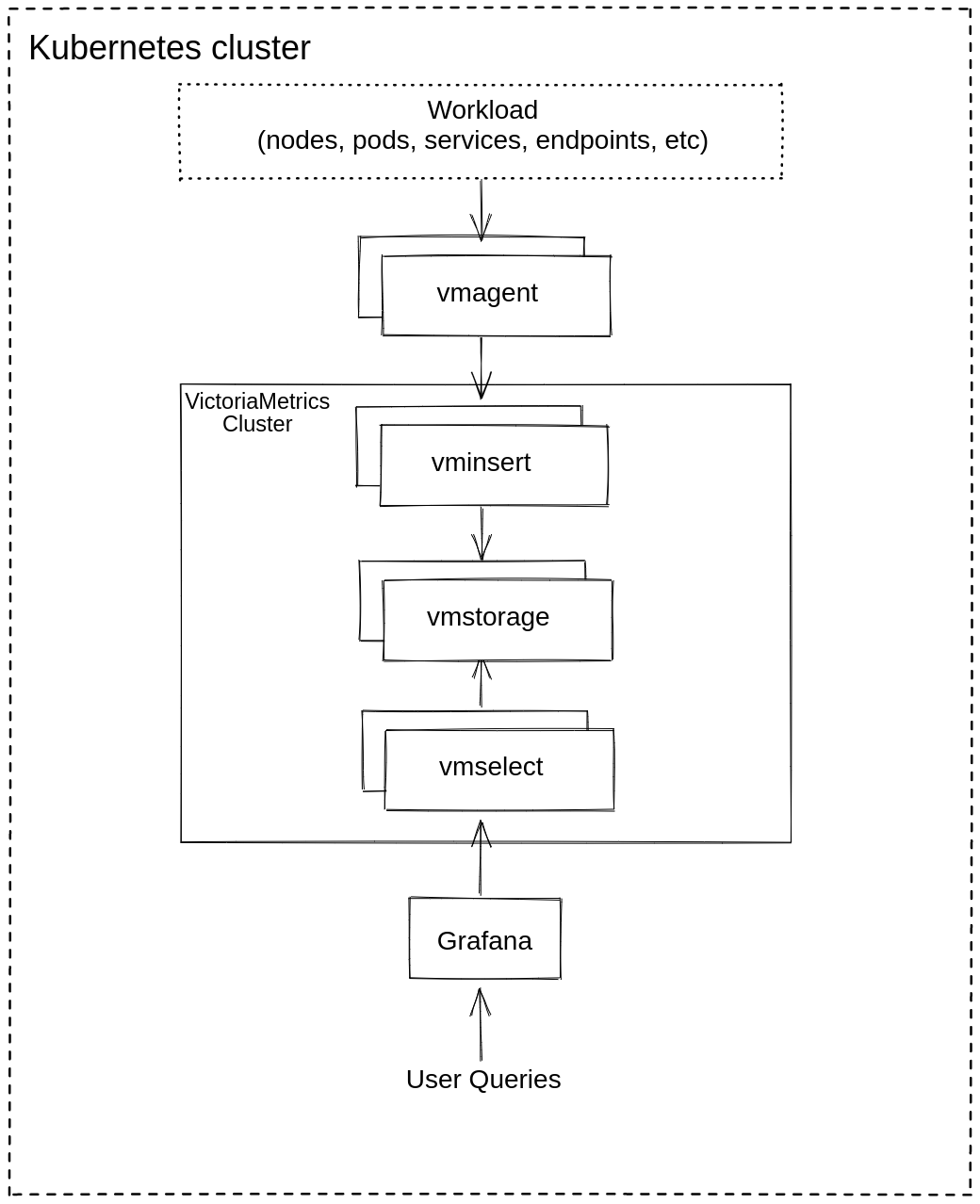

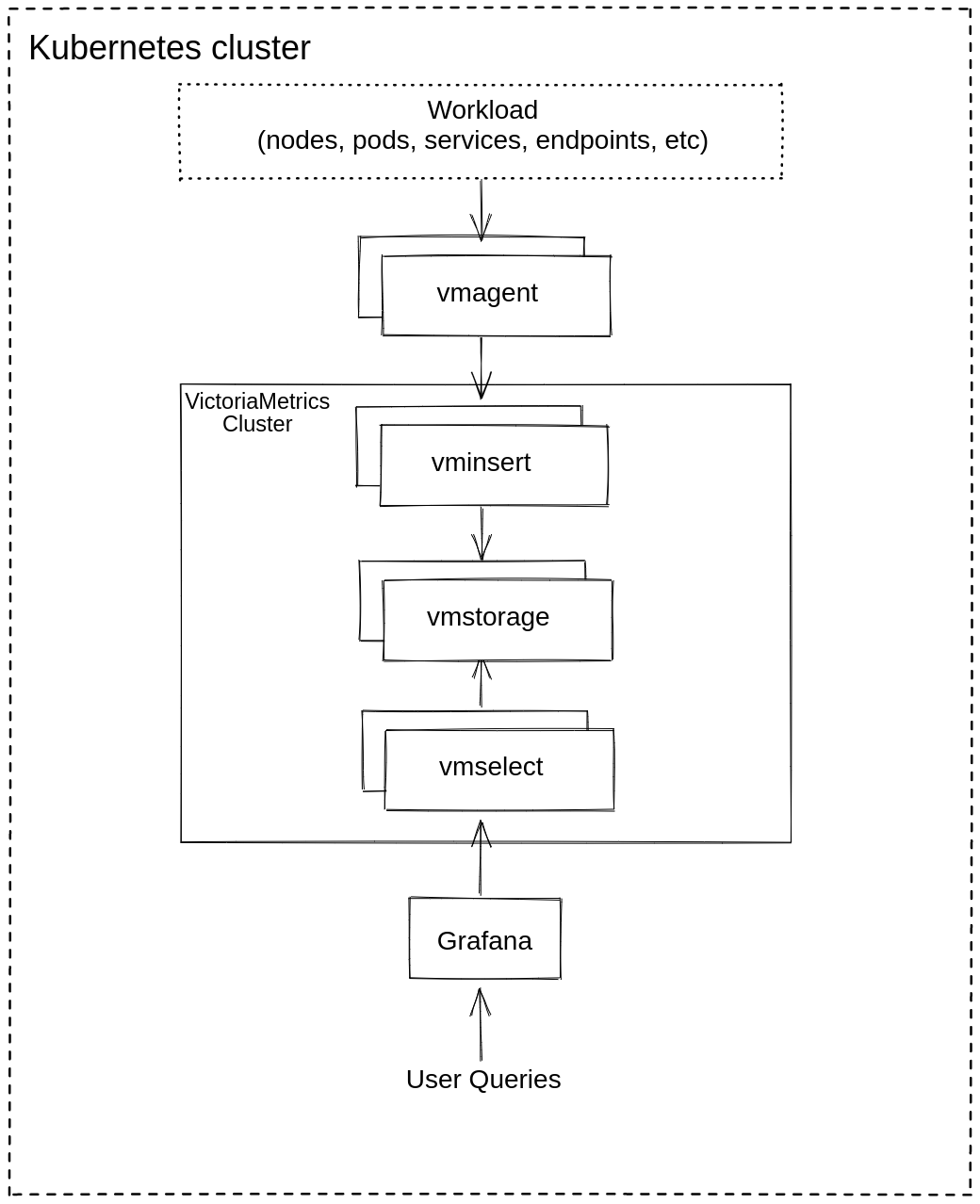

# Kubernetes monitoring with VictoriaMetrics Cluster

**This guide covers:**

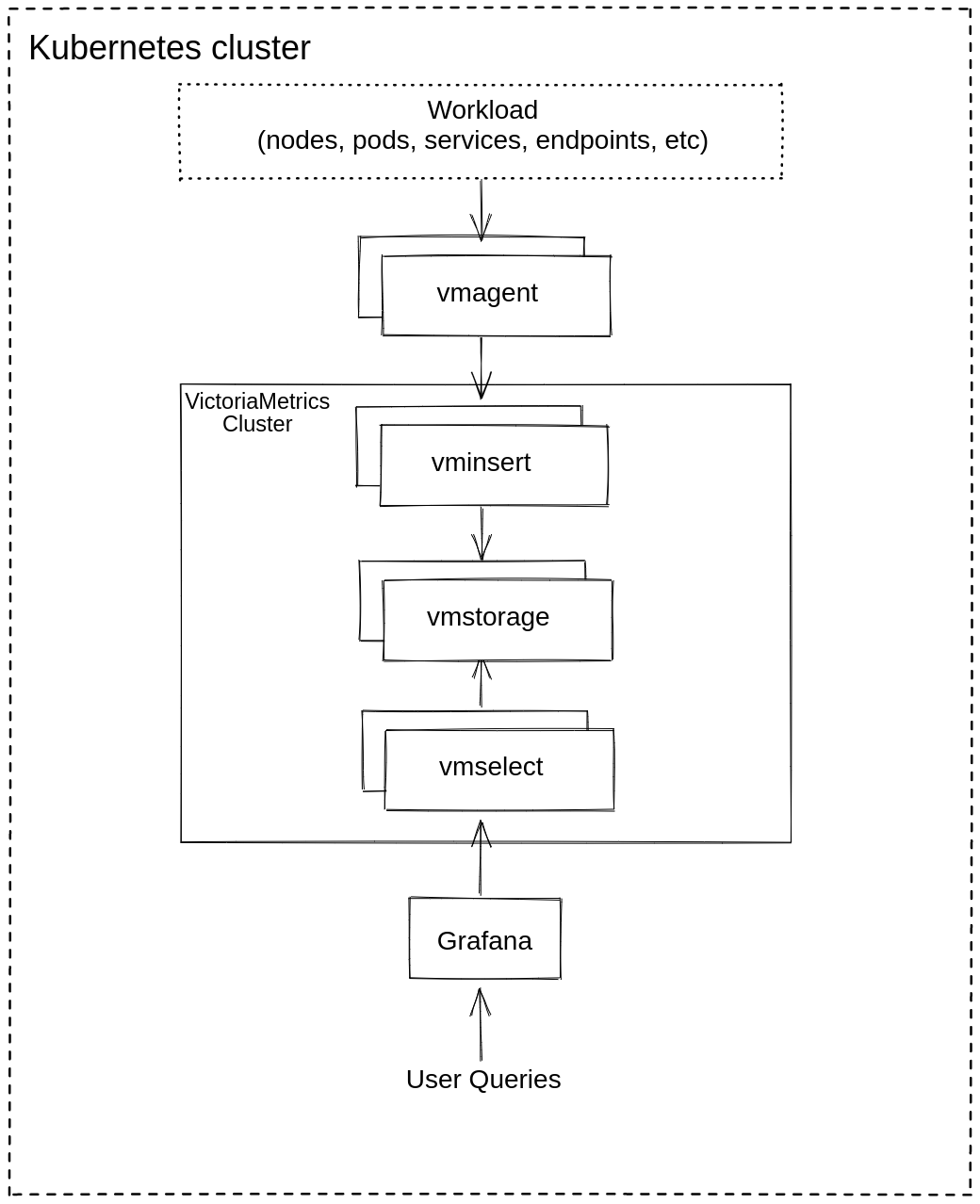

* The setup of a [VictoriaMetrics cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html) in [Kubernetes](https://kubernetes.io/) via Helm charts

* How to scrape metrics from k8s components using service discovery

* How to visualize stored data

* How to store metrics in [VictoriaMetrics](https://victoriametrics.com) tsdb

**Precondition**

We will use:

* [Kubernetes cluster 1.19.9-gke.1900](https://cloud.google.com/kubernetes-engine)

> We use GKE cluster from [GCP](https://cloud.google.com/) but this guide also applies on any Kubernetes cluster. For example [Amazon EKS](https://aws.amazon.com/ru/eks/).

* [Helm 3 ](https://helm.sh/docs/intro/install)

* [kubectl 1.21](https://kubernetes.io/docs/tasks/tools/install-kubectl)

## 1. VictoriaMetrics Helm repository

> For this guide we will use Helm 3 but if you already use Helm 2 please see this [https://github.com/VictoriaMetrics/helm-charts#for-helm-v2](https://github.com/VictoriaMetrics/helm-charts#for-helm-v2)

You need to add the VictoriaMetrics Helm repository to install VictoriaMetrics components. We’re going to use [VictoriaMetrics Cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html). You can do this by running the following command:

```console

helm repo add vm https://victoriametrics.github.io/helm-charts/

```

Update Helm repositories:

```console

helm repo update

```

To verify that everything is set up correctly you may run this command:

```console

helm search repo vm/

```

The expected output is:

```console

NAME CHART VERSION APP VERSION DESCRIPTION

vm/victoria-metrics-agent 0.7.20 v1.62.0 Victoria Metrics Agent - collects metrics from ...

vm/victoria-metrics-alert 0.3.34 v1.62.0 Victoria Metrics Alert - executes a list of giv...

vm/victoria-metrics-auth 0.2.23 1.62.0 Victoria Metrics Auth - is a simple auth proxy ...

vm/victoria-metrics-cluster 0.8.32 1.62.0 Victoria Metrics Cluster version - high-perform...

vm/victoria-metrics-k8s-stack 0.2.9 1.16.0 Kubernetes monitoring on VictoriaMetrics stack....

vm/victoria-metrics-operator 0.1.17 0.16.0 Victoria Metrics Operator

vm/victoria-metrics-single 0.7.5 1.62.0 Victoria Metrics Single version - high-performa...

```

## 2. Install VictoriaMetrics Cluster from the Helm chart

Run this command in your terminal:

```yaml

cat <

* By running `Helm install vmcluster vm/victoria-metrics-cluster` we install [VictoriaMetrics cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html) to default [namespace](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) inside your cluster.

* By adding `podAnnotations: prometheus.io/scrape: "true"` we enable the scraping of metrics from the vmselect, vminsert and vmstorage pods.

* By adding `podAnnotations:prometheus.io/port: "some_port" ` we enable the scraping of metrics from the vmselect, vminsert and vmstorage pods from their ports as well.

As a result of this command you will see the following output:

```console

NAME: vmcluster

LAST DEPLOYED: Thu Jul 1 09:41:57 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Write API:

The Victoria Metrics write api can be accessed via port 8480 with the following DNS name from within your cluster:

vmcluster-victoria-metrics-cluster-vminsert.default.svc.cluster.local

Get the Victoria Metrics insert service URL by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app=vminsert" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace default port-forward $POD_NAME 8480

You need to update your Prometheus configuration file and add the following lines to it:

prometheus.yml

remote_write:

- url: "http:///insert/0/prometheus/"

for example - inside the Kubernetes cluster:

remote_write:

- url: "http://vmcluster-victoria-metrics-cluster-vminsert.default.svc.cluster.local:8480/insert/0/prometheus/"

Read API:

The VictoriaMetrics read api can be accessed via port 8481 with the following DNS name from within your cluster:

vmcluster-victoria-metrics-cluster-vmselect.default.svc.cluster.local

Get the VictoriaMetrics select service URL by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app=vmselect" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace default port-forward $POD_NAME 8481

You will need to specify select service URL in your Grafana:

NOTE: you need to use Prometheus Data Source

Input this URL field in Grafana

http:///select/0/prometheus/

for example - inside the Kubernetes cluster:

http://vmcluster-victoria-metrics-cluster-vmselect.default.svc.cluster.local:8481/select/0/prometheus/"

```

For us it’s important to remember the url for the datasource (copy lines from the output).

Verify that [VictoriaMetrics cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html) pods are up and running by executing the following command:

```console

kubectl get pods

```

The expected output is:

```console

NAME READY STATUS RESTARTS AGE

vmcluster-victoria-metrics-cluster-vminsert-689cbc8f55-95szg 1/1 Running 0 16m

vmcluster-victoria-metrics-cluster-vminsert-689cbc8f55-f852l 1/1 Running 0 16m

vmcluster-victoria-metrics-cluster-vmselect-977d74cdf-bbgp5 1/1 Running 0 16m

vmcluster-victoria-metrics-cluster-vmselect-977d74cdf-vzp6z 1/1 Running 0 16m

vmcluster-victoria-metrics-cluster-vmstorage-0 1/1 Running 0 16m

vmcluster-victoria-metrics-cluster-vmstorage-1 1/1 Running 0 16m

```

## 3. Install vmagent from the Helm chart

To scrape metrics from Kubernetes with a [VictoriaMetrics cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html) we need to install [vmagent](https://docs.victoriametrics.com/vmagent.html) with additional configuration. To do so, please run these commands in your terminal:

```yaml

helm install vmagent vm/victoria-metrics-agent -f https://docs.victoriametrics.com/guides/guide-vmcluster-vmagent-values.yaml

```

Here is full file content `guide-vmcluster-vmagent-values.yaml`

```yaml

remoteWriteUrls:

- http://vmcluster-victoria-metrics-cluster-vminsert.default.svc.cluster.local:8480/insert/0/prometheus/

config:

global:

scrape_interval: 10s

scrape_configs:

- job_name: vmagent

static_configs:

- targets: ["localhost:8429"]

- job_name: "kubernetes-apiservers"

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels:

[

__meta_kubernetes_namespace,

__meta_kubernetes_service_name,

__meta_kubernetes_endpoint_port_name,

]

action: keep

regex: default;kubernetes;https

- job_name: "kubernetes-nodes"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/$1/proxy/metrics

- job_name: "kubernetes-nodes-cadvisor"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/$1/proxy/metrics/cadvisor

metric_relabel_configs:

- action: replace

source_labels: [pod]

regex: '(.+)'

target_label: pod_name

replacement: '${1}'

- action: replace

source_labels: [container]

regex: '(.+)'

target_label: container_name

replacement: '${1}'

- action: replace

target_label: name

replacement: k8s_stub

- action: replace

source_labels: [id]

regex: '^/system\.slice/(.+)\.service$'

target_label: systemd_service_name

replacement: '${1}'

- job_name: "kubernetes-service-endpoints"

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- action: drop

source_labels: [__meta_kubernetes_pod_container_init]

regex: true

- action: keep_if_equal

source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_port, __meta_kubernetes_pod_container_port_number]

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels:

[

__address__,

__meta_kubernetes_service_annotation_prometheus_io_port,

]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

- source_labels: [__meta_kubernetes_pod_node_name]

action: replace

target_label: kubernetes_node

- job_name: "kubernetes-service-endpoints-slow"

scrape_interval: 5m

scrape_timeout: 30s

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- action: drop

source_labels: [__meta_kubernetes_pod_container_init]

regex: true

- action: keep_if_equal

source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_port, __meta_kubernetes_pod_container_port_number]

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_scrape_slow]

action: keep

regex: true

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels:

[

__address__,

__meta_kubernetes_service_annotation_prometheus_io_port,

]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

- source_labels: [__meta_kubernetes_pod_node_name]

action: replace

target_label: kubernetes_node

- job_name: "kubernetes-services"

metrics_path: /probe

params:

module: [http_2xx]

kubernetes_sd_configs:

- role: service

relabel_configs:

- source_labels:

[__meta_kubernetes_service_annotation_prometheus_io_probe]

action: keep

regex: true

- source_labels: [__address__]

target_label: __param_target

- target_label: __address__

replacement: blackbox

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

target_label: kubernetes_name

- job_name: "kubernetes-pods"

kubernetes_sd_configs:

- role: pod

relabel_configs:

- action: drop

source_labels: [__meta_kubernetes_pod_container_init]

regex: true

- action: keep_if_equal

source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_port, __meta_kubernetes_pod_container_port_number]

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels:

[__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

```

* By adding `remoteWriteUrls: - http://vmcluster-victoria-metrics-cluster-vminsert.default.svc.cluster.local:8480/insert/0/prometheus/` we configuring [vmagent](https://docs.victoriametrics.com/vmagent.html) to write scraped metrics into the `vmselect service`.

* The second part of this yaml file is needed to add the `metric_relabel_configs` section that helps us to show Kubernetes metrics on the Grafana dashboard.

Verify that `vmagent`'s pod is up and running by executing the following command:

```console

kubectl get pods | grep vmagent

```

The expected output is:

```console

vmagent-victoria-metrics-agent-69974b95b4-mhjph 1/1 Running 0 11m

```

## 4. Install and connect Grafana to VictoriaMetrics with Helm

Add the Grafana Helm repository.

```console

helm repo add grafana https://grafana.github.io/helm-charts

helm repo update

```

See more information on Grafana ArtifactHUB [https://artifacthub.io/packages/helm/grafana/grafana](https://artifacthub.io/packages/helm/grafana/grafana)

To install the chart with the release name `my-grafana`, add the VictoriaMetrics datasource with official dashboard and the Kubernetes dashboard:

```yaml

cat <

By running this command we:

* Install Grafana from the Helm repository.

* Provision a VictoriaMetrics data source with the url from the output above which we remembered.

* Add this [https://grafana.com/grafana/dashboards/11176-victoriametrics-cluster/](https://grafana.com/grafana/dashboards/11176-victoriametrics-cluster/) dashboard for [VictoriaMetrics Cluster](https://docs.victoriametrics.com/Cluster-VictoriaMetrics.html).

* Add this [https://grafana.com/grafana/dashboards/12683-victoriametrics-vmagent/](https://grafana.com/grafana/dashboards/12683-victoriametrics-vmagent/) dashboard for [VictoriaMetrics Agent](https://docs.victoriametrics.com/vmagent.html).

* Add this [https://grafana.com/grafana/dashboards/14205-kubernetes-cluster-monitoring-via-prometheus/](https://grafana.com/grafana/dashboards/14205-kubernetes-cluster-monitoring-via-prometheus/) dashboard to see Kubernetes cluster metrics.

Please see the output log in your terminal. Copy, paste and run these commands.

The first one will show `admin` password for the Grafana admin.

The second and the third will forward Grafana to `127.0.0.1:3000`:

```console

kubectl get secret --namespace default my-grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=grafana,app.kubernetes.io/instance=my-grafana" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace default port-forward $POD_NAME 3000

```

## 5. Check the result you obtained in your browser

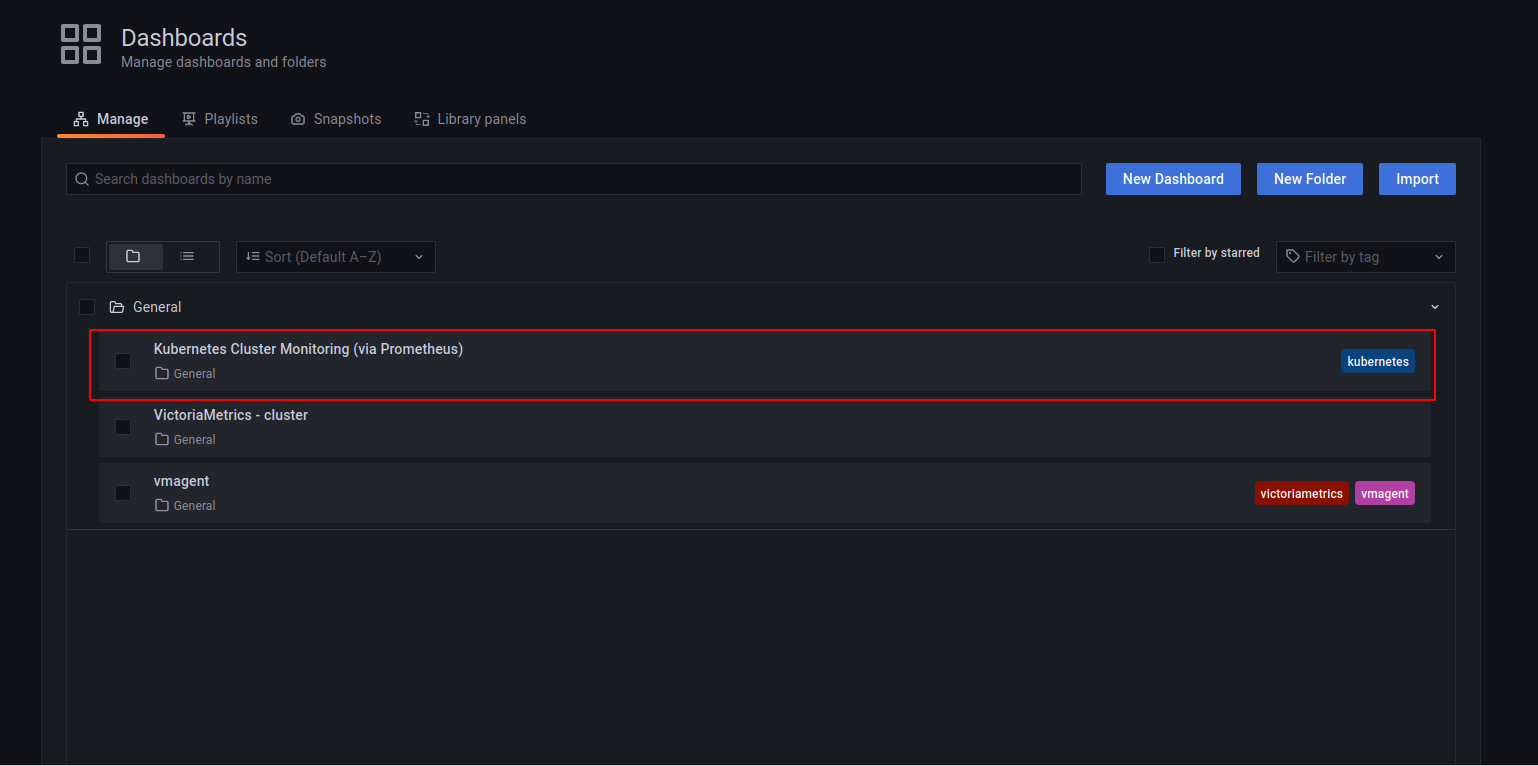

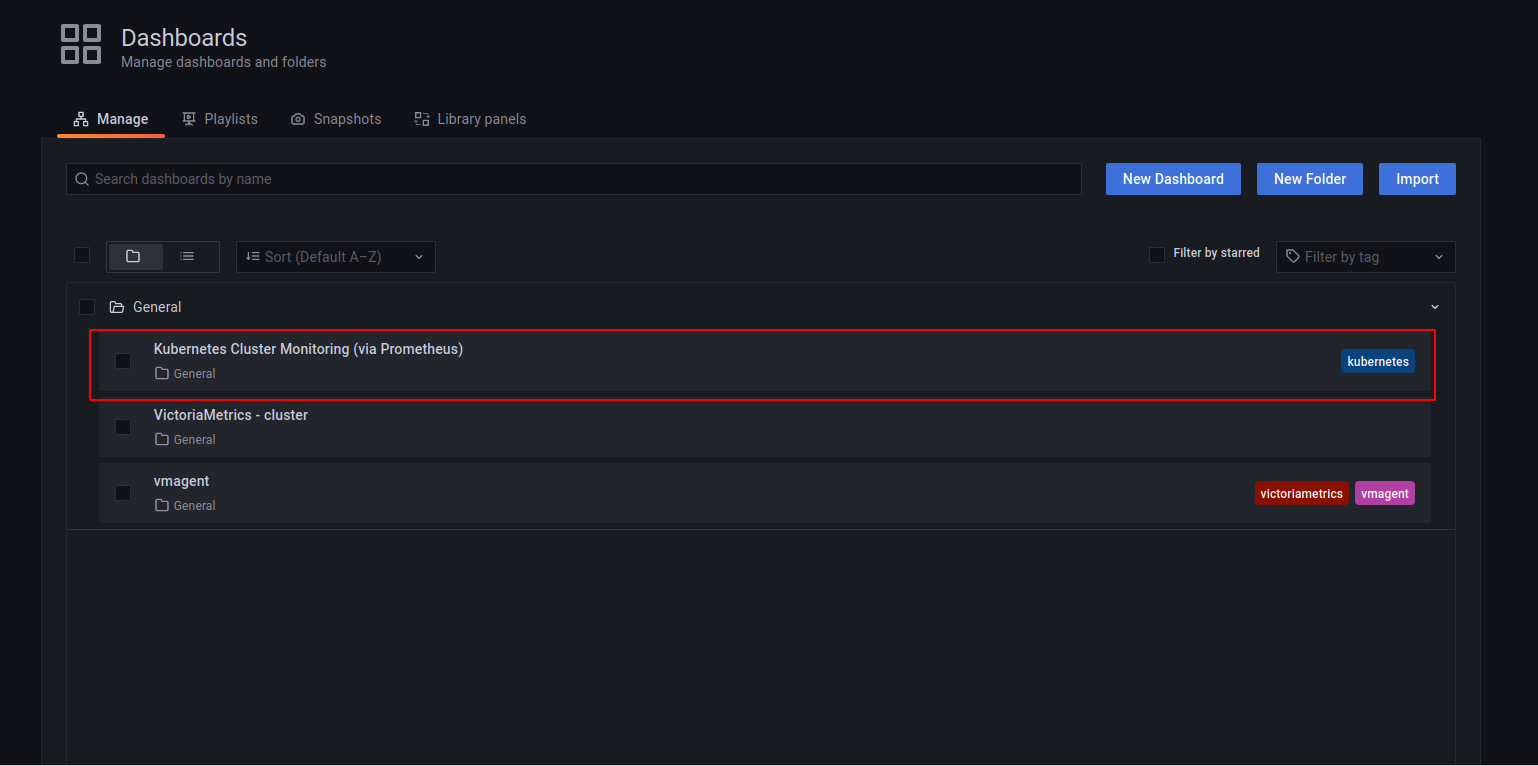

To check that [VictoriaMetrics](https://victoriametrics.com) collects metrics from k8s cluster open in browser [http://127.0.0.1:3000/dashboards](http://127.0.0.1:3000/dashboards) and choose the `Kubernetes Cluster Monitoring (via Prometheus)` dashboard. Use `admin` for login and `password` that you previously got from kubectl.

You will see something like this:

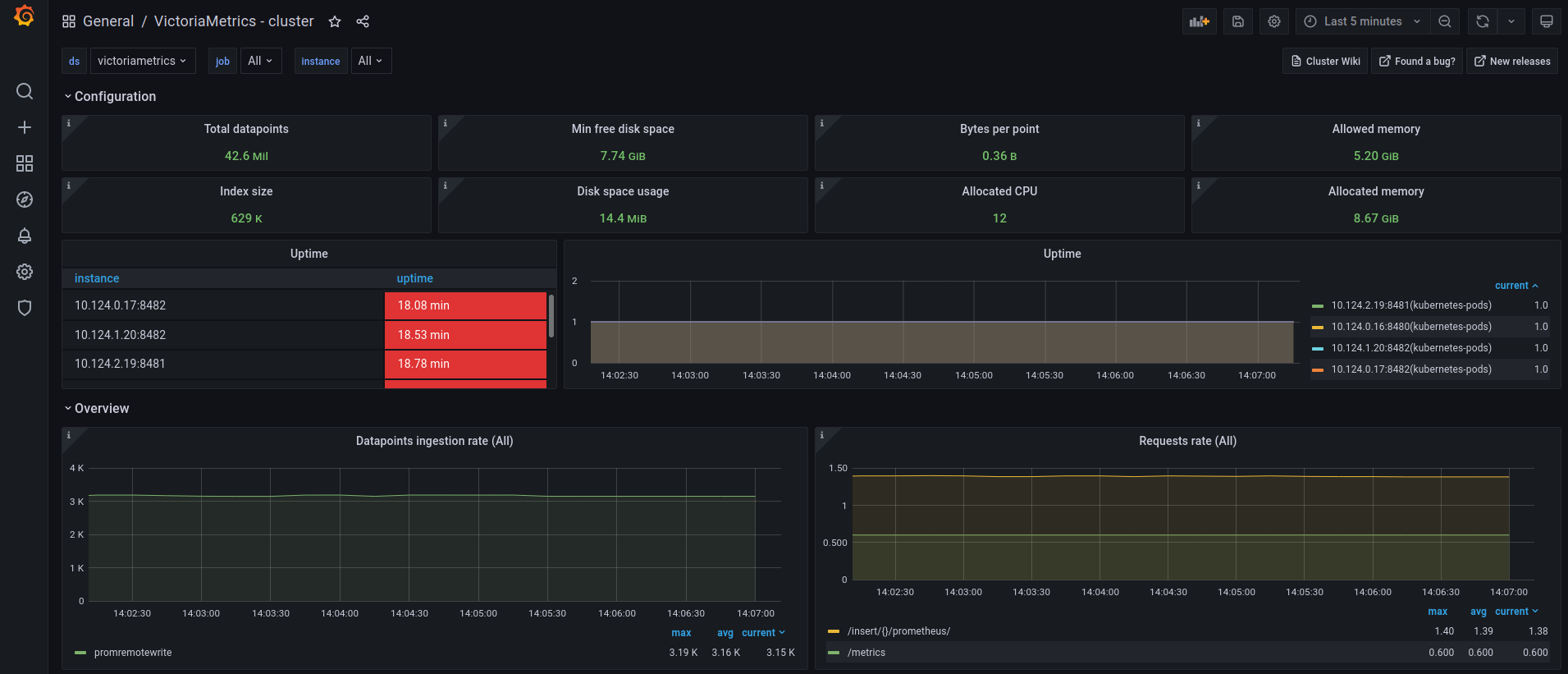

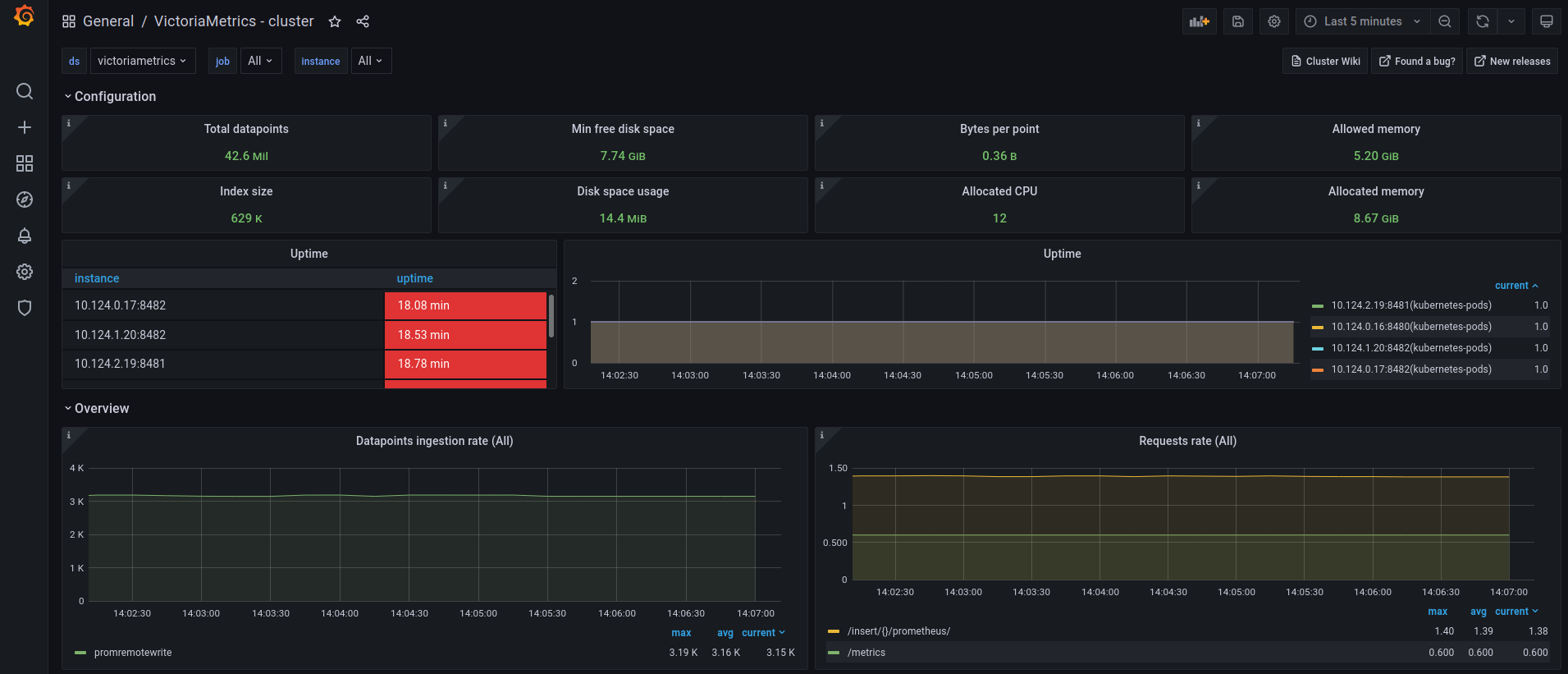

The VictoriaMetrics dashboard is also available to use:

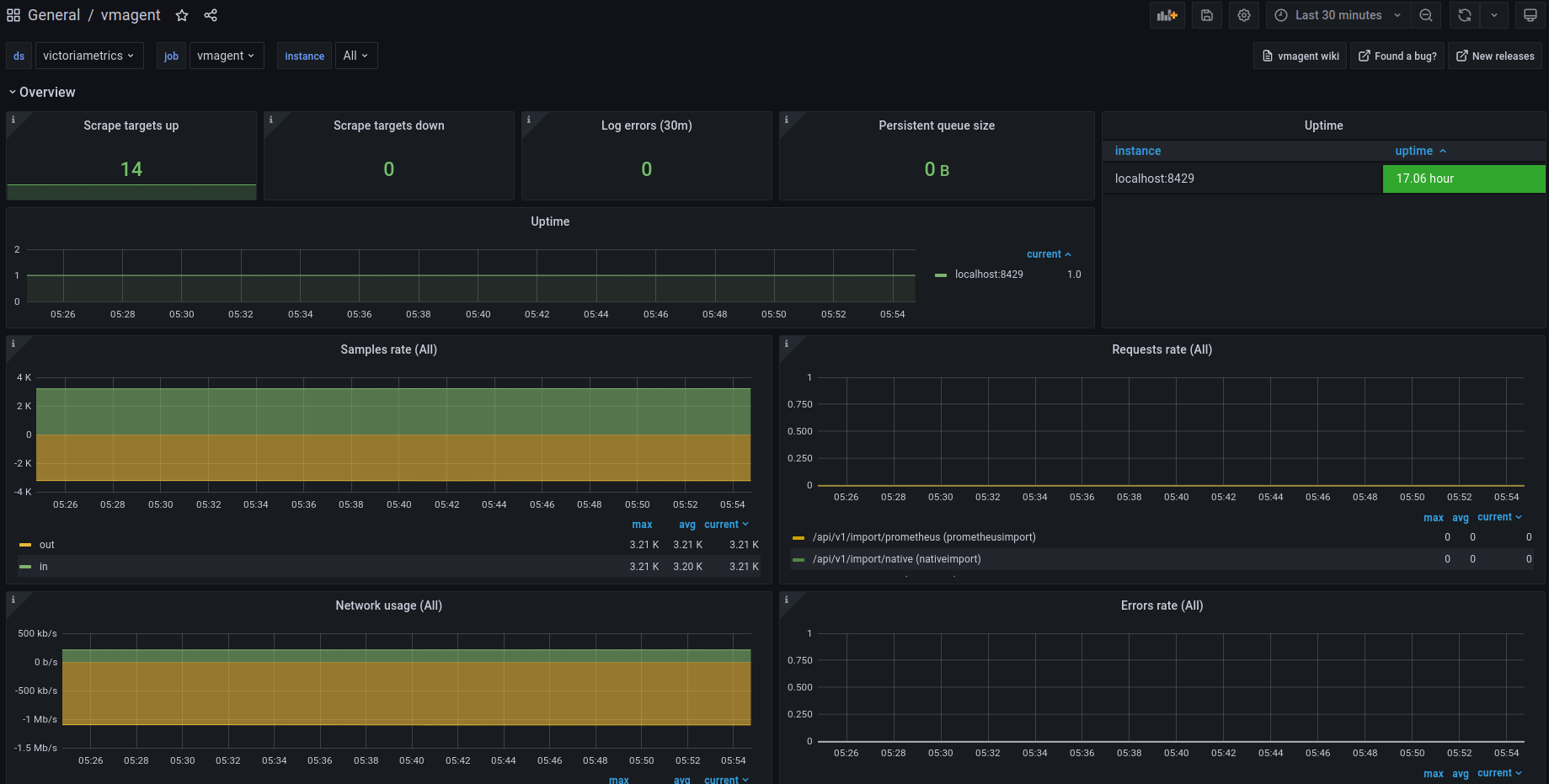

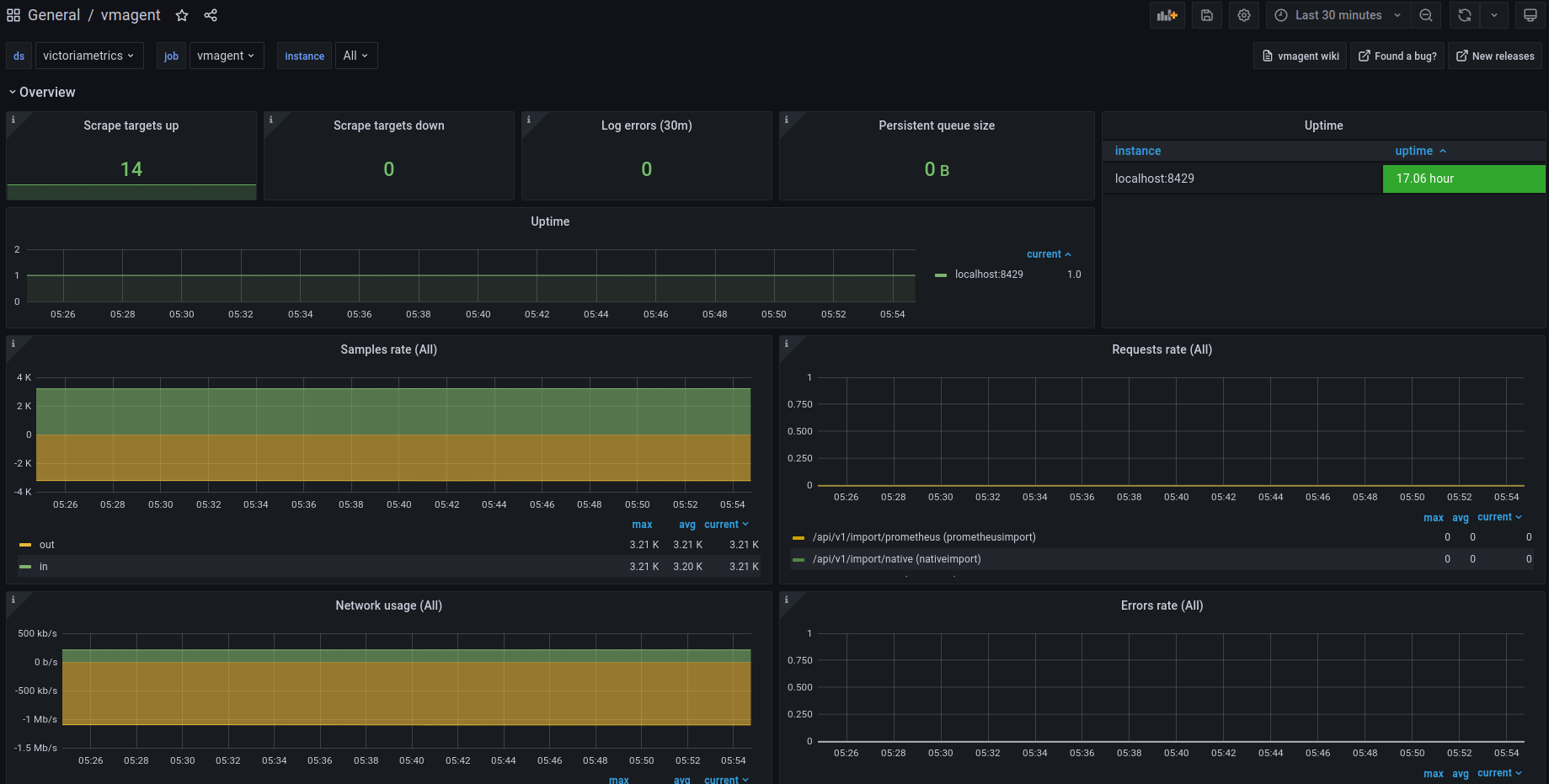

vmagent has it’s own dashboard:

## 6. Final thoughts

* We set up TimeSeries Database for your Kubernetes cluster.

* We collected metrics from all running pods,nodes, … and stored them in a VictoriaMetrics database.

* We visualized resources used in the Kubernetes cluster by using Grafana dashboards.