
| weight | title | menu | aliases |
|---|---|---|---|
| 5 | Migration from Prometheus | | |

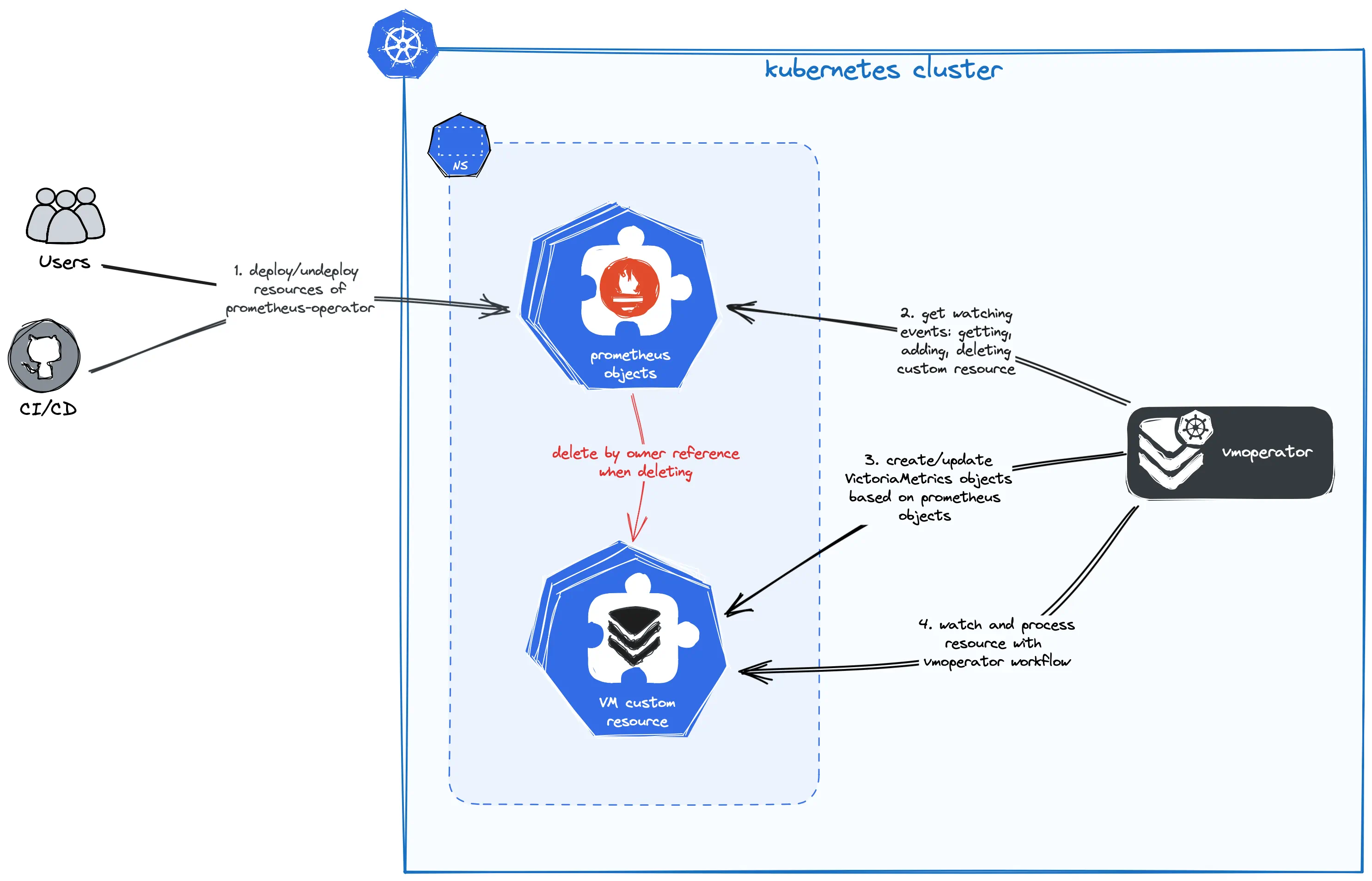

The design and implementation are inspired by prometheus-operator. It's a great tool for managing the monitoring configuration of your applications. VictoriaMetrics operator is API-compatible with it.

So you can use familiar CRD objects: ServiceMonitor, PodMonitor, PrometheusRule, Probe and AlertmanagerConfig.

Or you can use VictoriaMetrics CRDs:

- VMServiceScrape (instead of ServiceMonitor) - defines scraping metrics configuration from pods backed by services. See details.
- VMPodScrape (instead of PodMonitor) - defines scraping metrics configuration from pods. See details.
- VMRule (instead of PrometheusRule) - defines alerting or recording rules. See details.
- VMProbe (instead of Probe) - defines a probing configuration for targets with blackbox exporter. See details.
- VMAlertmanagerConfig (instead of AlertmanagerConfig) - defines a configuration for AlertManager. See details.
- VMScrapeConfig (instead of ScrapeConfig) - defines a scrape config using any of the service discovery options supported in VictoriaMetrics.

Note that Prometheus CRDs are not supplied with the VictoriaMetrics operator, so you need to install them separately.

VictoriaMetrics operator supports conversion from Prometheus CRDs of version monitoring.coreos.com/v1 for kinds ServiceMonitor, PodMonitor, PrometheusRule, Probe and version monitoring.coreos.com/v1alpha1 for kind AlertmanagerConfig.
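To make the conversion concrete, here is a minimal sketch of a ServiceMonitor and roughly what a converted VMServiceScrape could look like. The object name, namespace, labels and endpoint values are illustrative assumptions, and the second document is not actual operator output; fields the operator adds or renames may differ.

```yaml
# Source object managed by prometheus-operator (illustrative values)
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app        # hypothetical name
  namespace: default       # hypothetical namespace
  labels:
    team: backend
spec:
  selector:
    matchLabels:
      app: example-app
  endpoints:
    - port: web
      path: /metrics
      interval: 30s
---
# Assumed shape of the converted object, created in the same namespace
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMServiceScrape
metadata:
  name: example-app
  namespace: default
  labels:
    team: backend
spec:
  selector:
    matchLabels:
      app: example-app
  endpoints:
    - port: web
      path: /metrics
      interval: 30s
```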

The default behavior of the operator is as follows:

- It converts all existing Prometheus ServiceMonitor, PodMonitor, PrometheusRule, Probe and ScrapeConfig objects into corresponding VictoriaMetrics Operator objects.
- It syncs updates (including labels) from Prometheus ServiceMonitor, PodMonitor, PrometheusRule, Probe and ScrapeConfig objects to corresponding VictoriaMetrics Operator objects.
- It DOES NOT delete converted objects after original ones are deleted.

With this configuration, removing prometheus-operator API objects won't delete any converted objects. So you can safely migrate or run two operators at the same time.

You can change the default behavior with operator configuration - see details below.

Objects conversion

By default, the vmoperator converts all existing prometheus-operator API objects into corresponding VictoriaMetrics Operator objects (see above), i.e. creates resources of VictoriaMetrics similar to Prometheus resources in the same namespace.

You can control this behaviour by setting env variables for the operator:

    # disable conversion for each object
    VM_ENABLEDPROMETHEUSCONVERTER_PODMONITOR=false
    VM_ENABLEDPROMETHEUSCONVERTER_SERVICESCRAPE=false
    VM_ENABLEDPROMETHEUSCONVERTER_PROMETHEUSRULE=false
    VM_ENABLEDPROMETHEUSCONVERTER_PROBE=false
    VM_ENABLEDPROMETHEUSCONVERTER_SCRAPECONFIG=false
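If the operator is deployed from plain manifests, these variables go on the operator container. The fragment below is a sketch only: the Deployment name, namespace and container name are hypothetical and need to match your installation, and it shows just the fields relevant here (for example as a patch), not a complete Deployment.

```yaml
# Fragment of an operator Deployment; names are hypothetical
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vm-operator          # hypothetical Deployment name
  namespace: monitoring      # hypothetical namespace
spec:
  template:
    spec:
      containers:
        - name: operator     # hypothetical container name
          env:
            # Env values must be strings, hence the quotes
            - name: VM_ENABLEDPROMETHEUSCONVERTER_PODMONITOR
              value: "false"
            - name: VM_ENABLEDPROMETHEUSCONVERTER_PROBE
              value: "false"
```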

For the victoria-metrics-operator helm chart, you can use the following values:

    # values.yaml
    # ...
    operator:
      # -- By default, operator converts prometheus-operator objects.
      disable_prometheus_converter: true
    # ...

Otherwise, VictoriaMetrics Operator will try to discover prometheus-operator API objects and convert them.

For more information about the operator's workflow, see this doc.

Deletion synchronization

By default, the operator doesn't delete converted objects after the original ones are deleted. To change this behaviour, configure adding OwnerReferences to converted objects with the following operator parameter:

    VM_ENABLEDPROMETHEUSCONVERTEROWNERREFERENCES=true

For the victoria-metrics-operator helm chart, you can use the following values:

    # values.yaml
    # ...
    operator:
      # -- Enables ownership reference for converted prometheus-operator objects,
      # it will remove corresponding victoria-metrics objects when the prometheus one is deleted.
      enable_converter_ownership: true
    # ...

Converted objects will be linked to the original ones and will be deleted by kubernetes after the original ones are deleted.
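As a rough illustration of that link, a converted object would carry a standard Kubernetes owner reference pointing at its source object. The names and the UID below are placeholders, and the exact set of fields the operator writes is an assumption.

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMServiceScrape
metadata:
  name: example-app          # hypothetical name
  namespace: default         # hypothetical namespace
  ownerReferences:
    # Points at the original prometheus-operator object
    - apiVersion: monitoring.coreos.com/v1
      kind: ServiceMonitor
      name: example-app
      # Placeholder; the real value is the UID of the source object
      uid: 00000000-0000-0000-0000-000000000000
spec:
  endpoints: []
```

With such a reference in place, Kubernetes garbage collection removes the VMServiceScrape once the ServiceMonitor it points to is gone.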

Update synchronization

Conversion of api objects can be controlled by annotations, added to VMObjects.

Annotation operator.victoriametrics.com/ignore-prometheus-updates controls updates from Prometheus api objects.

By default, it is set to disabled. Set it to enabled and all updates from Prometheus api objects will be ignored.

Example:

    apiVersion: operator.victoriametrics.com/v1beta1
    kind: VMServiceScrape
    metadata:
      annotations:
        meta.helm.sh/release-name: prometheus
        operator.victoriametrics.com/ignore-prometheus-updates: enabled
      labels:
        release: prometheus
      name: prometheus-monitor
    spec:
      endpoints: []

Annotation operator.victoriametrics.com/ignore-prometheus-updates can be set on any resource that is created as a result of conversion.

The annotation doesn't make sense for VMStaticScrape and VMNodeScrape because these objects are not created as a result of conversion.

Labels and annotations synchronization

Conversion of api objects can be controlled by annotations, added to VMObjects.

Annotation operator.victoriametrics.com/merge-meta-strategy controls syncing of metadata labels and annotations between VMObjects and Prometheus api objects during updates to Prometheus objects.

By default, it is set to prefer-prometheus: annotations and labels are taken from Prometheus objects, and manually set values are dropped.

You can set it to prefer-victoriametrics. In this case all labels and annotations applied to the Prometheus object will be ignored and the VMObject will use its own values.

Two additional strategies, merge-victoriametrics-priority and merge-prometheus-priority, merge the label sets into one combined label set, with priority given to the side named in the strategy.

Example:

    apiVersion: operator.victoriametrics.com/v1beta1
    kind: VMServiceScrape
    metadata:
      annotations:
        meta.helm.sh/release-name: prometheus
        operator.victoriametrics.com/merge-meta-strategy: prefer-victoriametrics
      labels:
        release: prometheus
      name: prometheus-monitor
    spec:
      endpoints: []

Annotation operator.victoriametrics.com/merge-meta-strategy can be set on any resource that is created as a result of conversion.

The annotation doesn't make sense for VMStaticScrape and VMNodeScrape because these objects are not created as a result of conversion.
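The four strategies can be compared on a small worked example. The YAML below is not an operator schema; it is only a notation for label sets, with illustrative values, and the merged results reflect one reading of the strategy descriptions above (on a key conflict, the side named in the strategy wins).

```yaml
# Labels on the Prometheus object (illustrative)
prometheus-labels:
  release: prometheus
  env: prod
# Labels set manually on the converted VMObject (illustrative)
victoriametrics-labels:
  env: staging
  owner: team-a

# Assumed resulting labels on the VMObject for each strategy
prefer-prometheus:
  release: prometheus
  env: prod
prefer-victoriametrics:
  env: staging
  owner: team-a
merge-prometheus-priority:
  release: prometheus
  env: prod          # conflict resolved in favour of Prometheus
  owner: team-a
merge-victoriametrics-priority:
  release: prometheus
  env: staging       # conflict resolved in favour of VictoriaMetrics
  owner: team-a
```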

You can filter labels for syncing with the operator parameter VM_FILTERPROMETHEUSCONVERTERLABELPREFIXES:

    # it excludes all labels that start with "helm.sh" or "argoproj.io" from synchronization
    VM_FILTERPROMETHEUSCONVERTERLABELPREFIXES=helm.sh,argoproj.io

In the same way, annotations with specified prefixes can be excluded from synchronization with the operator parameter VM_FILTERPROMETHEUSCONVERTERANNOTATIONPREFIXES:

    # it excludes all annotations that start with "helm.sh" or "argoproj.io" from synchronization
    VM_FILTERPROMETHEUSCONVERTERANNOTATIONPREFIXES=helm.sh,argoproj.io
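As a small illustration of prefix filtering, consider a source object with the labels below. The label keys and values are made up for the example; the comment describes the outcome expected from the prefix rule stated above, not captured operator output.

```yaml
# Labels on the source Prometheus object (illustrative)
metadata:
  labels:
    app: example-app
    helm.sh/chart: example-1.0.0     # starts with "helm.sh", excluded
    argoproj.io/example: demo        # hypothetical key, starts with "argoproj.io", excluded
# With VM_FILTERPROMETHEUSCONVERTERLABELPREFIXES=helm.sh,argoproj.io
# only "app: example-app" is expected to be synced to the converted object.
```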

Using converter with ArgoCD

If you use ArgoCD, you can make ArgoCD ignore objects converted from Prometheus CRDs with the operator parameter VM_PROMETHEUSCONVERTERADDARGOCDIGNOREANNOTATIONS.

It helps to properly use the converter with ArgoCD and should help prevent out-of-sync issues with argo-cd based deployments:

    # adds compare-options and sync-options for prometheus objects converted by operator
    VM_PROMETHEUSCONVERTERADDARGOCDIGNOREANNOTATIONS=true
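For orientation, ArgoCD reads compare and sync options from resource annotations. The sketch below shows what such annotations look like on a converted object; the annotation keys are standard ArgoCD ones, but the specific values the operator sets are an assumption here and should be checked against a converted object in your cluster.

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMServiceScrape
metadata:
  name: example-app          # hypothetical name
  annotations:
    # Assumed values: do not report the object as extraneous, and do not prune it
    argocd.argoproj.io/compare-options: IgnoreExtraneous
    argocd.argoproj.io/sync-options: Prune=false
spec:
  endpoints: []
```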

Data migration

You can use vmctl for migrating your data from Prometheus to VictoriaMetrics.

See this doc for more details.

Auto-discovery for prometheus.io annotations

There is a scenario where auto-discovery using prometheus.io annotations (such as prometheus.io/port, prometheus.io/scrape, prometheus.io/path, etc.) is required when migrating from Prometheus instead of manually managing scrape objects.

You can enable this feature using a special scrape object like this:

    apiVersion: operator.victoriametrics.com/v1beta1
    kind: VMServiceScrape
    metadata:
      name: annotations-discovery
    spec:
      discoveryRole: service
      endpoints:
        - port: http
          relabelConfigs:
            # Skip scrape for init containers
            - action: drop
              source_labels: [__meta_kubernetes_pod_container_init]
              regex: "true"
            # Match container port with port from annotation
            - action: keep_if_equal
              source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_port, __meta_kubernetes_pod_container_port_number]
            # Check if scrape is enabled
            - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
              action: keep
              regex: "true"
            # Set scrape path
            - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
              action: replace
              target_label: __metrics_path__
              regex: (.+)
            # Set port to address
            - source_labels:
                [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
              action: replace
              regex: ([^:]+)(?::\d+)?;(\d+)
              replacement: $1:$2
              target_label: __address__
            # Copy labels from pod labels
            - action: labelmap
              regex: __meta_kubernetes_pod_label_(.+)
            # Set pod name, container name, namespace and node name to labels
            - source_labels: [__meta_kubernetes_pod_name]
              target_label: pod
            - source_labels: [__meta_kubernetes_pod_container_name]
              target_label: container
            - source_labels: [__meta_kubernetes_namespace]
              target_label: namespace
            - source_labels: [__meta_kubernetes_pod_node_name]
              action: replace
              target_label: node
      namespaceSelector: {} # You need to specify namespaceSelector here
      selector: {} # You need to specify selector here

You can find yaml-file with this example here.
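For reference, the relabeling rules in the scrape object above read the pod-level prometheus.io annotations. A pod annotated along these lines would be a candidate for discovery; the pod name, image and port number are hypothetical, and the pod still has to be matched by the selector and namespaceSelector you configure.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-app                  # hypothetical name
  labels:
    app: example-app
  annotations:
    prometheus.io/scrape: "true"     # checked by the "keep" rule
    prometheus.io/port: "8080"       # compared with the container port number
    prometheus.io/path: "/metrics"   # copied into __metrics_path__
spec:
  containers:
    - name: app
      image: example.com/example-app:latest   # hypothetical image
      ports:
        - name: http
          containerPort: 8080
```

Annotation values must be strings, so the port and the scrape flag are quoted.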

Check out more information about: